Welcome to the DP-700 Official Practice Test – Part 4.

In this part, I explain 10 official practice questions from Microsoft in detail. Unlike the explanations on the Microsoft website, mine include screenshots to help you prepare for the DP-700 exam.

That said, these questions are very simple, and you should use them only to brush up on the basics. The real exam is rarely this easy. For more rigorous practice and deeper knowledge, check out my practice tests.

Once done, check out the DP-700 questions Part 5 and the accompanying DP-700 Practice Test video.

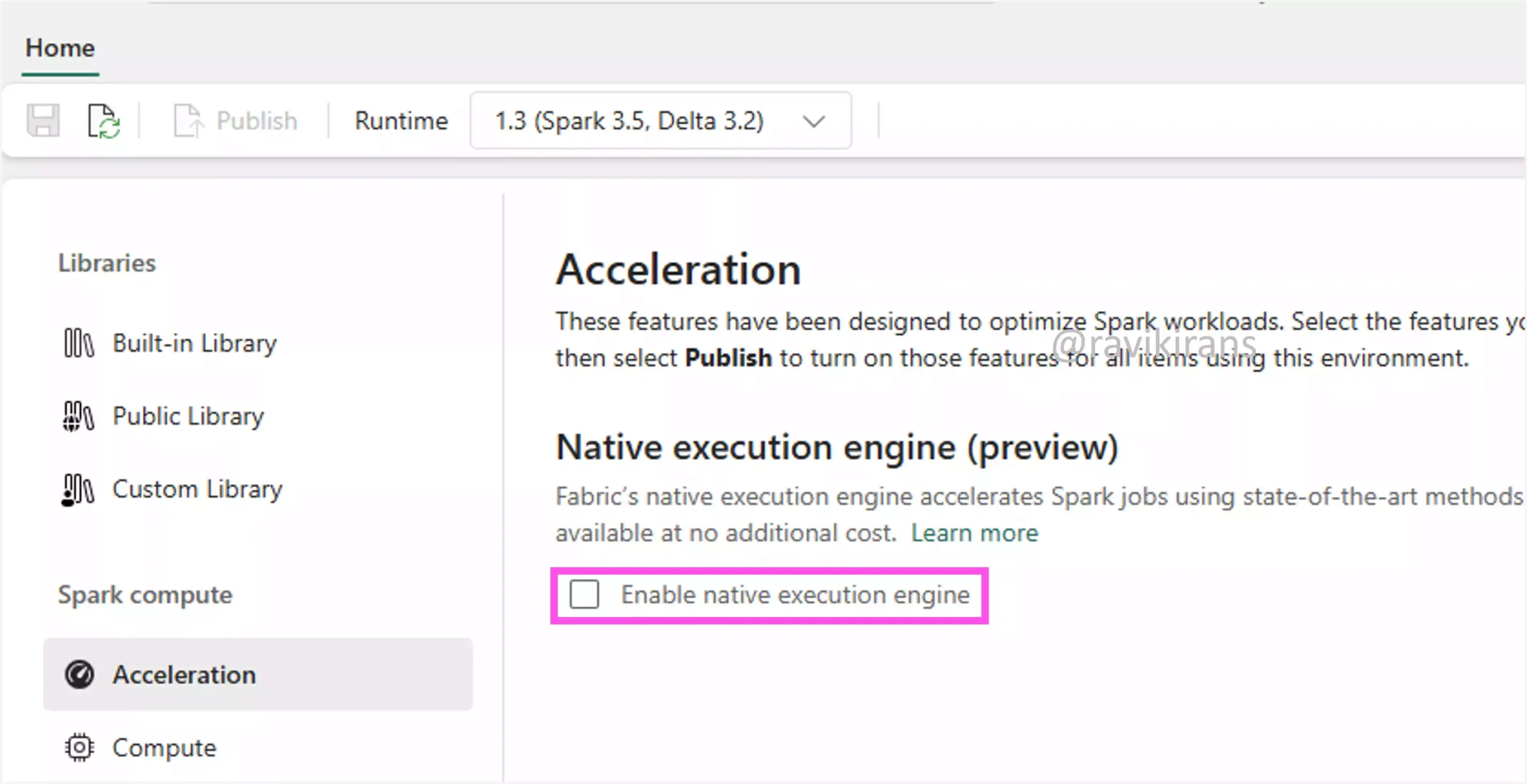

31] A company uses Microsoft Fabric to manage large-scale data processing tasks. Recently, the team experienced performance issues with Spark jobs involving complex transformations and aggregations on Delta format data.

You need to enhance performance of these Spark jobs without modifying the existing codebase.

What should you do?

A. Enable adaptive query execution.

B. Enable dynamic allocation.

C. Enable high concurrency mode.

D. Enable native execution engine.

The Native Execution Engine in Microsoft Fabric is an optimized engine that accelerates the performance of Spark workloads, especially those involving complex transformations and aggregations, without requiring code changes. When you create a new environment in Fabric, you can enable the native execution engine.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-engineering/native-execution-engine-overview?tabs=sparksql#when-to-use-the-native-execution-engine
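For a single notebook session, Microsoft's documentation also describes enabling the engine with a %%configure cell that sets the Spark property spark.native.enabled. The short Python sketch below only builds that JSON body so you can see its shape. The helper name is hypothetical, and you should confirm the property name on the linked page before relying on it.

```python
import json


def native_engine_configure_body(enabled: bool = True) -> str:
    """Build the JSON body of a %%configure notebook cell (hypothetical helper).

    Assumes the session-level property is `spark.native.enabled`, as described
    in the Fabric native execution engine documentation.
    """
    payload = {"conf": {"spark.native.enabled": str(enabled).lower()}}
    return json.dumps(payload, indent=2)


# Paste the printed JSON under a `%%configure` magic in a notebook cell.
print(native_engine_configure_body())
```

Because the change is scoped to a session, no existing Spark code has to be modified, which matches the requirement in the question.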

Option D is the correct answer.

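Option A is incorrect. Adaptive Query Execution (AQE) is a Spark feature that re-optimizes query plans at runtime using statistics, which helps especially on large or skewed datasets. However, AQE is enabled by default in Spark 3.2 and later, so enabling it is unlikely to deliver a further boost here, whereas the native execution engine specifically targets complex transformations and aggregations.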

Reference Link: https://learn.microsoft.com/en-us/azure/databricks/optimizations/aqe

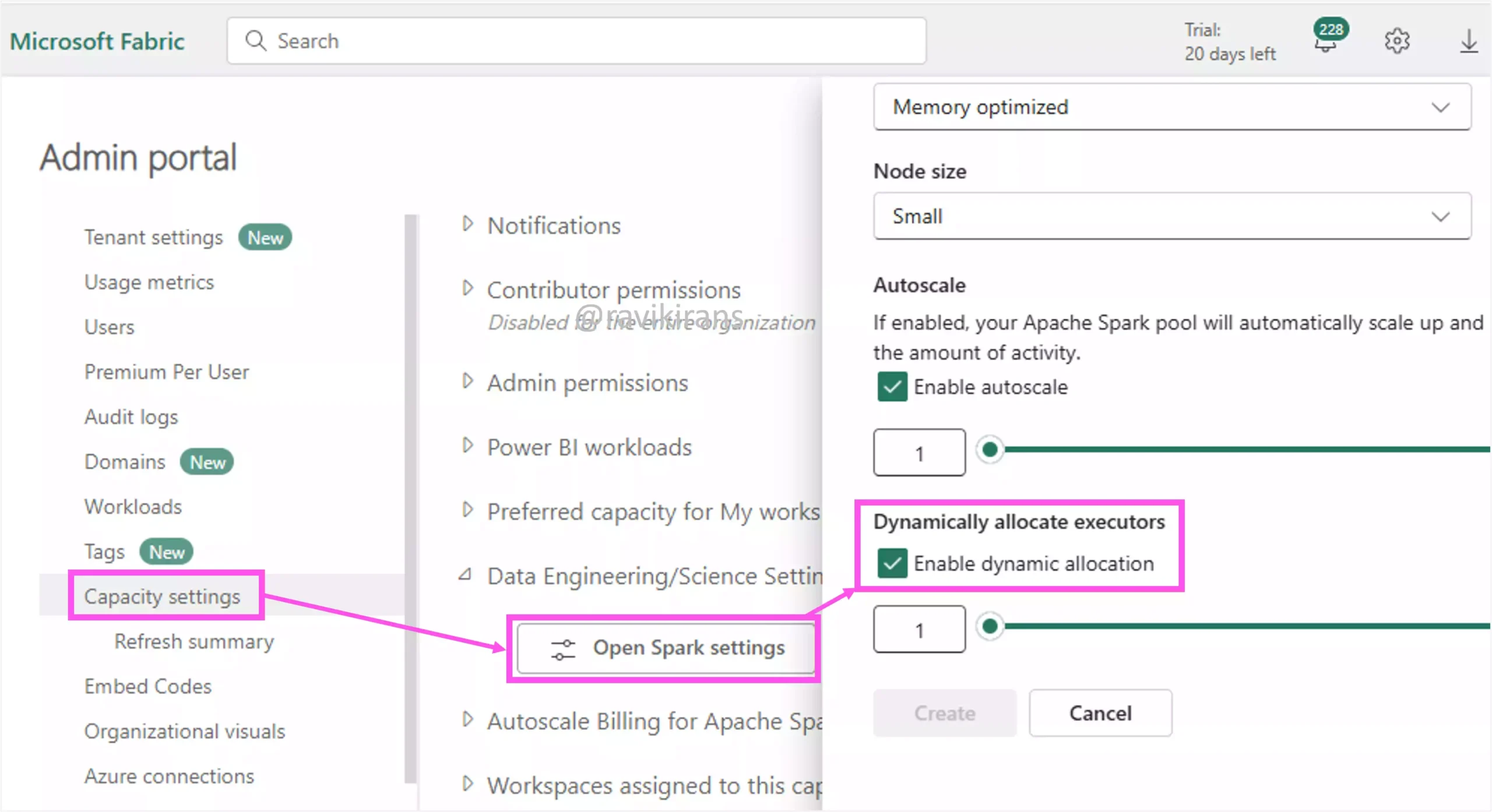

Option B is incorrect. Dynamic allocation improves resource efficiency by adjusting executors at runtime, but it doesn’t significantly boost performance for complex Delta transformations or aggregations.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-engineering/spark-compute#dynamic-allocation

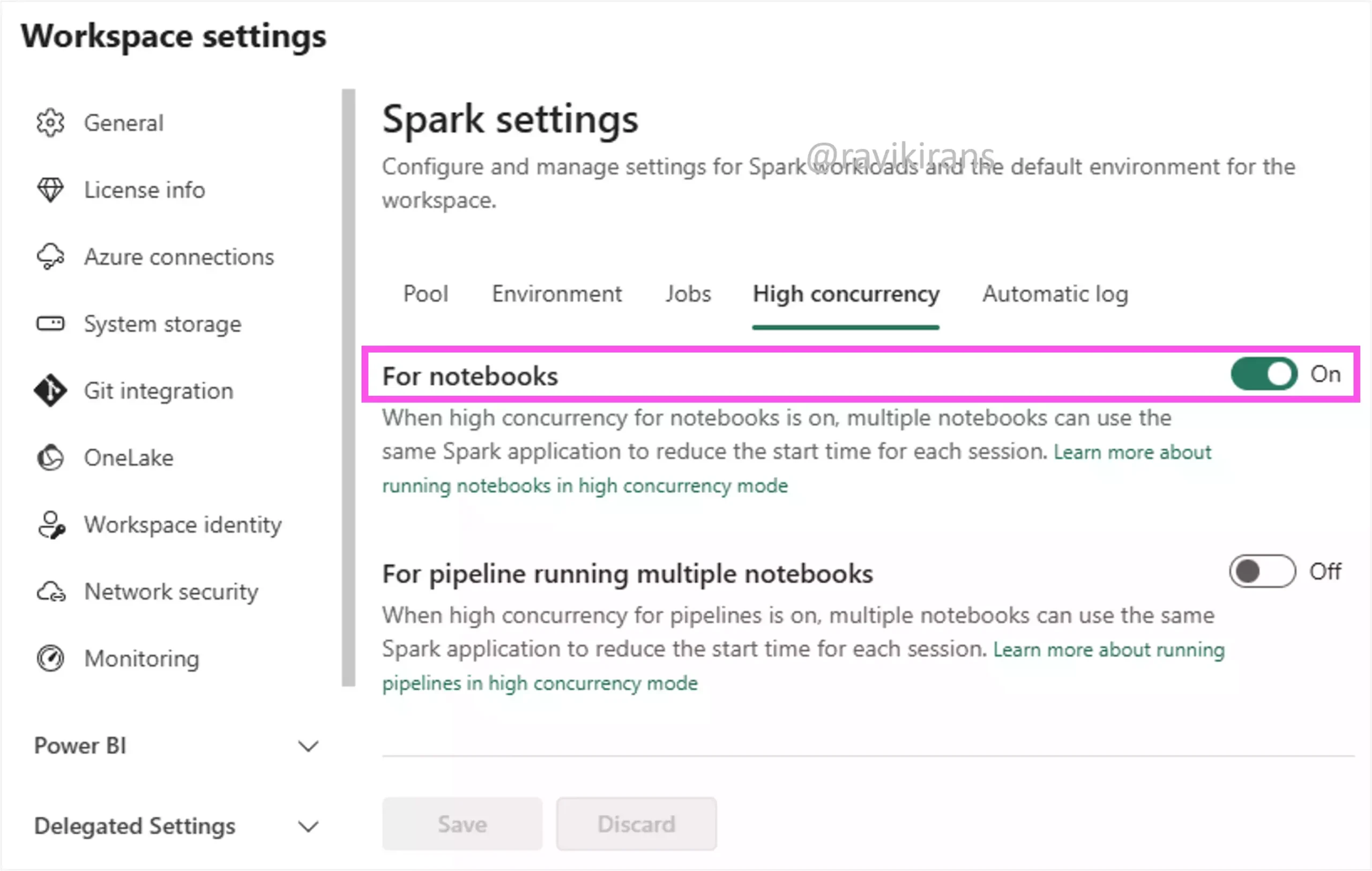

Option C is incorrect. When you enable high concurrency, multiple notebooks can use the same Spark application to reduce the start time for each session. However, it doesn’t improve performance for individual complex Spark jobs.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-engineering/high-concurrency-overview

32] Your company uses Microsoft Fabric for its data warehouse, loading large datasets from Azure Blob Storage.

You need to ensure efficient data ingestion with minimal errors.

What should you do?

A. Enable data partitioning before loading.

B. Use a single INSERT statement for each row.

C. Use COPY statement with wildcards.

D. Use multiple INSERT statements for each row.

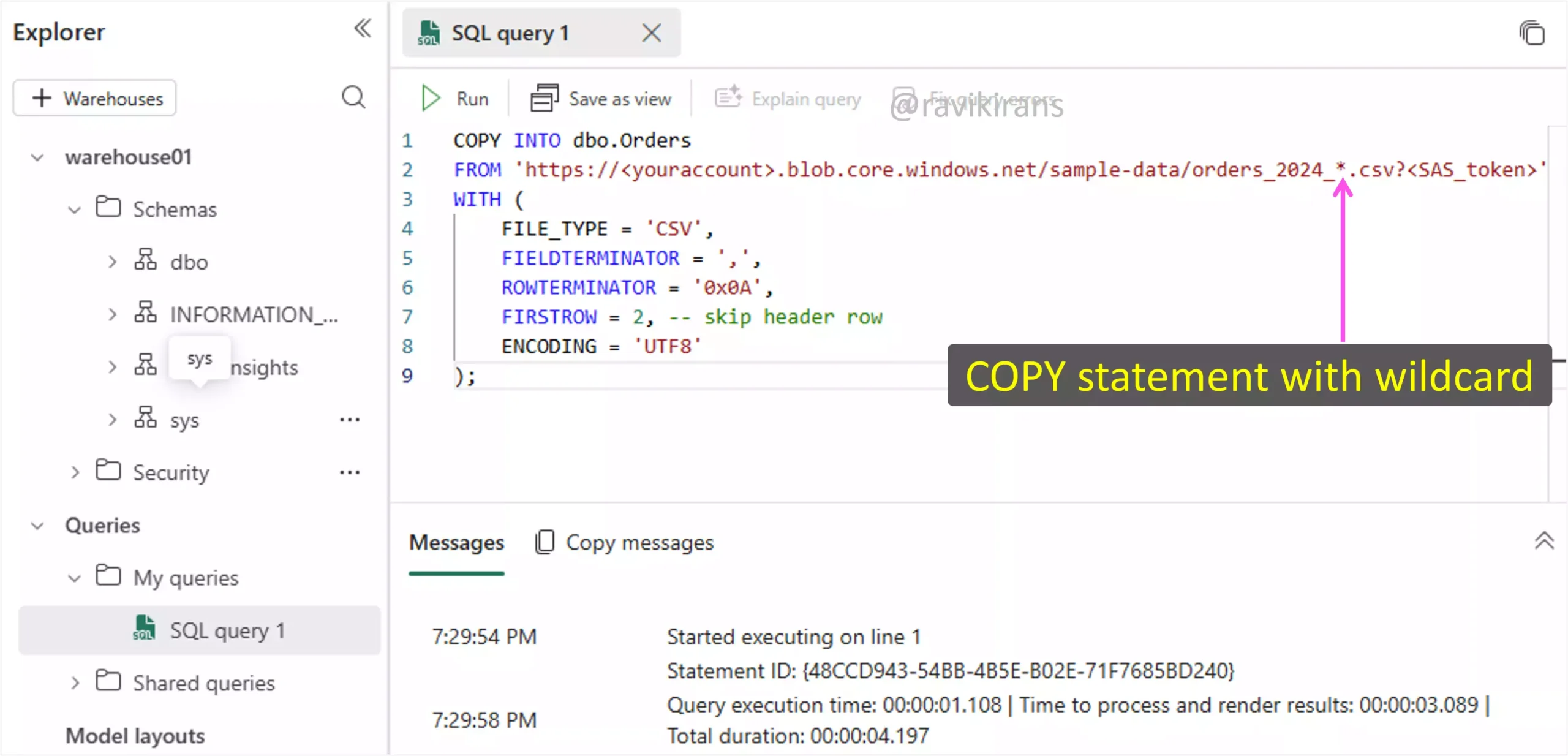

The most efficient way to load large datasets from Azure Blob Storage is the COPY statement with wildcards, as shown in the screenshot above. Wildcards let you load multiple files from Blob Storage in a single operation.
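To make the wildcard idea concrete, here is a minimal sketch that uses Python's fnmatch on a hypothetical list of blob names and then builds the matching COPY INTO statement. fnmatch only approximates how COPY INTO expands wildcards, and the table name, storage URL placeholders, and folder layout are assumptions. The statement also omits the CREDENTIAL clause that a private container would need.

```python
from fnmatch import fnmatch

# Hypothetical blob names inside one container.
blobs = [
    "sales/2024/01/part-0001.parquet",
    "sales/2024/01/part-0002.parquet",
    "sales/2024/02/part-0001.parquet",
    "sales/2024/02/_SUCCESS",
]

# One wildcard pattern selects every Parquet file in every month folder.
pattern = "sales/2024/*/*.parquet"
matched = [name for name in blobs if fnmatch(name, pattern)]

# The equivalent single T-SQL statement (table name and URL parts are placeholders).
copy_sql = f"""
COPY INTO dbo.Sales
FROM 'https://<storage-account>.blob.core.windows.net/<container>/{pattern}'
WITH (FILE_TYPE = 'PARQUET');
""".strip()

print(matched)
print(copy_sql)
```

The marker file _SUCCESS is skipped, and all three Parquet files are picked up by one statement instead of three separate loads.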

Option C is the correct answer.

Option A is incorrect. Partitioning improves query performance after loading but doesn’t enhance data ingestion efficiency.

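Options B and D are incorrect. Inserting data row by row adds per-statement overhead and is far slower than a single bulk COPY for large datasets.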

33] Your organization uses Microsoft Fabric Lakehouse to store and analyze large datasets. Recently, the team noticed increased storage costs due to old files that are no longer needed for current operations.

You need to reduce storage costs by removing unnecessary files while maintaining data integrity.

What should you do?

A. Enable V-Order compression.

B. Reduce file retention period.

C. Use the OPTIMIZE command.

D. Use VACUUM command.

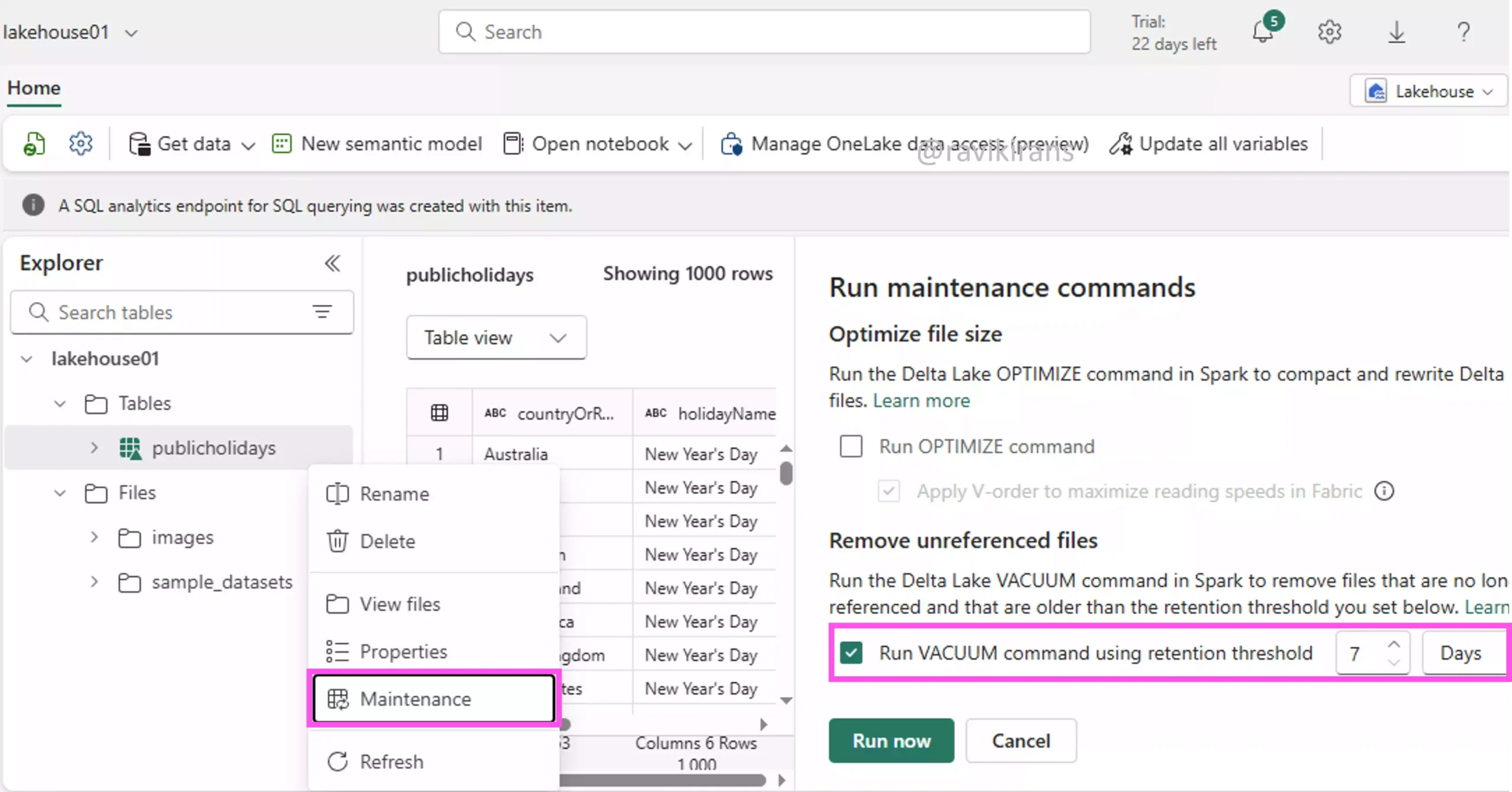

The VACUUM command removes old files no longer referenced by a Delta table log. Select the table on which you want to run the command and navigate to Maintenance to find the option to run the VACUUM command.

You can also run the command from a Fabric notebook. Option D is the correct answer.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-table-maintenance#table-maintenance-operations
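The rule VACUUM applies can be modeled in a few lines. This is a simplified sketch, not Delta Lake's implementation: a file is deleted only if the current table version no longer references it and it is older than the retention threshold (7 days, or 168 hours, by default in Delta Lake). In a notebook, the real command would be something like VACUUM sales RETAIN 168 HOURS, where sales is a hypothetical table name.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DataFile:
    path: str
    modified: datetime
    referenced: bool  # is the file part of the current table version?


def files_to_vacuum(files, now, retention=timedelta(hours=168)):
    """Return files VACUUM would delete: unreferenced AND older than the retention window."""
    cutoff = now - retention
    return [f.path for f in files if not f.referenced and f.modified < cutoff]


now = datetime(2025, 1, 31)
files = [
    DataFile("part-a.parquet", datetime(2025, 1, 30), referenced=True),
    DataFile("part-b.parquet", datetime(2025, 1, 2), referenced=False),   # old and unreferenced
    DataFile("part-c.parquet", datetime(2025, 1, 29), referenced=False),  # unreferenced but recent
    DataFile("part-d.parquet", datetime(2025, 1, 1), referenced=True),    # old but still in use
]
print(files_to_vacuum(files, now))  # ['part-b.parquet']
```

Files the current version still uses are never touched, which is why VACUUM reduces storage while keeping the table intact.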

All other options are incorrect.

V-Order compression boosts query speed by reorganizing file layout, but doesn’t clear old data.

Reducing the file retention period might lower storage use, but it risks losing the older versions needed for time travel and recovery.

OPTIMIZE merges small files for performance but doesn’t remove outdated data.

34] Your company uses Microsoft Fabric Lakehouse to manage large datasets for analytics. Recently, query performance on a Delta table has degraded due to an increase in small Parquet files.

You need to optimize the Delta table to improve query efficiency.

Each correct answer presents part of the solution. Which two actions should you perform?

A. Apply V-Order for better data sorting and compression.

B. Convert to Hive table format.

C. Increase the number of partitions in the Delta table.

D. Use the OPTIMIZE command to consolidate files.

Option D is definitely correct. Use the OPTIMIZE command to merge many small Parquet files into fewer, larger ones.
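OPTIMIZE essentially bin-packs small files into larger ones. The sketch below is a simplified greedy model of that idea, not the actual algorithm, and the 1,024 MB target is an assumption (roughly the 1 GB default commonly used for Delta compaction). In Fabric, the command itself would look like OPTIMIZE sales VORDER, where sales is a hypothetical table name and VORDER also applies V-Order during compaction.

```python
def plan_compaction(file_sizes_mb, target_mb=1024):
    """Group small files into bins of at most target_mb (simplified greedy bin-packing)."""
    bins, current, current_size = [], [], 0
    for size in sorted(file_sizes_mb):
        # Start a new output file when adding this one would overshoot the target.
        if current and current_size + size > target_mb:
            bins.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        bins.append(current)
    return bins


small_files = [8] * 300  # 300 files of 8 MB, e.g. left behind by frequent small writes
bins = plan_compaction(small_files)
print(len(small_files), "files ->", len(bins), "files")  # 300 files -> 3 files
```

Fewer, larger files mean fewer file opens and less metadata to read per query, which is where the performance gain comes from.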

But since we need to select two options, we can consider using V-Order too, which complements OPTIMIZE by improving how data is stored within those files. So if the query performance is still suboptimal (due to poorly ordered or compressed data), applying V-Order will further improve performance.

Option A is the other correct answer.

Option B is incorrect. Converting to Hive table format offers no advantage over Delta and may reduce feature compatibility.

Option C is incorrect. Increasing the number of partitions can hurt performance by creating more small files.

35] Your organization has implemented a Microsoft Fabric data warehouse to handle large-scale data ingestion and processing. The current setup involves frequent data loads throughout the day, which sometimes lead to performance bottlenecks.

You need to optimize the data ingestion process to handle large volumes efficiently without causing performance issues.

Each correct answer presents part of the solution. Which two actions should you take?

A. Divide large INSERT operations into smaller parts.

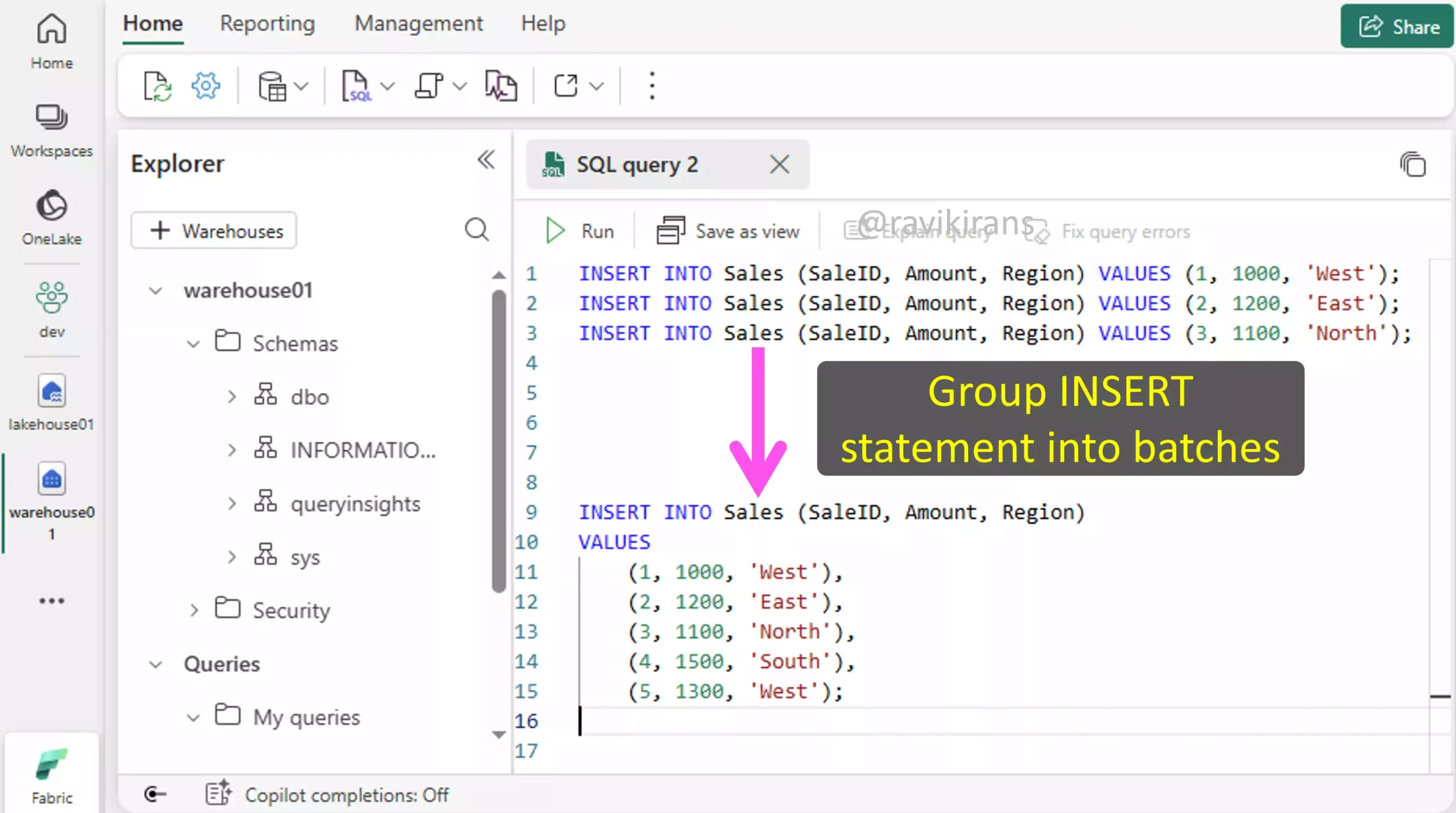

B. Group INSERT statements into batches.

C. Use larger data types for columns.

D. Use smaller data types for columns.

To reduce the overhead of frequent data loads that lead to performance bottlenecks, group INSERT statements into batches instead of running them one at a time.

This reduces overhead and improves performance by minimizing transaction costs. Option B is one of the correct answers.
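As a minimal sketch of batching, the hypothetical helper below turns 2,500 rows into three multi-row INSERT statements instead of 2,500 single-row ones. The table name, column names, and batch size are illustrative assumptions, and the naive value formatting is for demonstration only; production code should use parameterized queries or a bulk path such as COPY INTO.

```python
def batched_inserts(table, rows, batch_size=1000):
    """Yield one multi-row INSERT statement per batch of (order_id, amount) rows."""
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # Naive formatting for illustration only; never build SQL from untrusted input.
        values = ", ".join(f"({order_id}, {amount})" for order_id, amount in batch)
        yield f"INSERT INTO {table} (OrderId, Amount) VALUES {values};"


rows = [(i, i * 10) for i in range(2500)]
statements = list(batched_inserts("dbo.Sales", rows))
print(len(statements))  # 3 statements instead of 2,500
```

Each statement is one round trip and one transaction, so the fixed per-statement cost is paid 3 times rather than 2,500 times.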

Option A is exactly the opposite of option B. Since option B is correct, option A cannot be. Splitting large INSERTs into many small ones increases overhead and uses more resources (for logging and locking).

Similar to options A and B, options C and D are also opposites. Between them, I would choose option D: smaller data types save storage and memory, which speeds up I/O and queries. Option D is the other correct answer.
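A quick back-of-the-envelope calculation shows why column width matters. This sketch assumes a hypothetical 100-million-row fact table and compares the fixed 4-byte width of an INT-style integer with the 8-byte width of a BIGINT-style integer; real savings in a columnar, compressed store will differ.

```python
import struct

ROWS = 100_000_000  # hypothetical fact-table row count

# Uncompressed widths of standard-size integers, matching INT (4 bytes) and BIGINT (8 bytes).
int_bytes = struct.calcsize("<i")
bigint_bytes = struct.calcsize("<q")

saved = ROWS * (bigint_bytes - int_bytes)
print(f"{saved / 2**20:,.0f} MiB saved per column, before compression")
```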

36] Your company is implementing real-time data processing using Spark Structured Streaming in Microsoft Fabric. Data from IoT devices needs to be stored in a Delta table.

You need to ensure efficient processing of streaming data while preventing errors due to data changes.

What should you do?

A. Enable overwrite mode.

B. Set checkpointLocation to null.

C. Use ignoreChanges.

D. Use the Append method.

The ignoreChanges option is supported only when reading from Delta tables with Structured Streaming, i.e., using .readStream.

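```python
# Runs in a Fabric notebook, where the SparkSession `spark` is predefined.
df = (
    spark.readStream
    .format("delta")
    # Don't fail the stream when rows in the source Delta table are updated or deleted
    .option("ignoreChanges", "true")
    .load("/delta/iot_data")
)
```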

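With the ignoreChanges option, the stream does not fail when existing rows in the source Delta table are updated or deleted. However, deletes are not propagated downstream, and files rewritten by an update are re-emitted in full, so downstream consumers may receive duplicate rows.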

Here, however, the data comes from IoT devices, and the task is to write it to a Delta table. Since ignoreChanges applies only when reading from a Delta table, not when writing to one, option C is incorrect.

Reference Link: https://medium.com/@sujathamudadla1213/what-is-ignorechanges-in-spark-structured-streaming-59697761c418

Since we need to prevent errors due to data changes, using Append is the correct choice as it adds only the new rows to the Delta table. Option D is the correct answer.
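The toy model below, written in plain Python rather than Spark, illustrates why append mode plus a checkpoint is safe: the checkpoint remembers the last committed batch, so a batch replayed after a restart is not appended twice. In PySpark, the corresponding write is typically df.writeStream.format("delta").outputMode("append").option("checkpointLocation", <path>) followed by a start or toTable call. The class and sample data here are hypothetical.

```python
class ToyAppendSink:
    """In-memory model of an append-mode streaming sink with a checkpoint (not Spark code)."""

    def __init__(self):
        self.table = []           # stands in for the target Delta table
        self.last_committed = -1  # stands in for the checkpoint

    def process(self, batch_id, rows):
        if batch_id <= self.last_committed:
            return  # batch was already committed before a restart: skip it
        self.table.extend(rows)   # append mode: only new rows are added
        self.last_committed = batch_id


sink = ToyAppendSink()
sink.process(0, [{"device": "d1", "temp": 21.5}])
sink.process(1, [{"device": "d2", "temp": 19.0}])
sink.process(1, [{"device": "d2", "temp": 19.0}])  # replay after a simulated restart
print(len(sink.table))  # 2
```

Removing the checkpoint (option B) would remove exactly the bookkeeping that makes the replay harmless.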

Option A is incorrect. The overwrite mode attempts to replace the entire table continuously, resulting in data loss.

Option B is incorrect as it disables checkpointing, which is critical for fault tolerance. It puts your streaming pipeline at risk of data loss.

37] Your company has set up a Microsoft Fabric lakehouse to store and analyze data from multiple sources, including Azure Event Hubs and local files. The data is used for generating Power BI reports.

You need to implement a loading pattern that supports both full and incremental data loads.

Which approach do you use?

A. Develop a custom ETL tool for data loads.

B. Use Azure Synapse Analytics for data loads.

C. Use Data Factory for incremental data loads.

D. Use Dataflows Gen2 for full and incremental data loads.

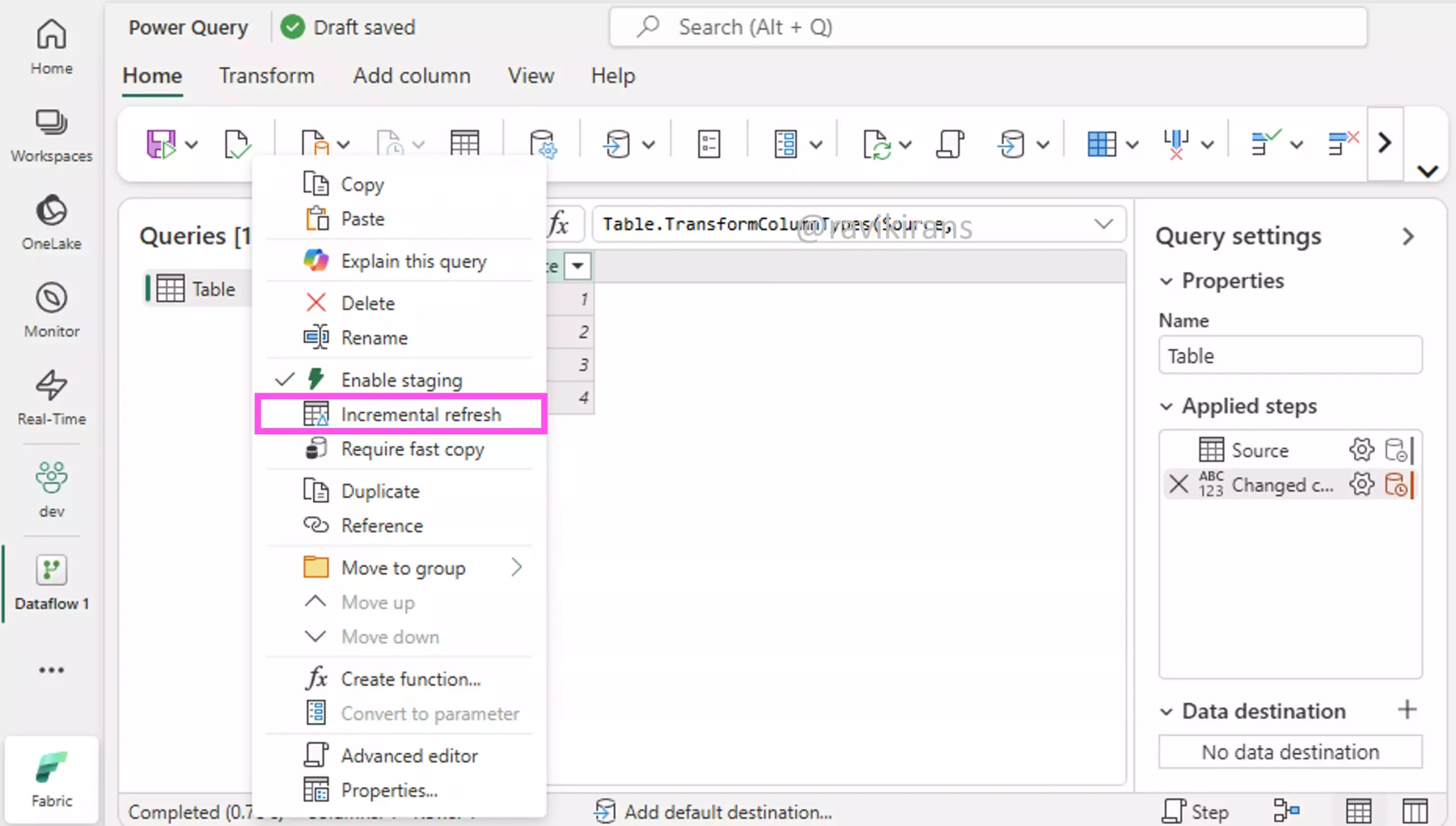

The best solution would be to choose a tool that is natively integrated with Microsoft Fabric. Dataflows Gen2 is natively integrated with Fabric and supports full and incremental data loads.

Option D is the best answer.

Option A is not a great choice as it requires high maintenance and does not leverage native Fabric capabilities.

Although Data Factory does support incremental data loads, option C mentions only incremental loads, whereas the requirement covers both full and incremental loads. Dataflows Gen2 is also a better choice than Azure Synapse Analytics due to its native Fabric integration.

38] Your company has implemented a Microsoft Fabric lakehouse to store and analyze data from multiple sources. The data is used for generating Microsoft Power BI reports and requires regular updates.

You need to ensure that the data in the Microsoft Fabric lakehouse is updated incrementally to reflect changes from the source systems.

Which method should you use to achieve incremental updates?

A. Implement a watermark strategy.

B. Use a full data reload approach.

C. Use batch processing for updates.

D. Use Change Data Capture (CDC).

At the time of writing, Change Data Capture (CDC) replication in Copy job does not support Microsoft Fabric Lakehouse as a destination. Option D is incorrect.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-factory/cdc-copy-job#supported-connectors

The most practical way to perform incremental updates is to track a watermark column (e.g., LastModifiedDate) from the source. Tools like Dataflows Gen2 or the Copy Data activity in a pipeline support filtering based on a watermark.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-factory/tutorial-incremental-copy-data-warehouse-lakehouse
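Here is a minimal sketch of the watermark pattern in plain Python, using the LastModifiedDate column mentioned above. The function name and sample rows are hypothetical. A real pipeline would persist the watermark between runs and would usually upsert rather than append, so that updated rows do not appear twice. Passing a very old watermark on the first run turns the same logic into a full load.

```python
from datetime import datetime


def incremental_load(source_rows, target_rows, watermark):
    """Append rows modified after the stored watermark, then return the advanced watermark."""
    new_rows = [r for r in source_rows if r["LastModifiedDate"] > watermark]
    target_rows.extend(new_rows)  # simplified: a real load would usually upsert
    if new_rows:
        watermark = max(r["LastModifiedDate"] for r in new_rows)
    return watermark


source = [
    {"Id": 1, "LastModifiedDate": datetime(2025, 1, 1)},
    {"Id": 2, "LastModifiedDate": datetime(2025, 1, 5)},
    {"Id": 3, "LastModifiedDate": datetime(2025, 1, 9)},
]
target = []
wm = incremental_load(source, target, watermark=datetime(2025, 1, 3))
print([r["Id"] for r in target], wm)  # [2, 3] 2025-01-09 00:00:00
```

Running it again with the returned watermark copies nothing, because no source row is newer than 2025-01-09.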

Option A is the correct answer.

Options B and C do not support incremental updates.

39] Your company experiences performance issues with its current ETL process, which involves loading large volumes of sales data into a fact table in a Microsoft Fabric data warehouse. The process currently uses a full reload strategy, which is time-consuming and resource-intensive.

You need to enhance the ETL process to boost performance and decrease resource usage.

Each correct answer presents part of the solution. Which two actions should you take?

A. Continue full reloads with increased compute resources.

B. Implement an incremental load strategy.

C. Manually partition the fact table.

D. Switch to Azure Data Factory for ETL.

E. Use Change Data Capture (CDC) for tracking changes.

An incremental load strategy loads only new or changed data instead of reloading everything, reducing processing time and compute usage. Depending on what the source supports, you can use watermarks, delta loads, or CDC.

Option B is one of the correct answers.

Additionally, per Microsoft's documentation, pipelines can stream changes “into Synapse Analytics or Microsoft Fabric Warehouse” from CDC-enabled source databases.

So, option E is the other correct answer.
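To show what applying captured changes means, here is a simplified sketch that replays CDC-style insert, update, and delete records against a target keyed by primary key. The record format, operation names, and table contents are hypothetical, not the format of any specific Fabric connector.

```python
def apply_changes(target, changes):
    """Apply (operation, key, row) change records to a dict keyed by primary key."""
    for op, key, row in changes:
        if op in ("insert", "update"):
            target[key] = row      # upsert the latest version of the row
        elif op == "delete":
            target.pop(key, None)  # drop the row if it exists
    return target


fact_sales = {101: {"qty": 5}, 102: {"qty": 2}}
changes = [
    ("update", 101, {"qty": 7}),
    ("insert", 103, {"qty": 1}),
    ("delete", 102, None),
]
print(apply_changes(fact_sales, changes))  # {101: {'qty': 7}, 103: {'qty': 1}}
```

Only the three changed rows are touched, instead of reloading the whole fact table.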

Option A is incorrect: adding compute resources increases cost without removing the inefficiency of full reloads.

Option C is not required as Fabric automatically partitions tables.

Option D is not required because Fabric pipelines and Dataflows Gen2 can already handle ETL tasks.

40] You have a large dataset stored in a Microsoft Fabric Lakehouse, including sales transactions from multiple regions.

You need to implement a strategy to ensure high data quality and support incremental data loads while maintaining data integrity and consistency.

Each correct answer presents part of the solution. Which two actions should you perform?

A. Adopt a medallion architecture for data organization.

B. Load data directly into the dimensional model without transformation.

C. Use Data Factory pipelines for data transformation.

D. Use Dataflows Gen2 for data transformation and cleaning.

Adopting a medallion architecture provides a structured, layered approach to managing large datasets in Fabric Lakehouse. This architecture decouples data ingestion from transformation, allowing incremental updates at each stage and enforcing data quality progressively, making it a best practice in Microsoft Fabric for analytics.
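The layered flow can be sketched in plain Python with hypothetical sales records: the bronze layer holds raw rows as ingested, the silver layer rejects incomplete rows and standardizes values, and the gold layer aggregates per region for reporting. The field names and quality rules are illustrative assumptions, not a prescribed Fabric implementation.

```python
def to_silver(bronze_rows):
    """Silver: keep only rows that pass basic quality checks, with cleaned values and types."""
    silver = []
    for r in bronze_rows:
        if r.get("order_id") is None or not r.get("region") or r.get("amount") in (None, ""):
            continue  # reject incomplete records
        silver.append({
            "order_id": int(r["order_id"]),
            "region": r["region"].strip().title(),
            "amount": float(r["amount"]),
        })
    return silver


def to_gold(silver_rows):
    """Gold: aggregate cleaned rows into a reporting-ready table."""
    totals = {}
    for r in silver_rows:
        totals[r["region"]] = totals.get(r["region"], 0.0) + r["amount"]
    return totals


# Bronze: raw records kept exactly as ingested (hypothetical sample data).
bronze = [
    {"order_id": "1", "region": " west ", "amount": "100.0"},
    {"order_id": "2", "region": "East", "amount": "50.5"},
    {"order_id": None, "region": "West", "amount": "10"},  # fails the quality check
    {"order_id": "3", "region": "WEST", "amount": "25"},
]
print(to_gold(to_silver(bronze)))  # {'West': 125.0, 'East': 50.5}
```

Because bronze keeps the raw data, a fix to the silver rules can be replayed later without re-ingesting from the source.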

Option A is one of the correct answers.

Between Dataflows Gen2 and Data Factory pipelines, I would use Dataflows Gen2 for transformation and cleaning to ensure high data quality, and pipelines to orchestrate those dataflows (e.g., to update a watermark). Dataflows Gen2 also supports incremental data loads.

Option D is the other correct answer.

Option B is incorrect as loading data directly skips data validation and cleaning, risking poor data quality and integrity issues.

Check out my DP-700 practice tests.
