Welcome to the DP-700 Official Practice Test – Part 2.

In this part, I explain 10 official sample questions from Microsoft in detail. Unlike the explanations on the Microsoft website, mine include screenshots to help you prepare for the DP-700 exam.

That said, these questions are very simple and should only be used to brush up on the basics. The real exam is rarely this easy. For more rigorous practice and in-depth knowledge, check out my practice tests.

Once done, check out DP-700 questions Part 3 and the accompanying DP-700 Practice Test video.

11] Your company is implementing a new data governance strategy using Microsoft Fabric. The data warehouse contains various tables with sensitive information that must be protected from unauthorized access.

You need to ensure only authorized medical personnel can access the ‘MedicalHistory’ column in the ‘Patients’ table.

What should you use?

A. Column-Level Security

B. Dynamic Data Masking

C. Row-Level Security

D. Workspace Roles

Option A is the correct answer. Column-Level Security restricts which columns a user can query: you grant SELECT only on the permitted columns, so only authorized medical personnel can read the ‘MedicalHistory’ column in the ‘Patients’ table.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-warehouse/column-level-security
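To make the contrast with masking concrete, here is a minimal Python sketch. It is a toy model of the effect of column-level security, not how Fabric enforces it, and the rows, principal names, and grants are hypothetical. In the warehouse itself, the equivalent grant would be a T-SQL statement such as GRANT SELECT ON dbo.Patients (PatientID, Name) TO a user or role.

```python
# Toy model of column-level security (CLS): a principal may read only the
# columns it was granted SELECT on. Data, principals, and grants are hypothetical.

PATIENTS = [
    {"PatientID": 1, "Name": "A. Smith", "MedicalHistory": "Asthma"},
    {"PatientID": 2, "Name": "B. Jones", "MedicalHistory": "Diabetes"},
]

# Principal -> columns it may SELECT (mirrors GRANT SELECT ON table (cols) TO ...).
COLUMN_GRANTS = {
    "medical_staff": {"PatientID", "Name", "MedicalHistory"},
    "billing_clerk": {"PatientID", "Name"},
}


def select(principal, columns, rows=PATIENTS):
    """Return only the requested columns, or refuse if any column is not granted."""
    allowed = COLUMN_GRANTS.get(principal, set())
    denied = [c for c in columns if c not in allowed]
    if denied:
        # The whole query is rejected; nothing is returned, masked or otherwise.
        raise PermissionError(f"SELECT denied for {principal} on {denied}")
    return [{c: row[c] for c in columns} for row in rows]
```

The unauthorized query fails outright instead of returning placeholder values, which is the key difference from Dynamic Data Masking (option B).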

Option B is incorrect. Dynamic Data Masking hides values in query results from non-privileged users but doesn’t block access; they can still query the column.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-warehouse/dynamic-data-masking

Option C is incorrect. Row-Level Security limits data visibility by row, not by column.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-warehouse/row-level-security

Option D is incorrect. Workspace Roles control access to content in the workspace, like reports or datasets, not to specific columns in the table.

12] Your company uses a Microsoft Fabric data warehouse to store employee salary information.

You need to configure permissions so that only specific users can view unmasked salary data while others see masked data.

Which permission should you grant to the authorized users?

A. Grant ALTER ANY MASK permission.

B. Grant SELECT permission.

C. Grant UNMASK permission.

D. Grant VIEW DEFINITION permission.

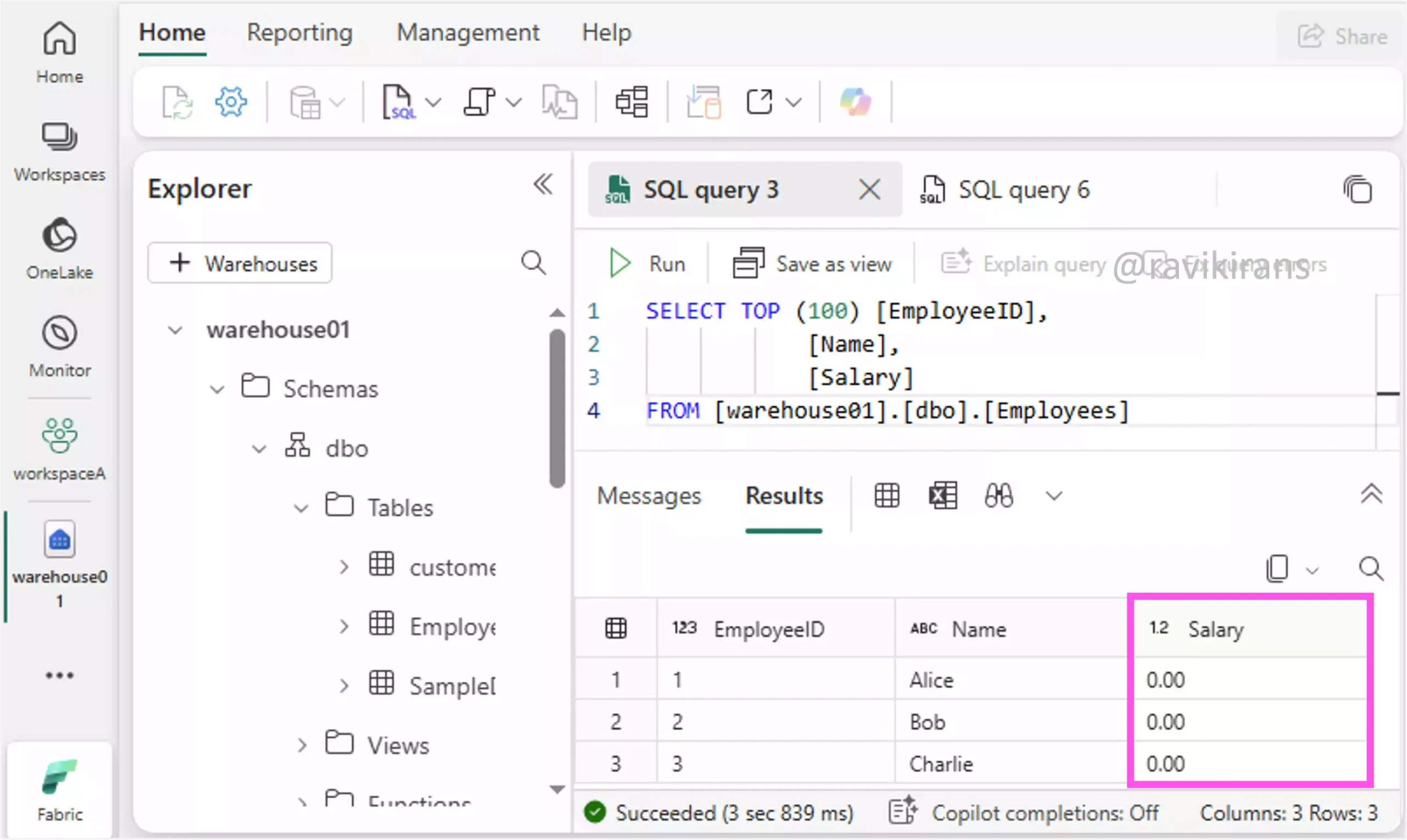

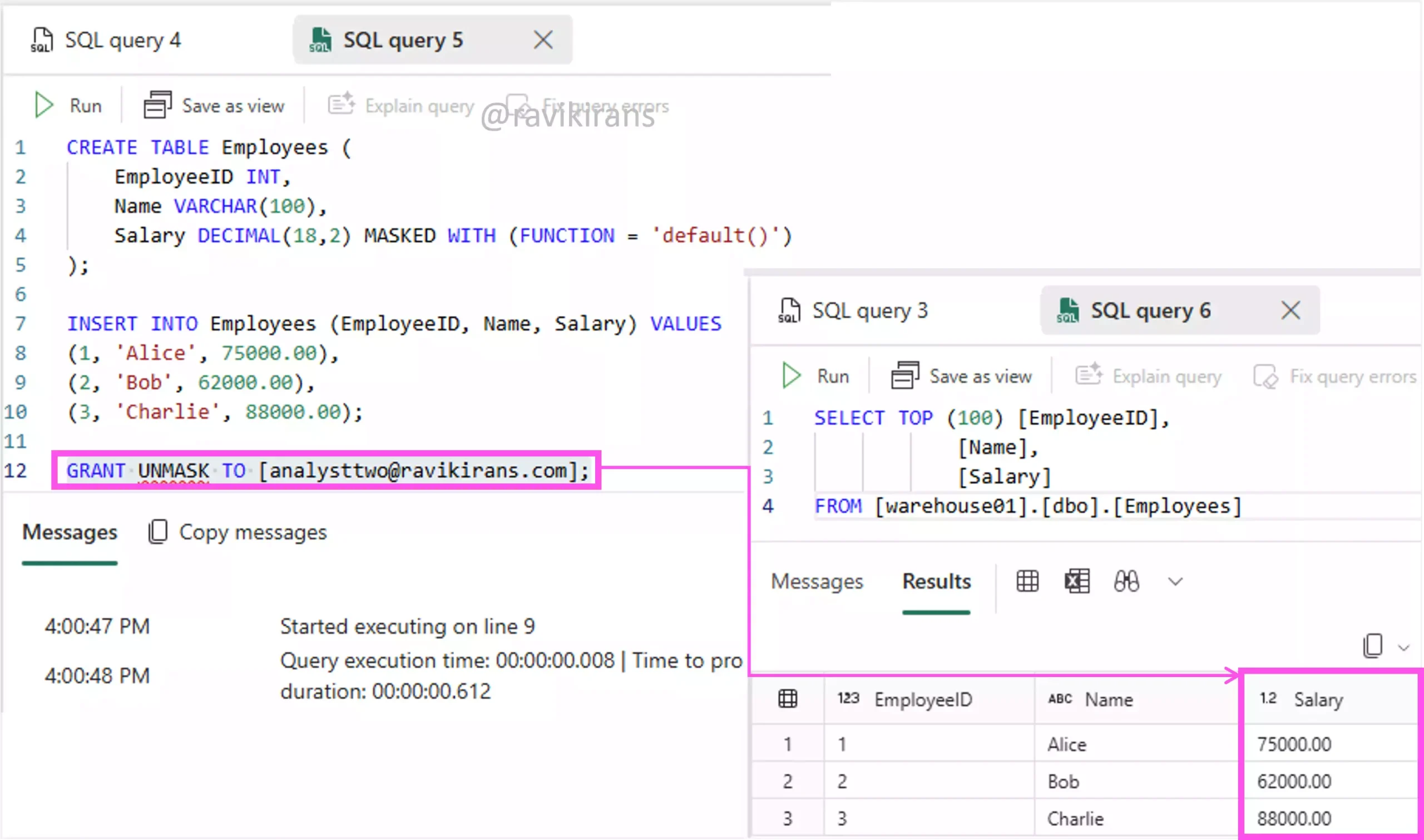

In this example, the salary column is masked, so users without the UNMASK permission see 0s instead of the actual salaries.

To let a user view the actual (unmasked) salary values, run the GRANT UNMASK T-SQL command for that user. After this, the user can see the unmasked column.

Option C is the correct answer.
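The behavior above can be sketched as a toy Python model. It assumes the salary column uses the default() mask, which returns 0 for numeric columns; the rows and user names are hypothetical, and the real masking happens inside the warehouse engine, not in client code.

```python
# Toy model of dynamic data masking (DDM) with the default() mask.
# default() on a numeric column returns 0 to users who lack UNMASK.
# Rows and user names are hypothetical.

SALARIES = [
    {"EmployeeID": 1, "Salary": 85000},
    {"EmployeeID": 2, "Salary": 92000},
]

MASKED_COLUMNS = {"Salary"}      # defined MASKED WITH (FUNCTION = 'default()')
UNMASK_GRANTED = {"hr_manager"}  # users who received: GRANT UNMASK TO <user>


def query(user, rows=SALARIES):
    """Return rows as the user would see them: masked unless UNMASK is granted."""
    if user in UNMASK_GRANTED:
        return [dict(row) for row in rows]
    return [
        {col: (0 if col in MASKED_COLUMNS else val) for col, val in row.items()}
        for row in rows
    ]
```

Both users can run the same query (they both have SELECT); only UNMASK changes what they see, which is why options B and D don't solve the problem.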

Option A is incorrect. ALTER ANY MASK lets the user add, replace, or remove column masks but doesn’t grant access to unmasked data.

Reference Link: https://learn.microsoft.com/en-us/sql/relational-databases/security/dynamic-data-masking?view=sql-server-ver17#permissions

Option B is incorrect. SELECT enables users to read data, though they will see masked values if the UNMASK permission is not granted.

Option D is incorrect. VIEW DEFINITION allows users to view the database schema and metadata, not the actual data.

13] Your organization is using Microsoft Fabric to manage a data warehouse containing sensitive financial data, including columns such as AccountNumber, Balance, and TransactionHistory.

You need to ensure that only authorized finance team members can view the TransactionHistory column.

What should you do?

A. Column-Level Security

B. Data Encryption

C. Dynamic Data Masking

D. Row-Level Security

This question is very similar to question 11. When the requirement is to control who can access a specific column, column-level security is the solution. Option A is the correct answer.

Option B is incorrect. Data Encryption secures data at rest or in transit, but doesn’t manage access to specific columns for users.

Option C is incorrect. Dynamic Data Masking masks data from unauthorized users but doesn’t strictly limit column access.

Option D is incorrect. Row-Level Security restricts access to specific rows, not columns.

14] Your company has a data warehouse in Microsoft Fabric to store sales data. Different departments need access to this data, but each department should only see data relevant to their operations.

You need to configure the data warehouse so each department can only view its own sales data.

Each correct answer presents part of the solution. Which two actions should you take?

Select all answers that apply.

A. Create a security policy with a filter predicate for departments.

B. Implement column-level security for Sales table access.

C. Implement column-level security for sensitive sales data.

D. Implement row-level security using department identifiers.

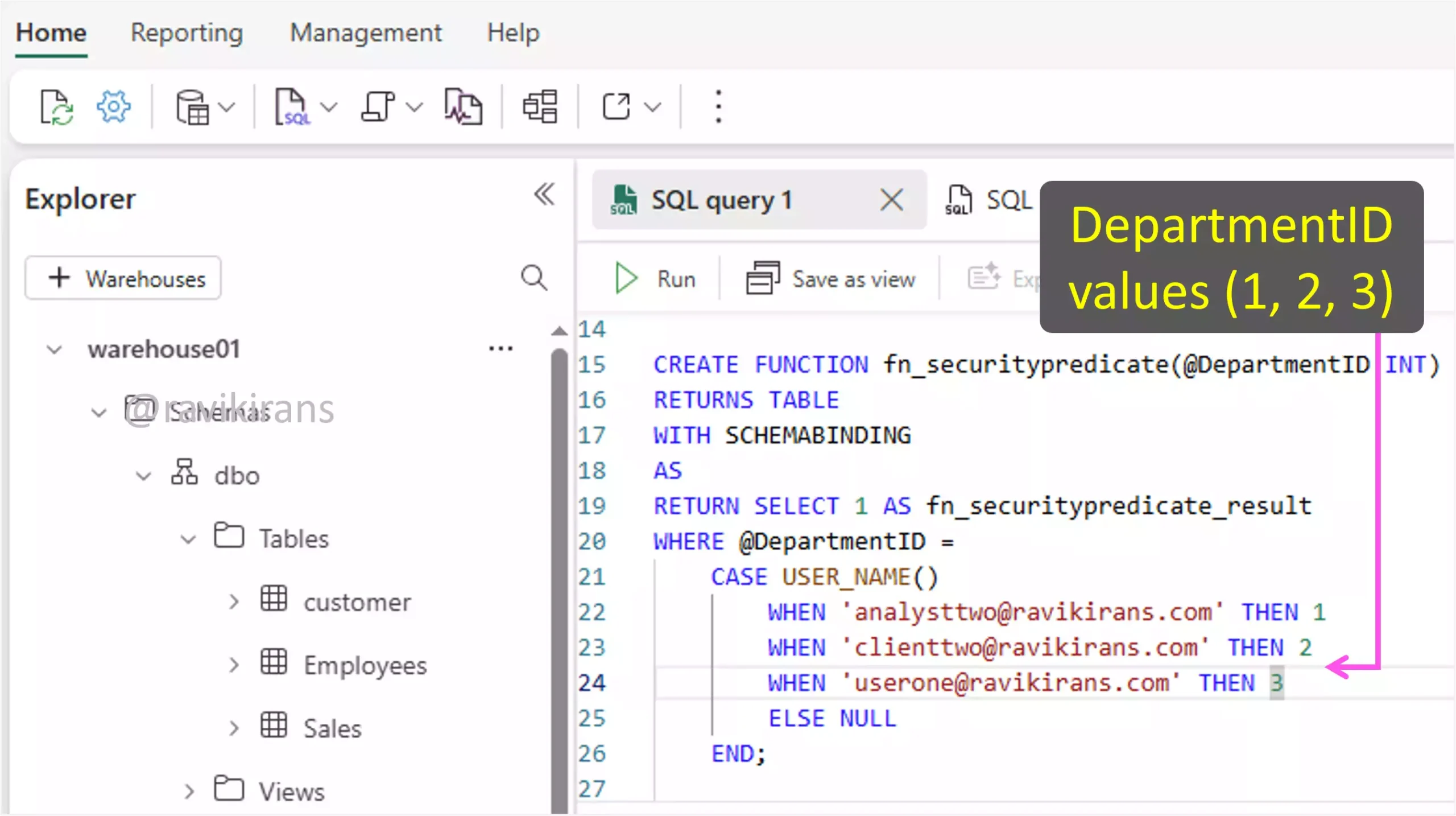

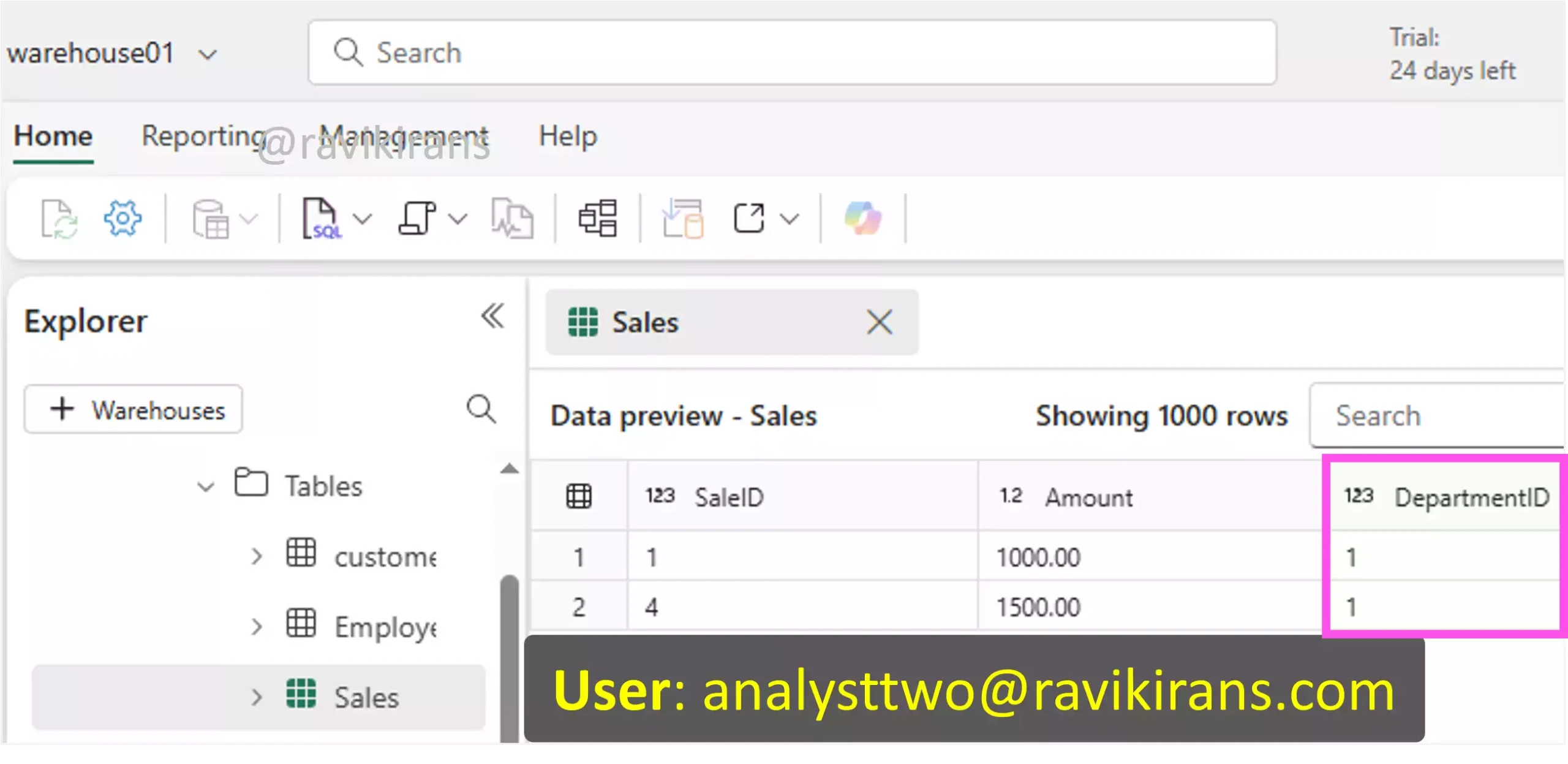

To ensure each department user can view only their own sales data, first create a filter function (the filter predicate) that decides, based on the user’s identity, which rows that user is allowed to see.

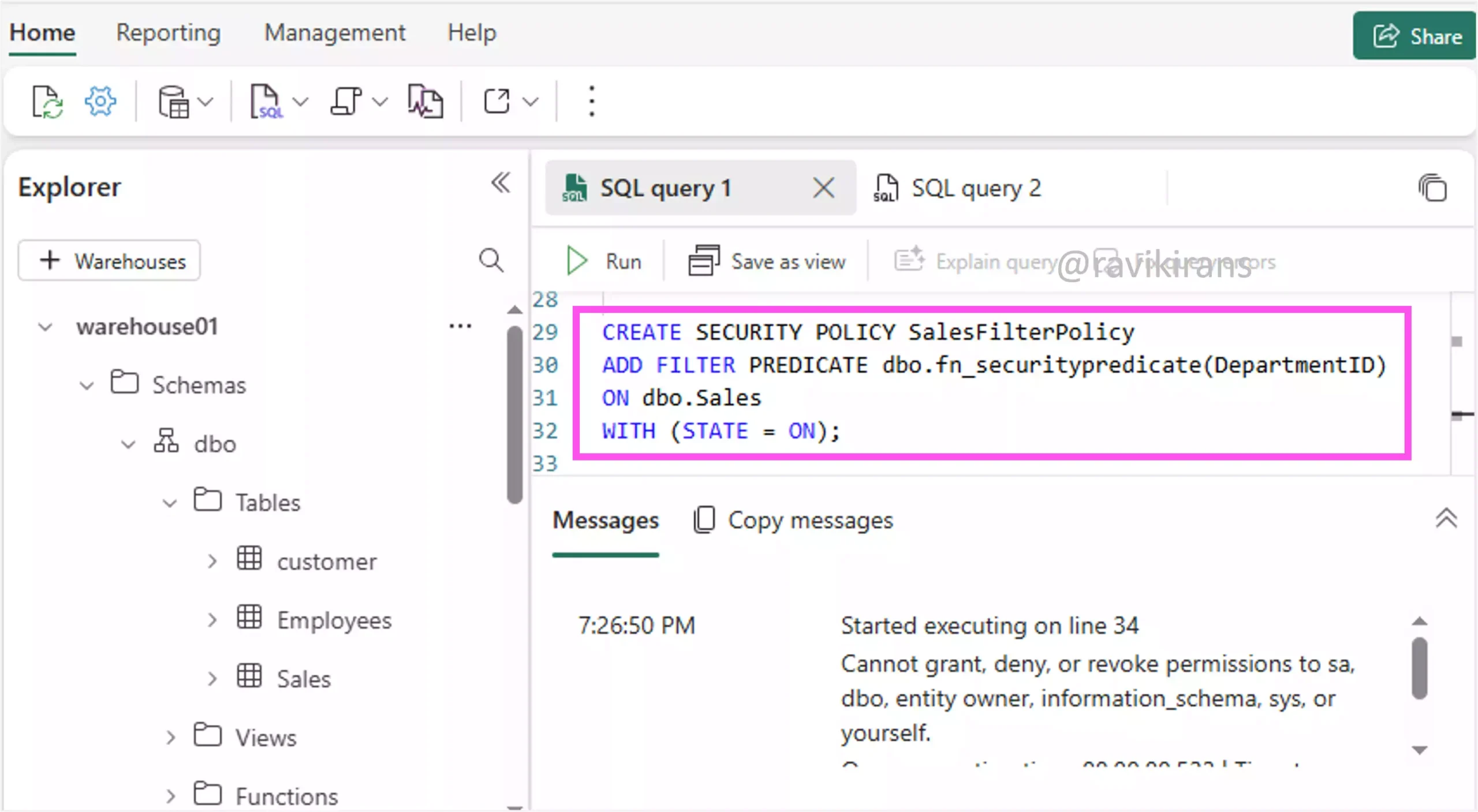

Next, create a security policy that applies this function to a table. This tells Microsoft Fabric to automatically filter rows from the Sales table based on the logic in your function.

This ensures that every time a user queries the Sales table, Fabric uses the function to filter out rows that don’t belong to that user’s department. For example, when the user analyst two logs in, they see only the rows that belong to DepartmentID 1 (as defined in the function above).
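The two steps (filter predicate plus security policy) can be modeled in a few lines of Python. This is a toy sketch of the idea, not Fabric's implementation; the user names and the user-to-department mapping are hypothetical. In T-SQL, the predicate would typically compare the row's department against the caller's identity, for example via USER_NAME() or a mapping table.

```python
# Toy model of row-level security: a filter predicate decides per row whether
# the current user may see it, and a "security policy" applies it to every query.
# Users and the department mapping are hypothetical.

SALES = [
    {"SaleID": 1, "DepartmentID": 1, "Amount": 250},
    {"SaleID": 2, "DepartmentID": 2, "Amount": 400},
    {"SaleID": 3, "DepartmentID": 1, "Amount": 125},
]

USER_DEPARTMENT = {"analyst1@contoso.com": 2, "analyst2@contoso.com": 1}


def department_filter(department_id, user):
    """Filter predicate: True if the row belongs to the user's department."""
    return USER_DEPARTMENT.get(user) == department_id


# Security policy: table name -> (predicate, column passed to the predicate).
SECURITY_POLICY = {"Sales": (department_filter, "DepartmentID")}


def select_all(table_name, rows, user):
    """Every query goes through the policy, so filtering is automatic."""
    predicate, column = SECURITY_POLICY[table_name]
    return [row for row in rows if predicate(row[column], user)]
```

An unmapped user gets no rows at all, because the predicate never returns True for them; rows that fail the predicate are silently filtered rather than raising an error.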

So, options A and D are the correct answers.

Options B and C are incorrect. The requirement is to filter rows by department, not to restrict columns, so column-level security isn’t needed.

15] Your organization uses Microsoft Fabric to manage a data warehouse containing sensitive customer information, including email addresses and credit card numbers.

You need to prevent nonprivileged users from viewing full email addresses and credit card numbers.

Each correct answer presents part of the solution. Which two actions should you perform?

A. Apply column-level security to restrict access to sensitive columns.

B. Implement row-level security to restrict access to sensitive data.

C. Use dynamic data masking on CreditCardNumber with partial() function.

D. Use dynamic data masking on Email with email() function.

Option A is incorrect. Column-level security blocks access to an entire column, whereas here users still need to query the column; they just shouldn’t see the full values.

Option B is incorrect. Row-level security restricts access to rows, not to columns such as email addresses or credit card numbers.

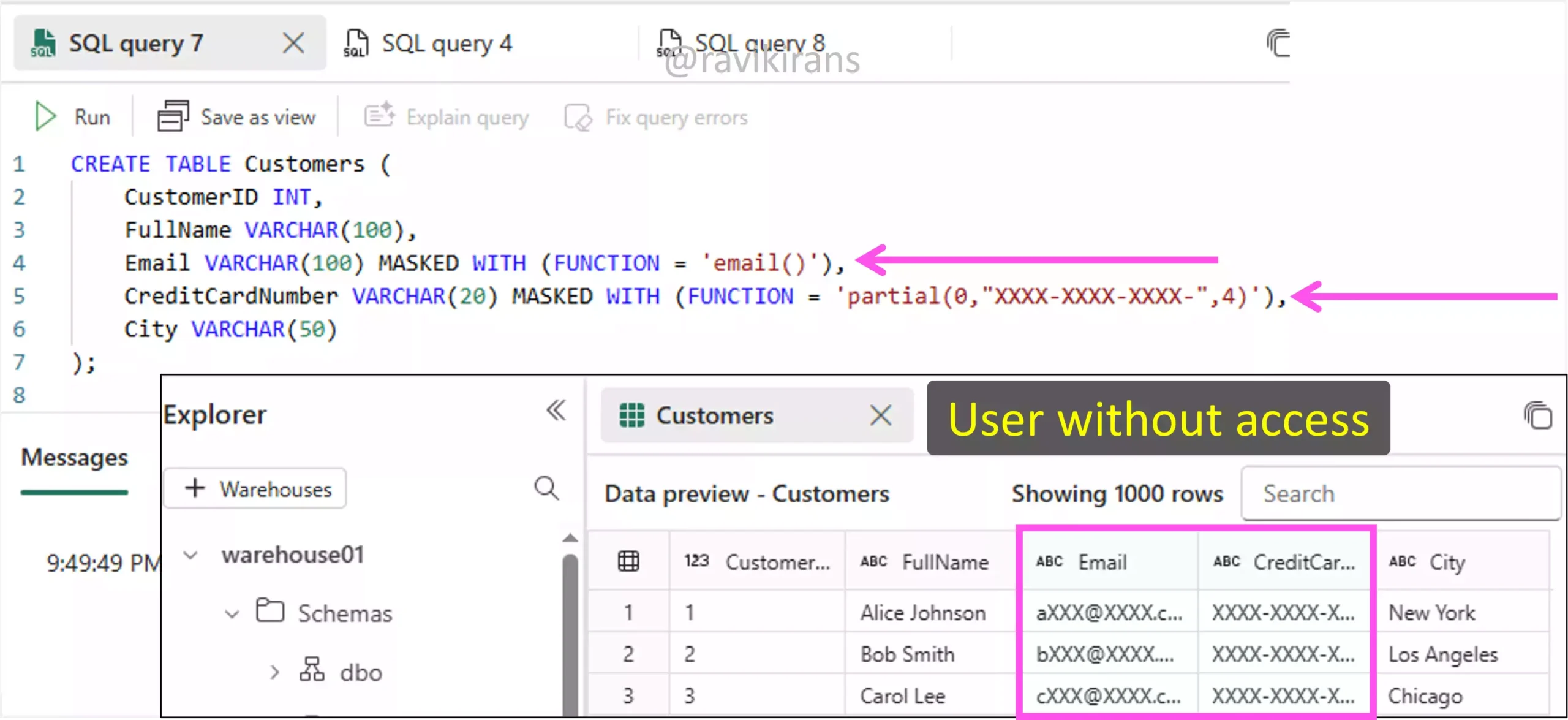

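Options C and D are the correct answer choices. The screenshot above shows an example of dynamic data masking: when you define masking rules with the MASKED WITH clause while creating a table, users without the UNMASK permission see masked values in those columns.

The email() function exposes only the first letter of an address (in the form aXXX@XXXX.com), while partial() exposes a chosen number of leading and trailing characters with custom padding in between, which suits credit card numbers.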
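Here is a small Python emulation of what the two masking functions return, to show why each fits its column. It imitates the documented output format only; it is not the engine's code, and the sample values are made up. The T-SQL definitions it mirrors would look like MASKED WITH (FUNCTION = 'email()') and MASKED WITH (FUNCTION = 'partial(0,"XXXX-XXXX-XXXX-",4)').

```python
# Emulation of two dynamic data masking functions' output format.
# Sample values are hypothetical.


def email_mask(value):
    """Emulate email(): keep the first letter, then the constant XXX@XXXX.com."""
    return value[:1] + "XXX@XXXX.com"


def partial_mask(value, prefix, padding, suffix):
    """Emulate partial(prefix, "padding", suffix): keep the first `prefix` and
    last `suffix` characters and replace the middle with `padding`.
    Simplification: values too short to expose both ends are fully padded."""
    if len(value) <= prefix + suffix:
        return padding
    return value[:prefix] + padding + value[len(value) - suffix:]
```

For a card number, partial_mask("4111111111111111", 0, "XXXX-XXXX-XXXX-", 4) keeps only the last four digits, while email_mask("john.doe@contoso.com") reveals just the first letter.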

16] Your company uses a lakehouse architecture with Microsoft Fabric for analytics. Data is ingested and transformed using Microsoft Data Factory pipelines and stored in Delta tables.

You need to enhance data transformation efficiency and reduce loading time.

What should you do?

A. Configure Spark pool with more worker nodes.

B. Enable Delta Lake optimization.

C. Switch to a different Spark runtime version.

D. Use session tags to reuse Spark sessions.

Because the question gives so little context, opinions on the correct answer are divided.

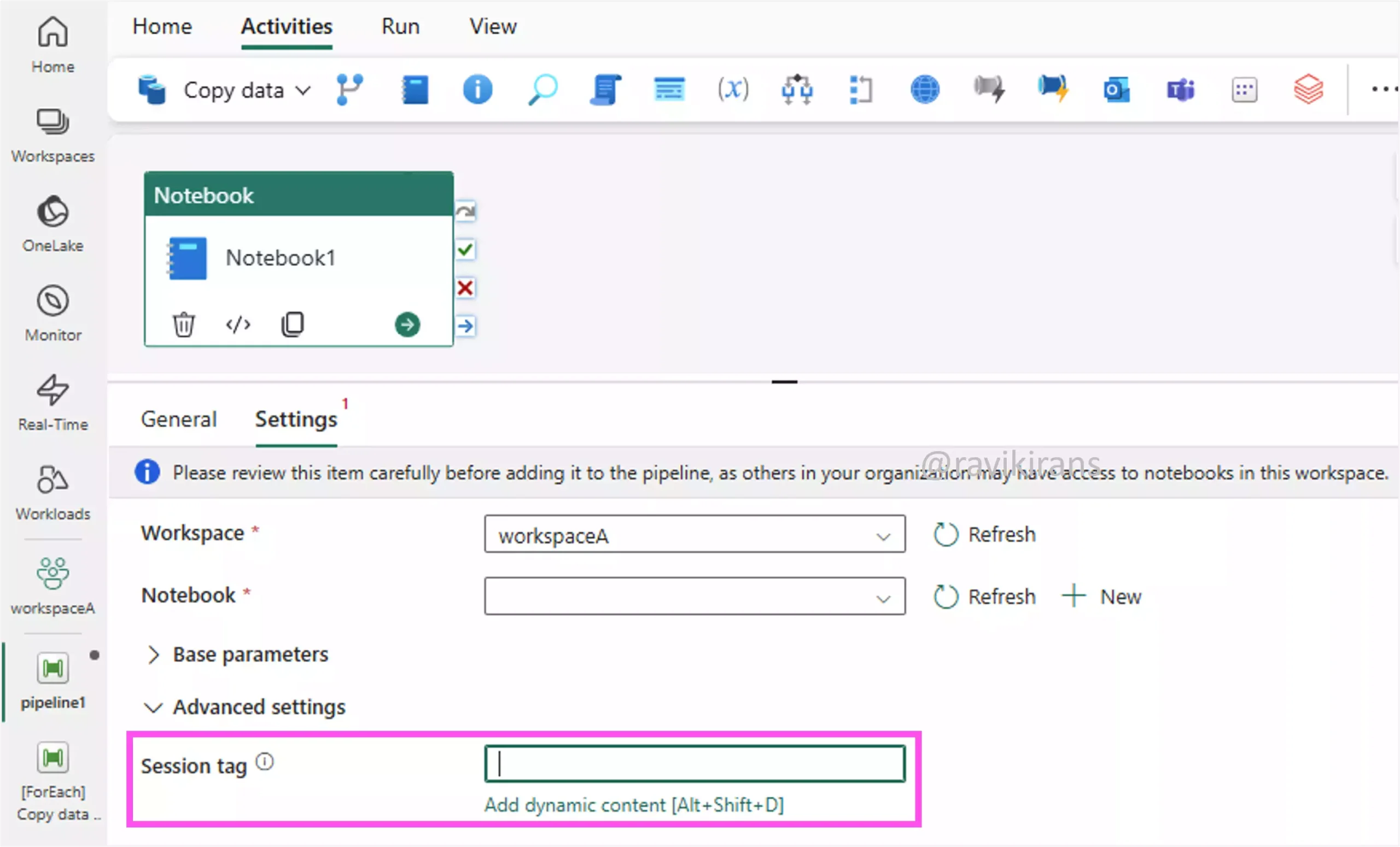

Option D could be correct: session tags on notebook activities in a Fabric pipeline let the notebooks share a Spark session, which avoids a cold start for each one. However, the question doesn’t say whether the transformations are done in notebooks.

Further, session tags help mainly when a pipeline runs many small notebooks, because they reduce the cumulative startup time. For long-running, heavy Spark transformations, session tags have minimal impact, since the startup time is negligible compared with the processing time.
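A quick back-of-the-envelope calculation shows why. The Python sketch below assumes notebooks run one after another, a fixed session startup time, and that a shared session means only the first notebook pays that startup cost. All numbers are hypothetical and chosen only to illustrate the proportions, not measured in Fabric.

```python
# Rough cost model for sequential notebooks, with or without a shared Spark session.
# Startup and runtime figures used below are hypothetical, in minutes.


def total_minutes(notebook_runtimes, startup, shared_session):
    """Total pipeline time: each notebook's runtime plus session startups.
    With a shared session, only the first notebook pays the startup cost."""
    if shared_session:
        startups = min(1, len(notebook_runtimes))
    else:
        startups = len(notebook_runtimes)
    return startups * startup + sum(notebook_runtimes)


# Ten small 1-minute notebooks, assuming a 3-minute startup:
small_separate = total_minutes([1] * 10, startup=3, shared_session=False)  # 40
small_shared = total_minutes([1] * 10, startup=3, shared_session=True)     # 13

# Two heavy 60-minute notebooks, same startup:
heavy_separate = total_minutes([60, 60], startup=3, shared_session=False)  # 126
heavy_shared = total_minutes([60, 60], startup=3, shared_session=True)     # 123
```

Under these assumptions, sharing the session saves roughly two thirds of the total time for the small notebooks but only about 2% for the heavy ones.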

If the transformations are heavy, more worker nodes (option A) increase parallelism and speed, but they also increase the cost.

So both options A and D are very close.

Delta Lake optimization includes features such as OPTIMIZE (compacts small files into larger ones to improve read performance), Z-Order (co-locates related data so queries can skip more files), and VACUUM (removes old files the table no longer references, to save storage). These mostly improve query performance and storage rather than the transformation process itself; they help after the data is loaded, not during data loads.

Reference Link: https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-table-maintenance#table-maintenance-operations

Option B is incorrect.

Option C might help if a newer version offers performance gains, but the question doesn’t say which Spark runtime version is in use or which one you would switch to.

17] Your company implements a new data analytics solution using Microsoft Fabric.

You need to design an orchestration pattern that minimizes manual intervention and supports dynamic data loading into a lakehouse.

Which approach should you recommend?

A. Create individual pipelines for each data source.

B. Implement a Data Factory pipeline with parameters and dynamic expressions.

C. Use a single notebook for all ETL activities without parameters.

D. Use a static configuration for data loading.

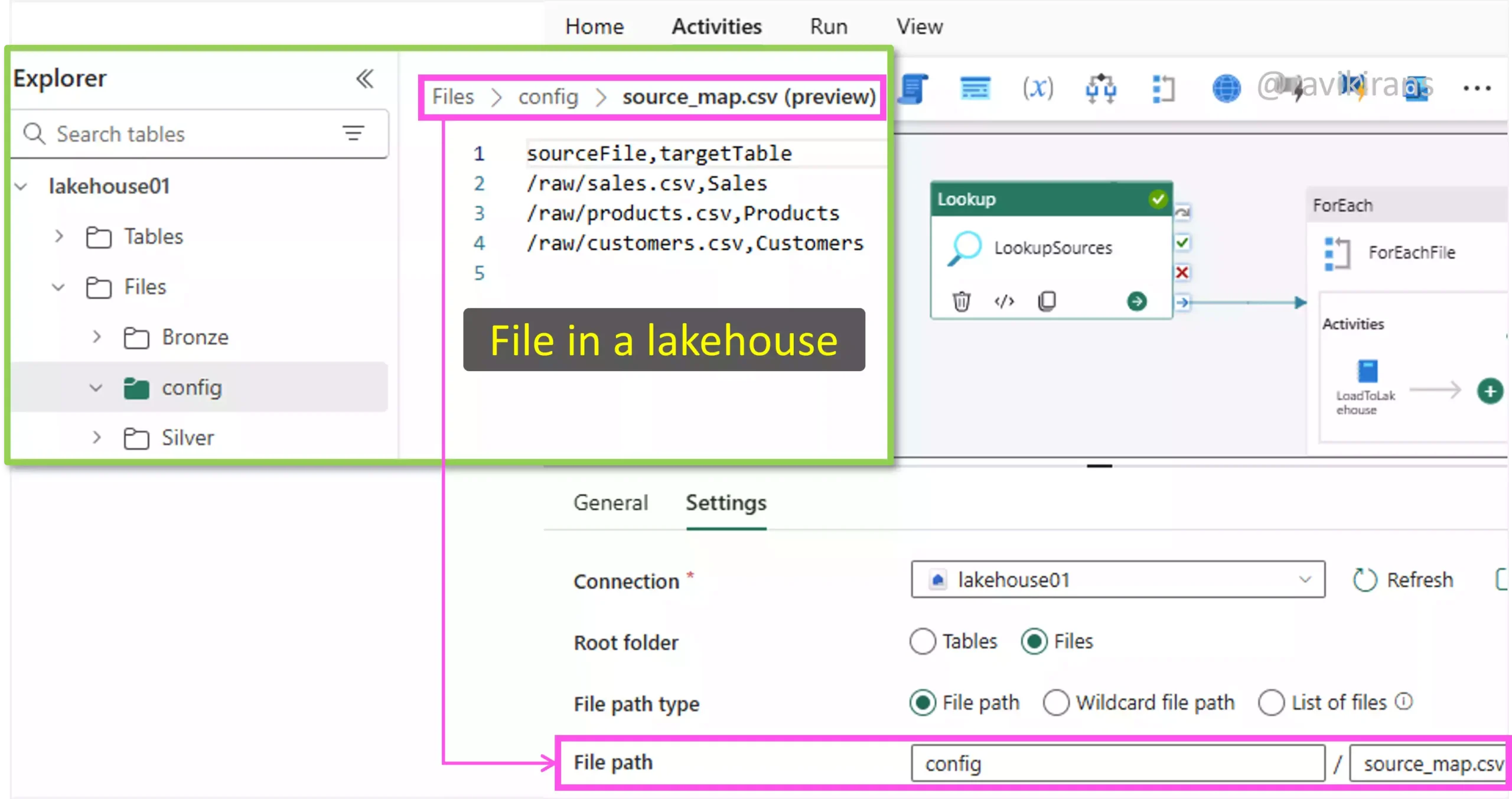

Dynamic data loading uses parameters to handle multiple sources without hard-coding, enabling a single pipeline to process various inputs.

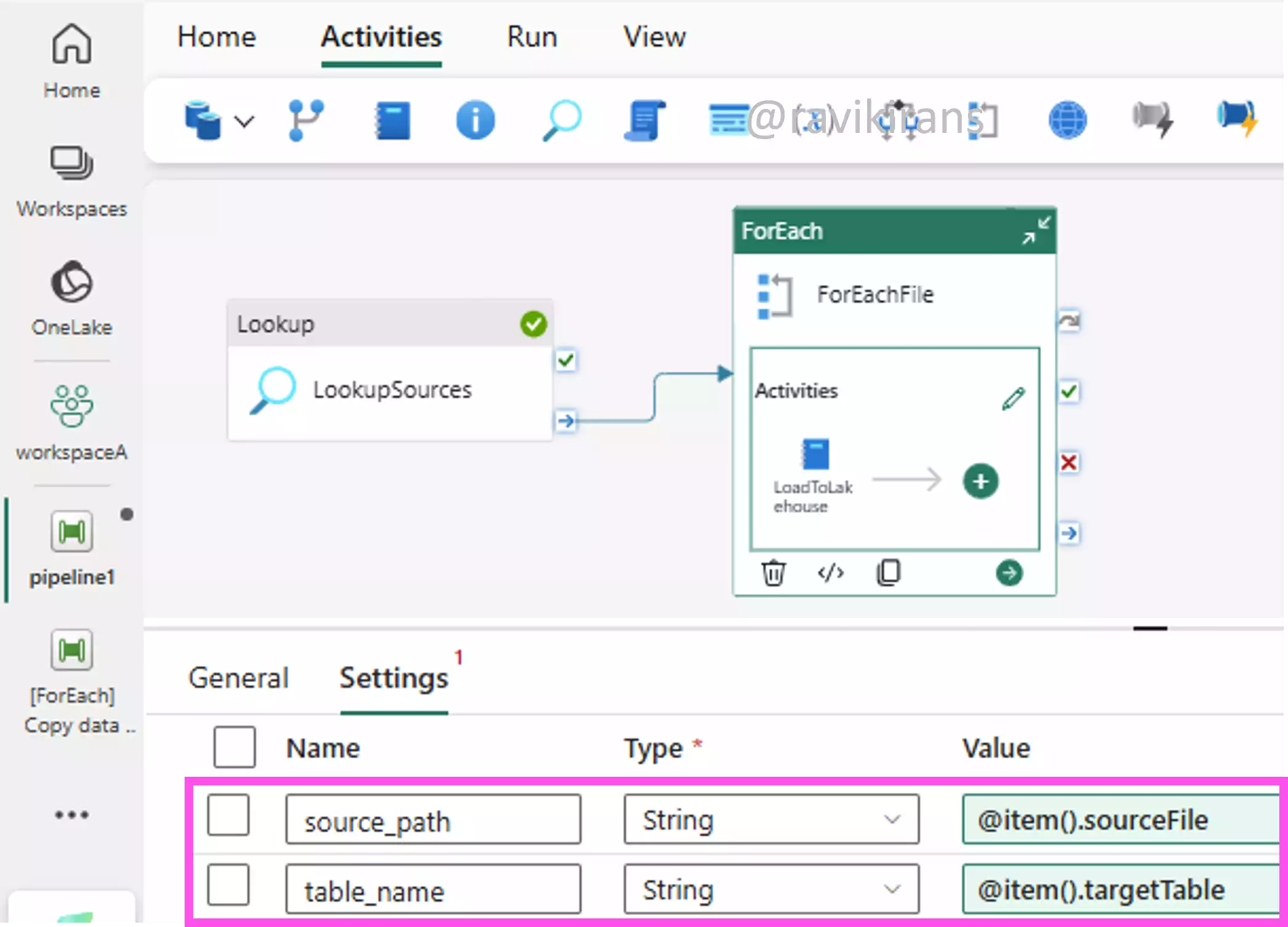

We can do this by creating a control file in a lakehouse that lists the source file and target table names. In the Fabric pipeline, a Lookup activity reads this file to retrieve all the source and target names.

Next, a ForEach activity iterates over these rows. Inside the loop, parameters (source_path and table_name) are populated with dynamic expressions such as @item().sourceFile, so each iteration picks up its own source and target.
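The control-file pattern can be sketched in plain Python to show the data flow. This is a simulation, not pipeline code: lookup() stands in for the Lookup activity, the for loop for the ForEach activity, and copy_data() for the parameterized copy step. The file paths, table names, and the targetTable column name are hypothetical; only sourceFile comes from the expression above.

```python
import csv
import io

# Hypothetical control file (in Fabric this would live in the lakehouse).
CONTROL_FILE = """sourceFile,targetTable
Files/raw/customers.csv,customers
Files/raw/orders.csv,orders
"""


def lookup(control_text):
    """Stand-in for the Lookup activity: one dict per control-file row."""
    return list(csv.DictReader(io.StringIO(control_text)))


def copy_data(source_path, table_name):
    """Stand-in for the parameterized copy step inside the ForEach loop."""
    return f"copied {source_path} -> {table_name}"


def run_pipeline(control_text):
    results = []
    for item in lookup(control_text):        # ForEach over the Lookup output
        results.append(copy_data(
            source_path=item["sourceFile"],  # like @item().sourceFile
            table_name=item["targetTable"],  # like @item().targetTable
        ))
    return results
```

Adding a new source then means adding a row to the control file; the pipeline itself stays unchanged.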

This orchestration pattern minimizes manual intervention and supports loading data from multiple sources. Option B is the correct answer.

Option A is incorrect. If you do not use parameters, you have to create a pipeline for each source. This is manual work and not dynamic.

Option C is incorrect. If you do not use parameters, it is not dynamic loading. You’ll have to edit the notebook every time the data source changes.

Option D is incorrect. A static configuration is rigid: it has to be edited manually whenever sources or targets change.

18] Your company is implementing a data warehouse solution using Microsoft Fabric. The data engineering team needs to load data from multiple CSV files stored in Azure Blob Storage into the warehouse.

You need to efficiently import data from these CSV files into the warehouse using T-SQL.

Each correct answer presents part of the solution. Which three actions should you take?

A. Specify FIELDTERMINATOR in the COPY statement.

B. Use a dataflow to load CSV files.

C. Use a SAS token for Azure Blob Storage access.

D. Use BULK INSERT statement to load CSV data.

E. Use COPY statement with wildcard for file path.

F. Use INSERT…SELECT for loading CSV data.

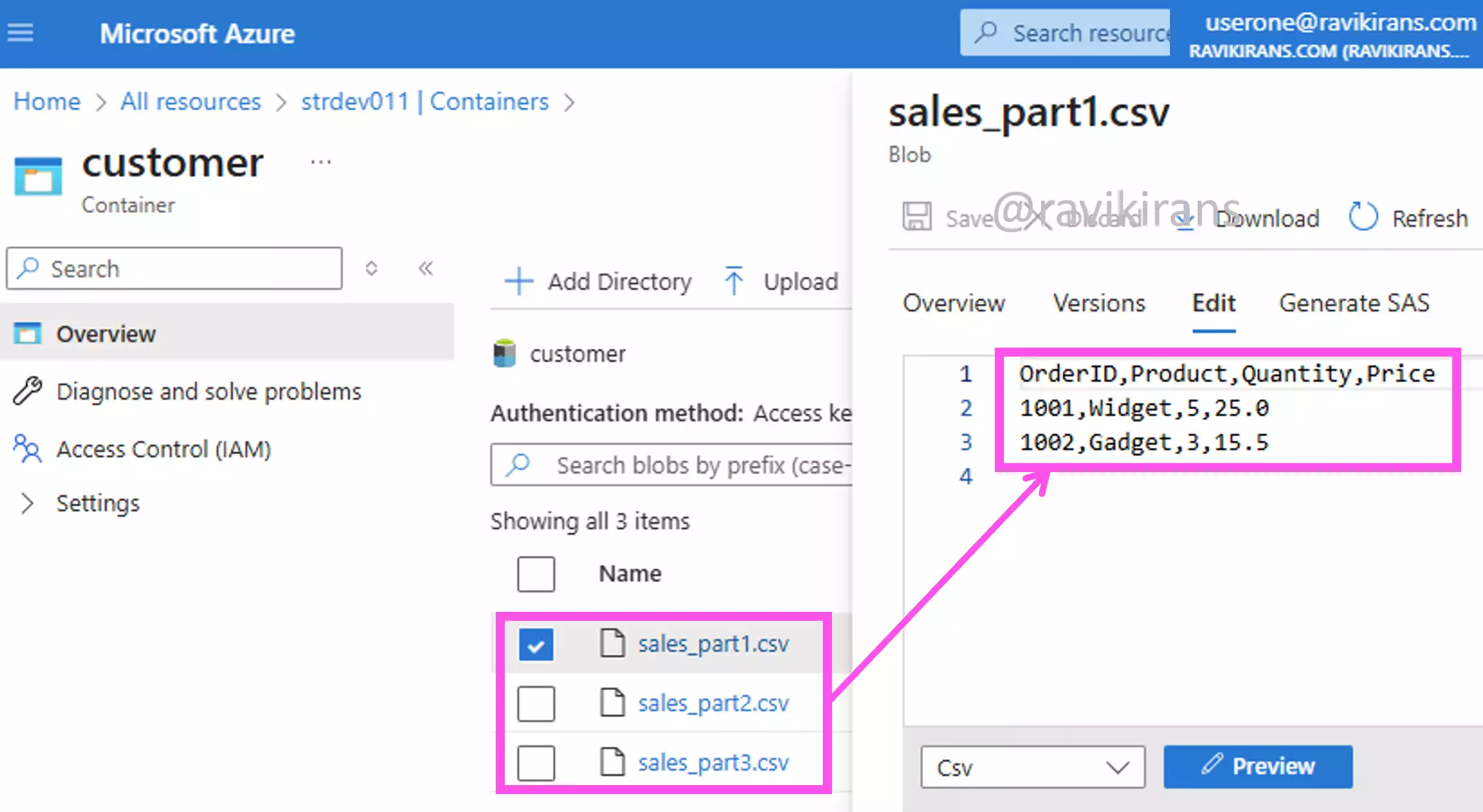

To replicate this scenario, I uploaded three CSV files with the same schema to an Azure Storage account.

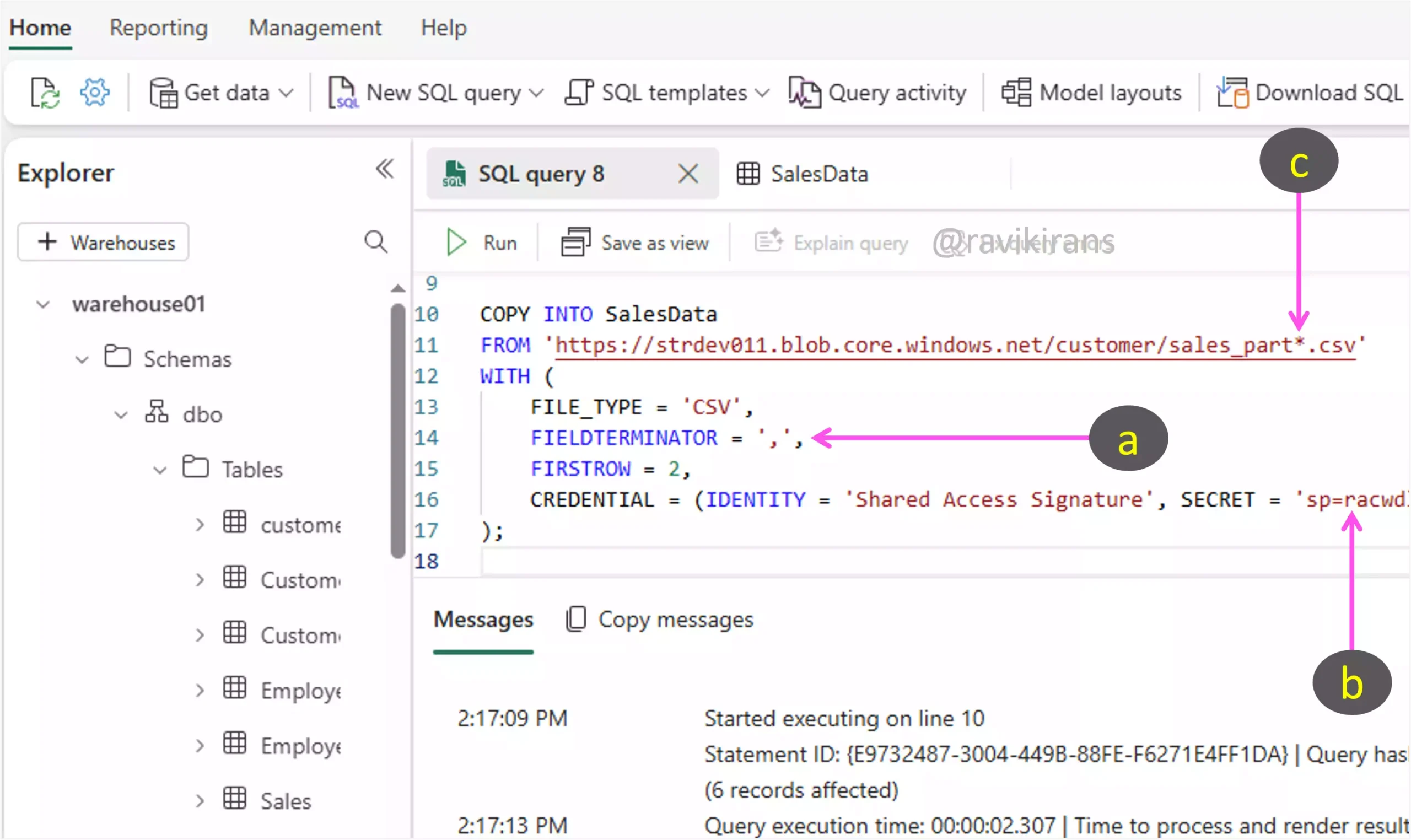

To import data from these CSV files into the warehouse using T-SQL, I will use the COPY INTO T-SQL command. In this command, I will specify the following:

a. FIELDTERMINATOR, which ensures columns are parsed correctly based on the delimiter (e.g., a comma for CSV).

b. A SAS token in the CREDENTIAL clause, which grants access to the CSV files in Blob Storage.

c. A wildcard in the file path, which loads multiple files at once.
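To show how the three pieces fit into one statement, here is a small Python helper that assembles a COPY INTO statement for a Fabric warehouse. Generating the T-SQL from Python is just a convenient way to show its shape; the table name, storage URL, and SAS token are placeholders, and FIRSTROW = 2 assumes the files have a header row.

```python
def build_copy_into(table, url_pattern, sas_token, field_terminator=",", first_row=2):
    """Assemble a COPY INTO statement that loads CSV files from Blob Storage.
    All argument values passed in are assumed placeholders."""
    secret = sas_token.replace("'", "''")  # escape quotes for a T-SQL string literal
    return (
        f"COPY INTO {table}\n"
        f"FROM '{url_pattern}'\n"  # wildcard, e.g. .../sales/*.csv
        "WITH (\n"
        "    FILE_TYPE = 'CSV',\n"
        f"    CREDENTIAL = (IDENTITY = 'Shared Access Signature', SECRET = '{secret}'),\n"
        f"    FIELDTERMINATOR = '{field_terminator}',\n"
        f"    FIRSTROW = {first_row}\n"  # skip the header row
        ");"
    )


statement = build_copy_into(
    "dbo.Sales",
    "https://myaccount.blob.core.windows.net/sales/*.csv",
    "<sas-token>",
)
```

The generated statement can be run in the warehouse's SQL query editor; with the wildcard, all matching files are loaded in a single COPY INTO.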

So, options A, C, and E are the correct answer choices.

Option B is incorrect. We need to use T-SQL, not dataflows.

Option D is incorrect. BULK INSERT serves the same purpose as the COPY statement, but options A and E both refer to COPY, so the solution uses COPY rather than BULK INSERT.

Option F is incorrect. The same reasoning applies to INSERT…SELECT. Moreover, INSERT…SELECT inserts the results of a query, so on its own it doesn’t import CSV files from Blob Storage.

19] Your organization uses Microsoft Fabric for managing data pipelines in a large-scale ETL process. The current setup involves multiple pipelines triggered by specific events and schedules, facing challenges with hard-coded values.

You need to implement dynamic parameterization to enhance flexibility and reduce maintenance.

Each correct answer presents part of the solution. Which two actions should you perform?

A. Hard-code values in the configuration.

B. Pass external values using pipeline parameters.

C. Use dynamic expressions for runtime evaluation.

D. Use static triggers for pipeline execution.

E. Use static values in expressions.

Option A is incorrect. You want to avoid hard-coded values.

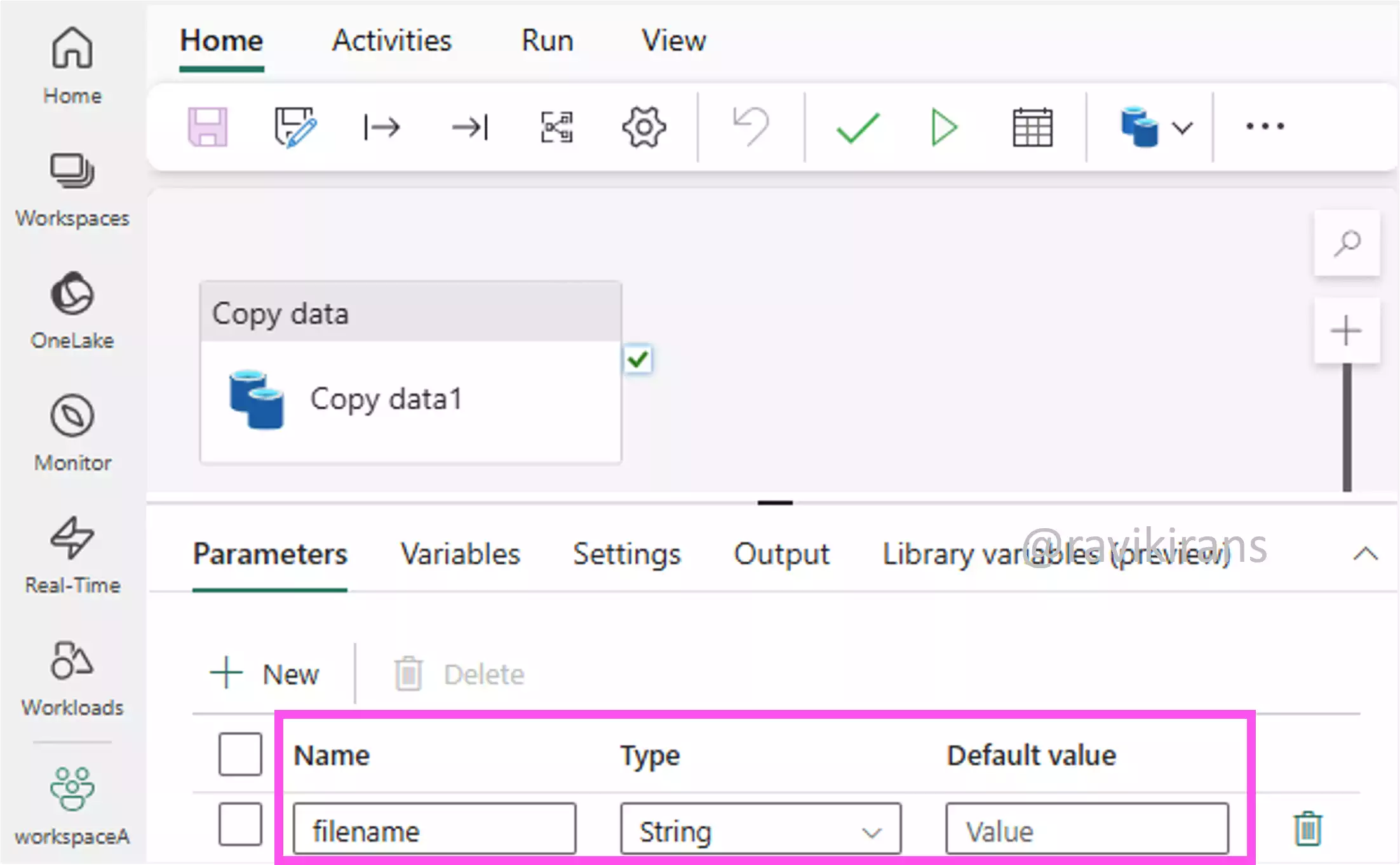

This question is similar to question 17, where we implement dynamic loading using pipeline parameters and dynamic expressions. For example, define a pipeline parameter named filename.

Then, reference the parameter in the file path of the Copy data activity with the dynamic expression @pipeline().parameters.filename.

When another pipeline or an event triggers the pipeline, pass in the parameter value, for example, filename = 'revenue_data_2025_06'.
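The run-time behavior can be imitated with a toy Python evaluator. It understands only one expression form, a bare @pipeline().parameters.name reference (the pipeline expression syntax for reading a parameter); it is a sketch of the concept, not the Fabric expression engine. The default value "default_file" is hypothetical.

```python
import re

# Hypothetical pipeline definition: a parameter with a default value, and a
# source path that is a dynamic expression instead of a hard-coded value.
PIPELINE_PARAMETERS = {"filename": "default_file"}
SOURCE_PATH_EXPRESSION = "@pipeline().parameters.filename"

_PARAM_REF = re.compile(r"^@pipeline\(\)\.parameters\.(\w+)$")


def evaluate(expression, parameters):
    """Evaluate a bare parameter reference at run time (toy subset only)."""
    match = _PARAM_REF.match(expression)
    if match is None:
        return expression  # not an expression: treated as a literal value
    return parameters[match.group(1)]


def trigger(**external_values):
    """Start a run: values passed by the caller override the defaults."""
    run_parameters = {**PIPELINE_PARAMETERS, **external_values}
    return evaluate(SOURCE_PATH_EXPRESSION, run_parameters)
```

The same pipeline definition resolves to different files depending on what the trigger passes in, e.g. trigger(filename="revenue_data_2025_06"), with no edits to the pipeline itself.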

Options B and C are the correct answer choices.

Options D and E are incorrect as they don’t help with dynamic parameterization.

20] Your organization uses Microsoft Fabric for managing data pipelines in a large-scale ETL process. The setup involves multiple pipelines that must handle different datasets dynamically, reducing hardcoding and improving maintainability.

You need to reuse pipeline components with varying inputs during execution.

Each correct answer presents part of the solution. Which two actions should you perform?

A. Hardcode dataset paths.

B. Pass external values as parameters.

C. Use fixed dataset configurations.

D. Use string interpolation.

String interpolation means embedding variables or expressions within a string so that the final value reflects their run-time values. In Fabric pipelines, interpolation is written with the @{...} syntax inside a string, which makes it a form of dynamic expression.

With this in mind, this question is very similar to the previous one. To handle different datasets dynamically, pass external values as parameters and use string interpolation (a dynamic expression).
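As a toy illustration, the Python function below resolves @{pipeline().parameters.name} placeholders embedded in a path template. It supports only that one placeholder form and is a sketch of the idea, not the Fabric expression engine; the template and parameter names are hypothetical.

```python
import re

# Matches @{pipeline().parameters.<name>} anywhere inside a string.
_INTERPOLATION = re.compile(r"@\{pipeline\(\)\.parameters\.(\w+)\}")


def interpolate(template, parameters):
    """Replace each @{pipeline().parameters.<name>} with its run-time value."""
    return _INTERPOLATION.sub(lambda m: str(parameters[m.group(1)]), template)


# One reusable path template serves many datasets:
path = interpolate(
    "raw/@{pipeline().parameters.dataset}/@{pipeline().parameters.year}.csv",
    {"dataset": "sales", "year": 2025},
)
```

Here path becomes raw/sales/2025.csv; passing different parameter values reuses the same component for another dataset.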

Options B and D are the correct answer choices (refer to the previous question for the screenshots).

Options A and C are incorrect. Hard-coded dataset paths and fixed configurations defeat the goal of dynamic, reusable pipelines.

Check out my DP-700 practice tests.
