AZ-204 Preparation Details

Preparing for the AZ-204 Developing Solutions for Microsoft Azure exam? Don’t know where to start? This post is the AZ-204 Certificate Study Guide (with links to each exam objective).

I have curated a list of articles from Microsoft documentation for each objective of the AZ-204 exam. Please share the post within your circles so it helps them to prepare for the exam.

AZ-204 Developing Solutions for Azure Course

| Skylines Academy | NEW!: Microsoft Azure Developer Certification |
| Pluralsight | Developing Solutions for Microsoft Azure |
| Udemy | Developer Exam Prep for Azure Certification |

AZ-204 Azure Developer Practice Tests & Labs

| Whizlabs Exam Questions | 165 Practice questions & [Online Course] |
| Udemy Practice Tests | Developing Solutions for Azure Practice Test |

Azure Developer Learning Materials (AZ-204)

| Udacity (Nanodegree) | Become a Developer for Microsoft Azure |
| Amazon e-book (PDF) | Azure Developer Exam Reference Book |
| Coursera (specialization) | Azure Developer Associate Test prep |

Looking for AZ-204 Dumps? Read This!

Using AZ-204 exam dumps can get you permanently banned from taking any future Microsoft certification exam. Read the FAQ page for more information. Instead, I strongly suggest you validate your understanding with practice questions.

Check out all the other Azure certificate study guides

Full Disclosure: Some of the links in this post are affiliate links. I receive a commission when you purchase through them.

Develop Azure Compute Solutions (25-30%)

Implement IaaS solutions

Provision virtual machines (VMs)

Create a Windows VM in Azure with PowerShell

Configure, validate, and deploy ARM templates

Create & deploy ARM templates by using the Azure portal

Configure container images for solutions

Create a container image for deployment to Azure Container Instances

Test your knowledge on Azure Compute Solutions

Q] You have to deploy a microservice-based application to the Azure Kubernetes cluster. The solution has the following requirements:

- Reverse proxy capabilities

- The ability to configure traffic routing, and,

- Termination of TLS with a custom certificate

Which of the following would you use to implement a single public IP endpoint to route traffic to multiple microservices?

- Helm

- Brigade

- Kubectl

- Ingress Controller

- Virtual Kubelet

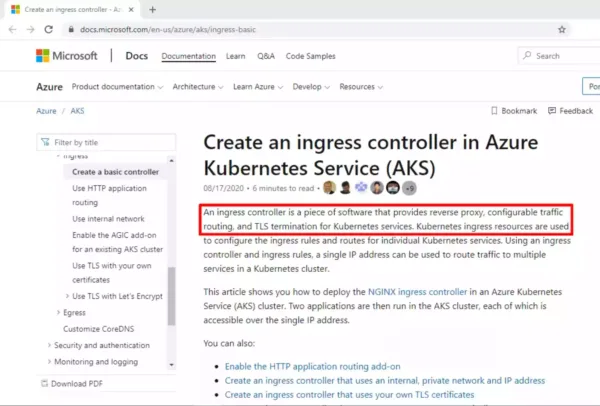

Explanation: First, you need to know that Ingress is an object that manages external access to services in a cluster.

One of the main reasons to use an ingress controller is that by using a single IP address, you can route traffic to multiple services in a Kubernetes cluster.

The documentation mentions that an ingress controller is a piece of software that provides reverse proxy, configurable traffic routing, and TLS termination for Kubernetes services, all of which are a requirement in the question.

So, option 4 (Ingress Controller) is the correct choice.
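To make the three requirements concrete, here is a minimal Python sketch that builds a Kubernetes Ingress manifest (networking.k8s.io/v1) as a dictionary and prints it as JSON, which kubectl accepts as well as YAML. The host name, secret name, service names, and the nginx ingress class are hypothetical values chosen for illustration; they do not come from the question.

```python
import json


def build_ingress(host, tls_secret, routes):
    """Return an Ingress manifest (networking.k8s.io/v1) as a dict.

    routes maps a URL path prefix to a (service_name, port) tuple.
    """
    paths = [
        {
            "path": prefix,
            "pathType": "Prefix",
            "backend": {"service": {"name": name, "port": {"number": port}}},
        }
        for prefix, (name, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "microservices-ingress"},  # hypothetical name
        "spec": {
            "ingressClassName": "nginx",  # assumes an NGINX ingress controller
            # TLS termination with a custom certificate stored in a secret
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            # One public endpoint, routed to several services by path
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


manifest = build_ingress(
    host="shop.example.com",
    tls_secret="shop-tls",
    routes={"/orders": ("orders-svc", 80), "/catalog": ("catalog-svc", 80)},
)
print(json.dumps(manifest, indent=2))
```

The single host entry is the one public endpoint, the paths list is the configurable routing, and the tls entry is where the custom certificate is attached.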

Reference Link: Create an ingress controller

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Publish an image to the Azure Container Registry

Push image to Azure Container Registry

Run containers by using Azure Container Instance

Deploy a container application to Azure Container Instances

Create Azure App Service Web Apps

Create an Azure App Service Web App

Create an ASP.NET Core web app in Azure

Test your knowledge on Azure App Service

Q] A company has a web application deployed using the Azure Web App service. The current service plan being used is D1.

It needs to ensure that the application infrastructure can automatically scale when the CPU load reaches 85%. You also have to ensure costs are minimized.

Which of the following steps would you implement to achieve the requirements? Choose 4 answers from the options given below:

- Enable autoscaling on the Web application.

- Configure a scale condition.

- Configure the web application to use the Standard App Service Plan.

- Configure the web application to use the Premium App Service Plan.

- Add a scale rule.

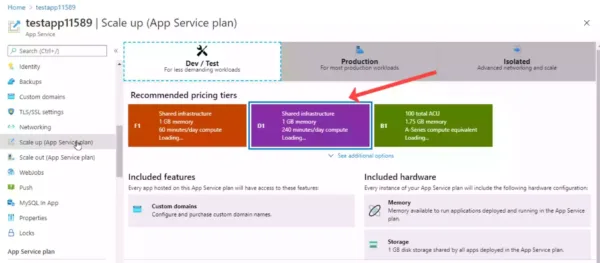

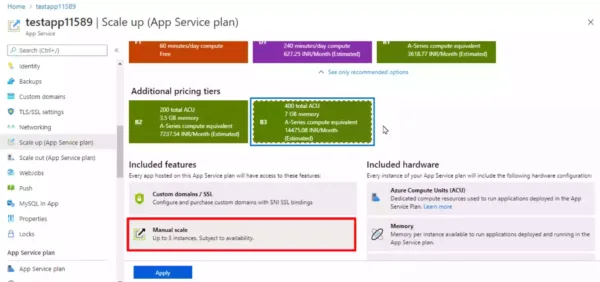

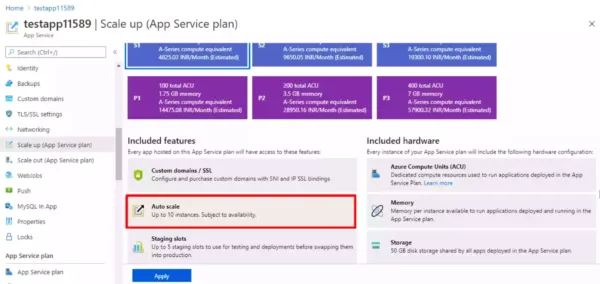

Explanation: Well, the app service plan D1 is only for the shared infrastructure and so contains only a limited number of features. For the D1 plan, autoscaling is not supported. So, the application cannot scale automatically when the CPU load reaches 85%.

To enable autoscaling, you need to upgrade the app service plan tier. Plans like B1, B2, and B3 enable you to scale manually but not automatically.

Autoscaling is only available for the standard and the premium plans.

So, these are the following steps to implement:

- First, we need to configure the web application to use the standard app service plan. Note that the premium app service plan works too, but since we need to ensure that costs are minimized too, option 4 is incorrect.

- And, since we need to select a total of 4 options, all the other 3 options must be selected to achieve the requirement.
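The relationship between a scale condition and a scale rule can be sketched in a few lines of Python. This is only a toy model of the idea, not how Azure evaluates autoscale internally: the class names, the CpuPercentage metric name, and the instance limits are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ScaleRule:
    metric: str       # metric the rule watches
    threshold: float  # rule fires when the metric reaches this value
    change: int       # number of instances to add when the rule fires


@dataclass
class ScaleCondition:
    minimum: int  # never scale below this many instances
    maximum: int  # never scale above this many instances
    rules: list   # scale rules evaluated under this condition


def next_instance_count(current, metrics, condition):
    """Apply every rule that fires, then clamp to the condition's limits."""
    target = current
    for rule in condition.rules:
        if metrics.get(rule.metric, 0.0) >= rule.threshold:
            target += rule.change
    return max(condition.minimum, min(condition.maximum, target))


# Scale out by one instance when CPU reaches 85%, with at most 3 instances.
cpu_rule = ScaleRule(metric="CpuPercentage", threshold=85.0, change=1)
condition = ScaleCondition(minimum=1, maximum=3, rules=[cpu_rule])

print(next_instance_count(1, {"CpuPercentage": 90.0}, condition))  # 2
print(next_instance_count(3, {"CpuPercentage": 95.0}, condition))  # 3 (capped)
print(next_instance_count(2, {"CpuPercentage": 40.0}, condition))  # 2
```

The condition holds the instance limits and the rule holds the trigger, which is why the answer needs both a scale condition and a scale rule in addition to enabling autoscale.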

Reference Link: App Service plans overview

Scale-up an app in App Service

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Enable diagnostics logging

Enable diagnostics logging for apps in Azure App Service

Deploy code to a web app

Deploy your app to Azure App Service with a ZIP or WAR file

Configure web app settings including SSL, API, and connection strings

Test your knowledge on Azure App Service

Q] You deploy a web application to Azure with App Service on Linux. You then publish a custom docker image to the Azure Web App.

Suppose you need to access the console logs generated from the container in real-time. Which of the following would go into Slot1 in this Azure CLI script?

az webapp log Slot1 --name testapp --resource-group test-rg Slot2 filesystem

az Slot3 log Slot4 --name testapp --resource-group test-rg

- config

- download

- show

- tail

Explanation: Container logs can be streamed only after container logging has been turned on, and the command that configures logging is az webapp log config. So, option 1 is the correct choice.

The other options can be ruled out from their names alone:

- download downloads a web app’s log history as a zip file,

- show gets the details of a web app’s logging configuration, and,

- tail starts live log tracing for a web app.
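For reference, the two commands from the question can be assembled as follows. This Python sketch only builds and prints the command lines, it does not run them. Reading Slot2 as --docker-container-logging, Slot3 as webapp, and Slot4 as tail is my own interpretation; the question itself only asks for Slot1.

```python
import shlex

APP, GROUP = "testapp", "test-rg"

# Step 1: turn on container logging to the file system (Slot1 = config).
configure_logging = [
    "az", "webapp", "log", "config",
    "--name", APP, "--resource-group", GROUP,
    "--docker-container-logging", "filesystem",
]

# Step 2: stream the console logs in real time (assumed Slot4 = tail).
stream_logs = [
    "az", "webapp", "log", "tail",
    "--name", APP, "--resource-group", GROUP,
]

for command in (configure_logging, stream_logs):
    print(shlex.join(command))
```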

Reference Link: az webapp log config

This question is part of the free Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Implement autoscaling rules, including scheduled autoscaling, and autoscaling by operational or system metrics

Azure App Service Autoscaling rules

Scheduled Autoscaling & scaling by operational or system metrics

Also, review the common autoscale patterns

Implement Azure Functions

Create and deploy Azure Functions apps

Deploy a function app to Azure Functions

Implement input and output bindings for a function

Azure Functions triggers and bindings concepts

Test your knowledge on Azure Function Bindings

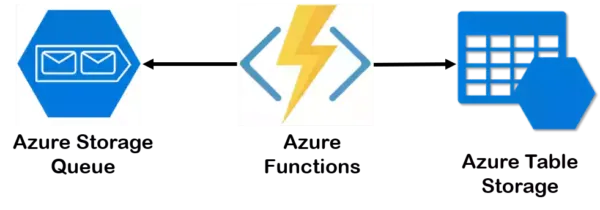

Q] You develop an Azure Function that performs the following activities:

- Read messages from an Azure Storage Queue, and,

- Process the messages and add entities to Azure Table Storage

You have to define the correct bindings in this function.json file.

{

"bindings": [

{

"type": "queueTrigger",

"direction": slot1 ,

"name": "my-orders",

"queueName": "queue-items",

"connection": "STORAGE_APP_SETTING"

},

{

"type": "table",

"direction": slot2 ,

"name": slot3 ,

"tableName": "out-table",

"connection": "TABLE_APP_SETTING"

}

]

}

Which of the following would go into Slot 1?

- “in”

- “out”

- “trigger”

- “$return”

Explanation: Since we need to read messages from the Azure Storage Queue, an Azure Queue Storage trigger is defined as the first element in the binding array.

And since we need to process and add entities to Azure table storage, the second element in the array defines the Azure Table Storage output binding.

So, the binding direction will be out (slot 2) for the 2nd item in the array since it is an output binding. And the binding direction for triggers (slot 1) is always in.

Option 1 is the correct choice.
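Here is a small Python sketch of the completed function.json together with a sanity check on the binding directions. The value "$return" for slot3 is an assumption on my part (it is the conventional name for binding a function's return value); the explanation above only settles slot1 and slot2.

```python
import json

function_json = {
    "bindings": [
        {
            "type": "queueTrigger",
            "direction": "in",   # slot1: triggers are always "in"
            "name": "my-orders",
            "queueName": "queue-items",
            "connection": "STORAGE_APP_SETTING",
        },
        {
            "type": "table",
            "direction": "out",  # slot2: output binding
            "name": "$return",   # slot3: assumed for illustration
            "tableName": "out-table",
            "connection": "TABLE_APP_SETTING",
        },
    ]
}


def check_directions(config):
    """Return a list of problems found in the binding directions."""
    problems = []
    for binding in config["bindings"]:
        if binding["direction"] not in ("in", "out", "inout"):
            problems.append(f"{binding['type']}: unknown direction")
        elif binding["type"].endswith("Trigger") and binding["direction"] != "in":
            problems.append(f"{binding['type']}: a trigger must use direction 'in'")
    return problems


print(json.dumps(function_json, indent=2))
print(check_directions(function_json))
```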

Reference Link: Azure Functions trigger and binding

Binding direction in Azure Functions

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Implement function triggers by using data operations, timers, and webhooks

Timer trigger for Azure Functions

Create an Azure function triggered by a webhook

Implement Azure Durable Functions

Create Durable Functions using the Azure portal

Implement custom handlers

Azure Functions custom handlers

Develop for Azure Storage (15-20%)

Develop Solutions That Use Cosmos DB Storage

Select the appropriate API and SDK for a solution

Choose the appropriate API for Azure Cosmos DB

Implement partitioning schemes and partition keys

Model & partition data on Azure Cosmos DB using a real-world example

Partitioning and horizontal scaling in Azure Cosmos DB

Deep Dive into Azure Cosmos Partition Keys

Perform operations on data and Cosmos DB containers

Insert and query data in your Azure Cosmos DB database

Set the appropriate consistency level for operations

Choose the right consistency level

Manage change feed notifications

Change feed in Azure Cosmos DB

Change Feed – Unsung hero of Azure Cosmos DB

Develop Solutions That Use Blob Storage

Move items in blob storage between storage accounts or containers

Moving Azure storage blobs using CLI

Test your knowledge on Azure Storage Account

Q] You have to implement the azcopy tool to copy objects from a local folder named ‘Contoso’ in the ‘D’ drive to a container named ‘demo’ in a storage account.

The azcopy command below is meant to copy all the objects in the local folder.

azcopy copy slot1 "slot2/?sv=2018-03-28&ss=bjqt&srt=sco&sp=rwddgcup&se=2019-05-01T05:01:17Z&st=2019-04-30T21:01:17Z&spr=https&sig=MGCXiyEzbtttkr3ewJIh2AR8KrghSy1DGM9ovN734bQF4%3D" --recursive=false

Which of the following would go into Slot2?

- contoso.blob.core.windows.net/demo

- contoso.blob.windows.net/demo

- D:\contoso

- contoso

Explanation: To copy objects with the azcopy tool, use the azcopy command with the copy keyword. Then comes the local directory from where the objects need to be copied. Next comes the destination URL of the target container in the storage account.

Clearly, options 3 and 4 are incorrect because they are not URLs. Option 2 is also incorrect because it leaves out the core part of the domain. Option 1 is the correct answer, as the URL for a storage account has the format <name>.blob.core.windows.net. And, demo is the name of the container.

The characters that you see after the container name are for the SAS token.
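The way the destination argument is put together can be sketched in Python. The SAS token below is a placeholder, not a working token, and the helper name container_url is made up for this example.

```python
def container_url(account, container, sas_token):
    """Build the blob container URL with the SAS token as the query string."""
    return (
        f"https://{account}.blob.core.windows.net/{container}"
        f"?{sas_token.lstrip('?')}"
    )


SAS_PLACEHOLDER = "sv=<version>&sig=<signature>"  # placeholder, not a real token

destination = container_url("contoso", "demo", SAS_PLACEHOLDER)

# azcopy copy <source> <destination> [flags]
command = ["azcopy", "copy", r"D:\Contoso", destination, "--recursive=false"]

for argument in command:
    print(argument)
```

On a real command line, the destination needs to be quoted because the SAS token contains & characters that the shell would otherwise interpret.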

Reference Link: Using a SAS token

This question is part of the free Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Set and retrieve properties and metadata

Setting properties & metadata during the import process

Perform operations on data by using the appropriate SDK

Azure Blob storage client library v12 for .NET

Implement storage policies, and data archiving and retention

Manage immutability policies for Blob storage

Implement Azure Security (20-25%)

Implement User Authentication and Authorization

Authenticate and authorize users by using the Microsoft Identity platform

Authentication with the Microsoft identity platform endpoint

Microsoft identity platform & OAuth 2.0 authorization code flow

Authenticate and authorize users and apps by using Azure Active Directory

Test your knowledge on Azure Active Directory

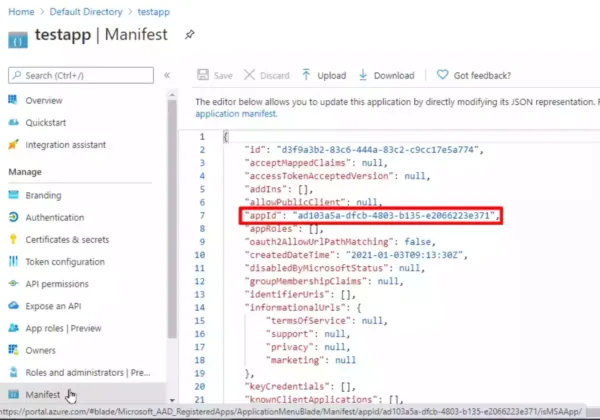

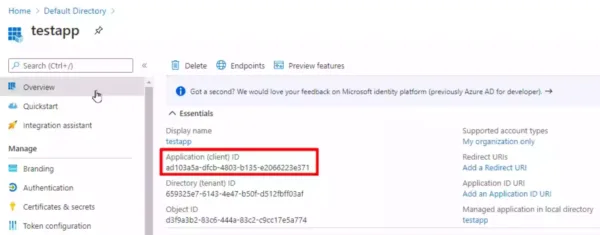

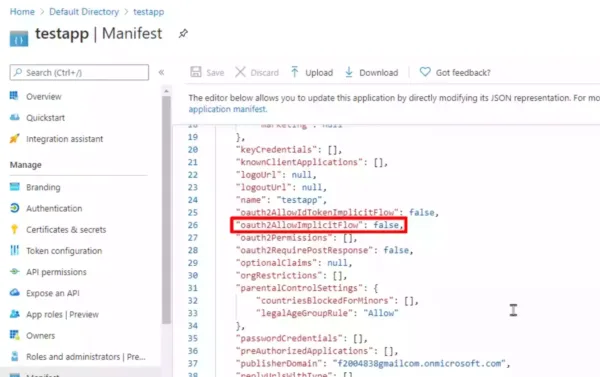

Q] You build a web application that’s deployed to Azure. The application would not allow anonymous access and the authentication would be carried out via Azure AD. Further, the application needs to abide by the following requirements:

- Users must be able to log into the web application using their Azure AD credentials

- The personalization of the web application must be based on the membership in Active Directory groups

{

'appId' : 'ad1403a-dfb-48d03-b15-e622s3le31'

slot1 : 'all'

slot2 : true

}

You have to configure the application manifest file. Which of the following would go into Slot2?

- allowPublicClient

- oauth2Permissions

- requiredResourceAccess

- oauth2AllowImplicitFlow

Explanation: First, look at the application manifest file shown below, which you sometimes edit to configure the attributes of an application in Azure Active Directory.

Every application has an appId, which is the application ID (also called the client ID).

From the question, it is evident that the attribute in slot2 takes a Boolean value.

Now, to meet the requirements in the question, you would set the attribute oauth2AllowImplicitFlow to true.

This attribute specifies whether a web app can request OAuth2.0 implicit flow access tokens for browser-based apps, like single-page apps.

So, option 4 perfectly fits what’s asked in the question.

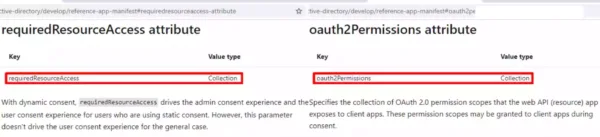

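The options oauth2Permissions and requiredResourceAccess hold collections rather than Boolean values, so you can easily rule them out. allowPublicClient is a Boolean too, but it only specifies the fallback application type (public client), so it does not fit the requirements.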
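As an illustration, the manifest fragment could look like this when filled in. It is a hedged sketch: the appId is a placeholder, and using groupMembershipClaims for slot1 is my assumption, based on the requirement that personalization depends on group membership.

```python
import json

manifest_fragment = {
    "appId": "<application-client-id>",  # placeholder, not a real ID
    "groupMembershipClaims": "All",      # assumed slot1: emit group claims
    "oauth2AllowImplicitFlow": True,     # slot2: the Boolean attribute
}

# Only one attribute in the fragment is a Boolean, which matches slot2.
boolean_attributes = [
    name for name, value in manifest_fragment.items() if isinstance(value, bool)
]

print(json.dumps(manifest_fragment, indent=2))
print(boolean_attributes)
```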

Reference Link: Azure Active Directory app manifest

requiredResourceAccess attribute

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Create and implement shared access signatures

Grant limited access to Azure Storage resources using SAS

Implement Secure Cloud Solutions

Secure app configuration data by using App Configuration or Azure Key Vault

Use Key Vault references in an ASP.NET Core app

Centralized app configuration and security

Develop code that uses keys, secrets, and certificates stored in Azure Key Vault

Keys

Set and retrieve a key from Azure Key Vault using PowerShell

Azure Key Vault key client library for .NET (SDK v4)

Secrets

Set and retrieve a secret from Key Vault using PowerShell

Azure Key Vault secret client library for .NET (SDK v4)

Certificates

Set and retrieve a certificate from Azure Key Vault

Azure Key Vault certificate client library for .NET (SDK v4)

Implement solutions that interact with Microsoft Graph

Test your knowledge of Microsoft Graph API

Q] You develop an ASP.Net Core application that works with blobs in an Azure storage account. The application authenticates via Azure AD credentials.

Role-based access has been implemented on the containers that contain the blobs. These roles have been assigned to the users.

You have to configure the application so that the user’s permissions can be used with the Azure Blob containers.

Which of the following would you use as the Permission for the Microsoft Graph API?

- User.Read

- User.Write

- client_id

- user_impersonation

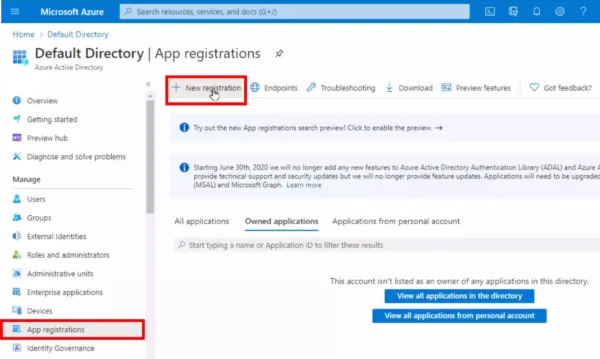

Explanation: First, log in to the Azure portal and create a new app registration in the Azure Active Directory for the ASP.Net Core application.

Click ‘App registrations’ and then click ‘New registration.’ Enter a name for your app and then click ‘Register’ to create the app.

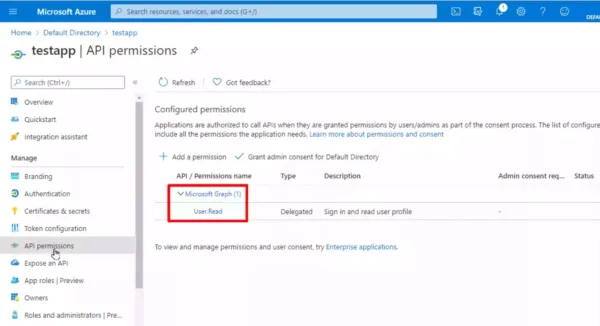

If you check the API permissions for the app, by default, the registered Azure AD application has access to the Microsoft Graph API.

And the permission used by the app for accessing Microsoft Graph API is User.Read. So, option 1 is the right choice.
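To show what the User.Read permission is typically used for, here is a minimal Python sketch that prepares a request for the signed-in user's profile from the Microsoft Graph /me endpoint. It only builds the request and does not send it; the access token is a placeholder, and acquiring a real one is outside the scope of this sketch.

```python
import urllib.request

GRAPH_ME_URL = "https://graph.microsoft.com/v1.0/me"


def build_profile_request(access_token):
    """Prepare (but do not send) a GET request for the signed-in user's profile."""
    return urllib.request.Request(
        GRAPH_ME_URL,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Accept": "application/json",
        },
        method="GET",
    )


request = build_profile_request("<access-token>")  # placeholder token
print(request.get_method(), request.full_url)
```

With a valid token that carries the User.Read scope, passing the request to urllib.request.urlopen would return the profile as JSON.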

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Monitor, Troubleshoot, and Optimize Azure Solutions (15-20%)

Integrate Caching and Content Delivery within Solutions

Configure cache and expiration policies

Configure cache and expiration policy for Azure CDNs

Configure cache and expiration policies for Azure Redis Cache

Setting an expiration value on keys

How to configure Azure Cache for Redis

Implement secure and optimized application cache patterns including data sizing, connections, encryption, and expiration

Common cache patterns with Azure Redis Cache

Instrument Solutions to Support Monitoring and Logging

Configure an app or service to use Application Insights

Application Insights for ASP.NET Core applications

Configure Application Insights for your ASP.NET website

Application Insights for .NET console applications

Test your knowledge on Azure Application Insights

Q] A developer needs to enable the Application Insights Profiler for an Azure Web App. Which of the following features is a prerequisite to enable the Profiler?

- CORS configuration

- Always On setting

- Enable Identity

- Enable Custom domains

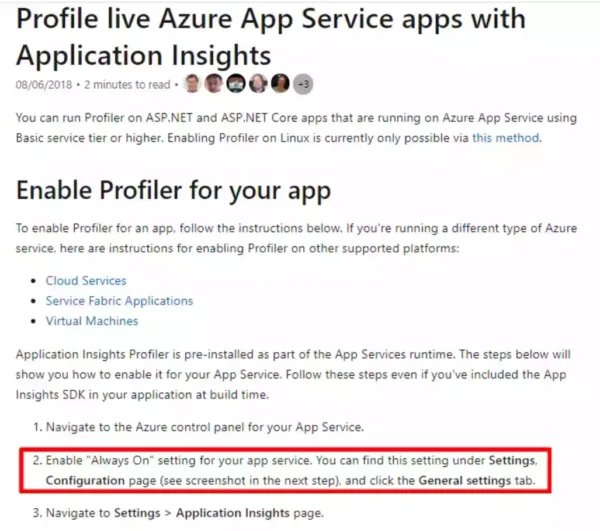

Explanation: Azure Application Insights Profiler provides performance traces for applications that are running in production in Azure.

Enabling Identity is part of Azure Active Directory and is not related to Application Insights Profiler.

Custom domains are a feature to access any Azure URI by a different or a customized URI. Hence this is not related to Application Insights Profiler.

Cross-origin resource sharing (or CORS) defines a way for web applications in one domain to interact with resources in another domain. CORS will not help in enabling Application Insights Profiler.

To enable Application Insights Profiler for a web App, the correct prerequisite is to enable ‘Always On.’

This is required because if a web app is idle for too long, Azure App Service unloads the website, and it only loads the website again when the traffic returns. By enabling ‘Always On’, you keep the Web App up and running all the time.
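Always On can be switched on from the portal or from the Azure CLI. The Python sketch below only assembles and prints the CLI command; the app and resource group names are hypothetical.

```python
import shlex

# Hypothetical names, used only for illustration.
APP, GROUP = "testapp", "test-rg"

enable_always_on = [
    "az", "webapp", "config", "set",
    "--name", APP, "--resource-group", GROUP,
    "--always-on", "true",
]

print(shlex.join(enable_always_on))
```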

Reference Link: Profile live Azure App Service apps with Application Insights

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Analyze and troubleshoot solutions by using Azure Monitor

Troubleshoot solutions by using Azure Monitor

Troubleshoot an App Service app with Azure Monitor

Implement Application Insights web tests and alerts

Creating an Application Insights web test & alert programmatically

Connect to and Consume Azure services and Third-party Services (15-20%)

Implement API Management

Create an APIM instance

Creating Azure API Management instance using the Azure portal

Configure authentication for APIs

Secure APIs using client certificates in APIM

Define policies for APIs

How to set or edit Azure APIM policies

Develop Event-based Solutions

Implement solutions that use Azure Event Grid

Automate resizing uploaded images using Event Grid

Implement solutions that use Azure Notification Hubs

Send notifications to UWP apps using Azure Notification Hubs

Implement solutions that use Azure Event Hub

Visualize data anomalies in real-time events in Azure Event Hubs

Test your knowledge on Azure Event Hubs

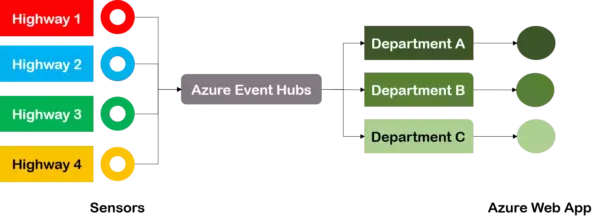

Q] A company is building a traffic monitoring system. The system monitors the traffic along 4 highways and is responsible for producing a time series-based report for each highway.

The traffic sensors on each highway have been configured to send their data to Azure Event Hubs. The data from Event Hubs is then consumed by three departments. Each department makes use of an Azure Web App to display the data.

You have to implement the Azure Event Hub instance and ensure that data throughput is maximized and latency is minimized.

Which of the following would you use as the partition key?

- Highway

- Department

- Timestamp

- Datestamp

Explanation: The scenario in the question is best understood with the help of the diagram below:

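Since a separate time-series report is produced for each highway, a highway identifier (for example, the highway number) is the ideal partition key. Events that share a partition key land in the same partition, so each highway’s readings stay together and in order, while the four highways spread the load across partitions.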

The other options are incorrect since they would not provide ideal values for the distribution of data across the partitions.
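The effect of a partition key can be illustrated with a toy hash-based router in Python. Event Hubs uses its own internal hashing, so the SHA-256 mapping here is only a stand-in, and the partition count and sample events are made up.

```python
import hashlib

PARTITION_COUNT = 4  # assumed for the example


def partition_for(key, partition_count=PARTITION_COUNT):
    """Map a partition key to a partition index (illustrative hash only)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


# (highway, vehicle count) readings arriving from the sensors
events = [
    ("highway-1", 42),
    ("highway-2", 17),
    ("highway-1", 45),
    ("highway-3", 30),
]

by_partition = {}
for highway, vehicles in events:
    by_partition.setdefault(partition_for(highway), []).append((highway, vehicles))

for partition, items in sorted(by_partition.items()):
    print(partition, items)
```

Every reading for a given highway ends up in the same partition and in arrival order, which is what a per-highway time series needs. A timestamp key would scatter one highway's readings across partitions.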

Reference Link: Partitions in Event Hub

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Import OpenAPI definitions

Import an OpenAPI specification

Import and publish your first API

API import restrictions & known issues

Develop Message-based Solutions

Implement solutions that use Azure Service Bus

Azure PowerShell to create a Service Bus namespace & a queue

Test your knowledge of Azure Service Bus

Q] You develop an application that makes use of Azure Service Bus. You create filters based on different types of subscribers that would subscribe to the topic.

Which of the following would you use as the filter condition for the requirement?

“Subscribers should be able to receive all messages being sent to the topic”

- Boolean filters

- Primary filters

- SQL filters

- Correlation filters

Explanation: Azure Service Bus supports 3 different filter conditions:

- Boolean filters

- SQL filters, and,

- Correlation filters.

Correlation filters have a set of conditions that are matched against the arriving messages.

SQL filter holds a SQL-like conditional expression that’s evaluated against the incoming messages.

Boolean filters cause either all or none of the arriving messages to be selected for the subscription.

Since the question mentions that we need to receive all messages sent to the topic, a Boolean filter would be more suitable.

Option 1 is the correct choice.
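The difference between the three filter types can be modelled in plain Python. This is a conceptual sketch only and does not use the Service Bus SDK: the function names are invented, and the SQL filter is represented by an ordinary predicate standing in for an expression such as amount > 100.

```python
def true_filter(message):
    """Boolean filter that selects every message."""
    return True


def false_filter(message):
    """Boolean filter that selects no messages."""
    return False


def correlation_filter(**expected):
    """Match messages whose properties equal all the expected values."""
    def matches(message):
        return all(message.get(key) == value for key, value in expected.items())
    return matches


def deliver(messages, subscriptions):
    """Return the messages each subscription would receive."""
    return {
        name: [m for m in messages if rule(m)]
        for name, rule in subscriptions.items()
    }


messages = [
    {"label": "order", "region": "EU", "amount": 120},
    {"label": "refund", "region": "US", "amount": 30},
]

subscriptions = {
    "audit": true_filter,                                   # receives everything
    "paused": false_filter,                                 # receives nothing
    "eu-orders": correlation_filter(label="order", region="EU"),
    "large": lambda m: m["amount"] > 100,                   # stand-in for a SQL filter
}

received = deliver(messages, subscriptions)
for name, items in received.items():
    print(name, len(items))
```

Only the subscription with the true Boolean filter receives every message sent to the topic, which is the requirement in the question.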

Reference Link: Azure Service Bus topic filters

This question is part of the free AZ-204 Whizlabs practice test. My detailed explanation (with demo) is given on my YouTube channel as well!

Implement solutions that use Azure Queue Storage queues

Work with Azure storage queues in .NET

This brings us to the end of the AZ-204 Developing Solutions For Microsoft Azure Study Guide.

What do you think? Let me know in the comments section if I have missed anything. Also, I would love to hear how your preparation is going!

In case you are preparing for other Azure certification exams, check out the Azure study guide for those exams.

Follow Me to Receive Updates on AZ-204 Exam

Want to be notified as soon as I post? Subscribe to the RSS feed / leave your email address in the subscribe section. Share the article to your social networks with the below links so it can benefit others.

49 Comments

Hi, can you provide links for practice test

Hi Ravi sir,

Thank you for writing such an amazing blog. I have some additional queries

1) As a javascript developer, can I give this exam?

2) Can I host a MERN full-stack app on Azure?

Hi Ravikiran,

I am planning to write AZ 204 , I am preparing based on the links that you have mentioned.

Could you please tell me if they are asking labs currently, and should we learn all the PowerShell commands that we use here? For example, VM creation, Front Door cache settings, and so on.

Kindly let me know .

Hi Ravi,

I am planning to write AZ204 . I have completed the AZ fundamentals certification. I would like to know if they ask us to write code during the exam. will there be labs? or what is the format of the exam ?

Hello Ranjitha,

You will be required to fill in the code blanks. No code required

Hi RaviKrian,

Thank you so much for detailed information.

I am looking for AZ-204 certification, I am a beginner for azure.

I am interested in Pluralsight learning, so when I search your link (Developing Solutions for Microsoft Azure (AZ-203)) on Pluralsight, I get 74 results.

So, do I need to follow all 74 to get AZ-204 certified?

Kindly suggest me.

Hi Ravi,

My self Rakesh senior developer in Microsoft Technologies. I am looking for AZ-204 certification learning materials. Let me know the best approach to learn and do labs.

Hello Rakesh,

labs are available in GitHub, and for the course pick any from LinkedIn or Udemy

Verify understanding with PT, and get an Azure sub

Hi Ravi, thank you for this! do you have any books that you can recommend for this exam

It is a new exam, so I doubt there are any books out there

Thank you very much Ravi for this guide. Please if you aware, let me know whether AZ-204 exam includes lab or only multi choice questions.

Hello Jasmine, Generally they have labs, but these days labs are not tested.

Thank you Ravi, I have passed the exam successfully.

Ravi, Jasmine,

I just started preparing for AZ 204 after completing AZ 900. New to cloud and experienced in Python.

However the MS learning path uses C# & Powershell throughout the course. Please let me know if the exam will be oriented towards any programming language/commands? Do we need to have in-depth C# or Powershell knowledge OR will a high level understanding of commands/scripts be sufficient.

TIA!

Hello Nikhil,

A high-level understanding of PowerShell commands is sufficient. There is a pattern to those PS commands that you can easily master.

Please check this documentation

https://docs.microsoft.com/en-us/powershell/azure/get-started-azureps?view=azps-4.5.0#find-commands

Hi Ravi, i passed AZ 204 today and really it was a tough one. Your pointers helped a looot 🙂 Being a QA and got thru this exam feels good 🙂

That’s awesome Kavitha. Congrats. What’s next on your learning journey?

Configure Just-in-time access to a VM: This does not seem to be part of AZ-204 or AZ-203, can you confirm?

VM for remote access is mentioned. I think they imply JIT

Is that book “Exam Ref AZ-203 Developing Solutions for Microsoft Azure” by Santiago Fernández Muñoz still useful when we are preparing for AZ-204? I read its contents, they are similar, and it gives some solid background in terms of concepts and understandings. Do you recommend we go through the above book to prepare for AZ-204? Thanks.

Yea, if you are a bookworm, that book will be a useful guide, but I doubt it is updated for az-204

It seems to be a very complete guide for those preparing for AZ-204 Exam, thanks for the efforts to compile all those information into one page. I am preparing for AZ-204, and will follow your links closely.

Hi Ravi,

Is whizlab or udemy considered braindump?

Hi Ravi,

I recently completed AZ-900 Fundamentals and looking forward to start preparing for AZ-204. And as i am from mainframe background with 10+ yrs exp, is it necessary to learn any other programming language ?

Well, you don’t have to… But try getting your hands on Azure and developing some solutions with the CLI, SDKs & other APIs before you appear for the exam.

Hello Ravikiran, Thank you for details. I have 14 years of development exp but no Azure exp. Should going to through linkedin or pluralsight courses will be enough for me to pass the exam?

Hello Ravi,

I am a QA person with development knowledge and am planning to write the AZ-204 exam by the end of this month. I started following your blog and kicked off my preparation. Any other suggestions on dos and don’ts would really help me.

Awesome list of resources! Thank you for compiling this list. I am curious, do you know if the exam focuses more on Powershell vs Azure CLI commands?

Higher emphasis on PowerShell

Hi, Thanks alot for the details of the exam.

Question: I am preparing to take the Azure 204 exam. What process/Exam learning guides would you recommend out of the above?

Course – Pluralsight/LinkedIn

Practice Test – Whizlabs

Wow this is amazing! This will really save me a lot of time

Hello Ravikiran,

thanks for informative post. I have a question.

I heard that Microsoft removed hands-on-lab part from the AZ exams.

Is it still like that? Or lab section came back?

If there is a lab section in the exam, how can we study for it? I use generally Portal and I don’t know Azure-CLI. Should I memorize all the commands?

Thanks and regards.

Hi Mustafa,

It is like that currently. But it may change soon. The best thing is not to think about that and be prepared for everything.

You don’t need to memorize the commands. I suggest you start writing commands to create resources. After a while, you will easily figure out the pattern of those commands. That should be enough for the exam.

Hi Ravikiran,

Thank you a lot for your post, it is fantastic and very useful.

Are all question on Whizlabs genuine (i.e. no brain dumps)?

Thank you

Alberto

Thanks, Alberto.

Yea Whizlabs is not brain dumps

Hi Ravikiran,

Thank you for your answer,

Will AKS be part of the exam or will it only focus on Azure Container Instances?

Incidentally, I noticed in your links above there are references only on AKS?

Kind regards,

Alberto

Yea, AKS is not in objectives. Probably confused with az-203

Thanks for pointing out. I updated the exam resources

Thank you again, your article really saved me a lot of time.

Welcome

Hi, I want to do the AZ-203 or AZ-204 Exam. Can you please email me back with your contact so that i can touch base with you.

Please contact via the contact form