Welcome to the AZ-104 Official Practice Test – Part 4.

In this part, I have given my detailed explanations of the 10 official questions from Microsoft. Unlike on the Microsoft website, the explanations include screenshots to help you prepare for the AZ-104 exam.

That said, these tests are very simple, and they should only be used to brush up on the basics. The real exam is rarely this easy. For more rigorous practice and in-depth knowledge, check out my practice tests.

Once done, check out the AZ-104 questions Part 5 and the accompanying AZ-104 Practice Test video.

Q31] You have an Azure subscription that contains a container app named App1. App1 is configured to use cached data.

You plan to create a new container.

You need to ensure that the new container automatically refreshes the cache used by App1.

Which type of container should you configure?

a. blob

b. init

c. privileged

d. sidecar

An Azure container app can have multiple containers. Sidecar containers run alongside the main container and provide additional functionality and services. For example, a sidecar container can run a background process that refreshes a cache, stored in a shared volume, that the primary app container uses.

A very similar requirement is given in the question. So, Option D is the correct answer.

Reference Link: https://learn.microsoft.com/en-us/azure/container-apps/containers#sidecar-containers

The init containers prepare the environment for the main container to start its execution. They handle tasks such as fetching data or performing one-time setup actions. Option B is incorrect.

Reference Link: https://learn.microsoft.com/en-us/azure/container-apps/containers#init-containers
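To make the container roles concrete, here is a minimal sketch of the `template` section of a container app definition, written as a Python dictionary. The container names, image names, and mount path are hypothetical, and the field names (`containers`, `initContainers`, `volumes`, `volumeMounts`) reflect my understanding of the Container Apps resource schema, so check them against the reference before relying on them.

```python
# Sketch of a container app template: one main container, one sidecar,
# and one init container. All names and images below are hypothetical.
template = {
    "initContainers": [
        # Runs to completion before the app containers start (one-time setup).
        {"name": "setup", "image": "example.azurecr.io/setup:latest"},
    ],
    "containers": [
        # Main application container that reads the cache.
        {
            "name": "app1",
            "image": "example.azurecr.io/app1:latest",
            "volumeMounts": [{"volumeName": "cache", "mountPath": "/cache"}],
        },
        # Sidecar: runs alongside the main container and refreshes the cache.
        {
            "name": "cache-refresher",
            "image": "example.azurecr.io/cache-refresher:latest",
            "volumeMounts": [{"volumeName": "cache", "mountPath": "/cache"}],
        },
    ],
    # Shared volume that both app containers mount.
    "volumes": [{"name": "cache", "storageType": "EmptyDir"}],
}


def containers_sharing(template, volume_name):
    """Return the names of app containers that mount the given volume."""
    return [
        c["name"]
        for c in template["containers"]
        if any(m["volumeName"] == volume_name for m in c.get("volumeMounts", []))
    ]


print(containers_sharing(template, "cache"))
```

The point of the sketch is that the sidecar lives in the same `containers` list as the main app and shares its volume, while the init container sits in a separate list because it finishes before the app starts.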

Q32] You have an Azure subscription that contains a resource group named RG1. RG1 contains an application named App1 and a container app named containerapp1.

App1 is experiencing performance issues when attempting to add messages to the containerapp1 queue.

You need to create a job to perform an application resource cleanup when a new message is added to a queue.

Which command should you run?

a. az containerapp job create --name "my-job" --resource-group "RG1" --trigger-type "Event" --replica-timeout 60 --replica-retry-limit 1 ...

b. az containerapp job create --name "my-job" --resource-group "RG1" --trigger-type "Manual" --replica-timeout 60 --replica-retry-limit 1 ...

c. az containerapp job start --name "my-job" --resource-group "RG1" --trigger-type "Schedule" --replica-timeout 60 --replica-retry-limit 1 ...

d. az containerapp job start --name "my-job" --resource-group "RG1" --trigger-type "Event" --replica-timeout 60 --replica-retry-limit 1 ...

Since we need to create a job, not start a job, we can ignore options C and D.

Reference Link: https://learn.microsoft.com/en-us/azure/container-apps/jobs

There are three types of job triggers: Manual, Schedule, and Event. Since we need to create a job that performs a task when a new message is added to a queue, the job trigger type will be Event. Option A is the correct answer.

Reference Link: https://learn.microsoft.com/en-us/azure/container-apps/jobs
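The two decisions in this question (create vs. start, and which trigger type) can be sketched in Python as a small helper that assembles the command. This is only an illustration: the helper name `build_job_create_command` is my own, and it includes only the flags visible in the answer options. The options end with "...", so whatever other arguments a real job needs are deliberately left out.

```python
import shlex

# The three trigger types named in the Container Apps jobs documentation.
TRIGGER_TYPES = {"Manual", "Schedule", "Event"}


def build_job_create_command(name, resource_group, trigger_type):
    """Build the `az containerapp job create` call from the question.

    Only the flags shown in the answer options are included; the elided
    remainder of the command is not reconstructed here.
    """
    if trigger_type not in TRIGGER_TYPES:
        raise ValueError(f"unknown trigger type: {trigger_type}")
    return [
        "az", "containerapp", "job", "create",
        "--name", name,
        "--resource-group", resource_group,
        "--trigger-type", trigger_type,
        "--replica-timeout", "60",
        "--replica-retry-limit", "1",
    ]


command = build_job_create_command("my-job", "RG1", "Event")
print(shlex.join(command))
```

Building the command as a list rather than a single string avoids quoting mistakes such as the stray space in `" RG1"`.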

Q33] You have an Azure subscription that contains a web app named App1.

You configure App1 with a custom domain name of webapp1.contoso.com.

You need to create a DNS record for App1. The solution must ensure that App1 remains accessible if the IP address changes.

Which type of DNS record should you create?

a. A

b. CNAME

c. SOA

d. SRV

e. TXT

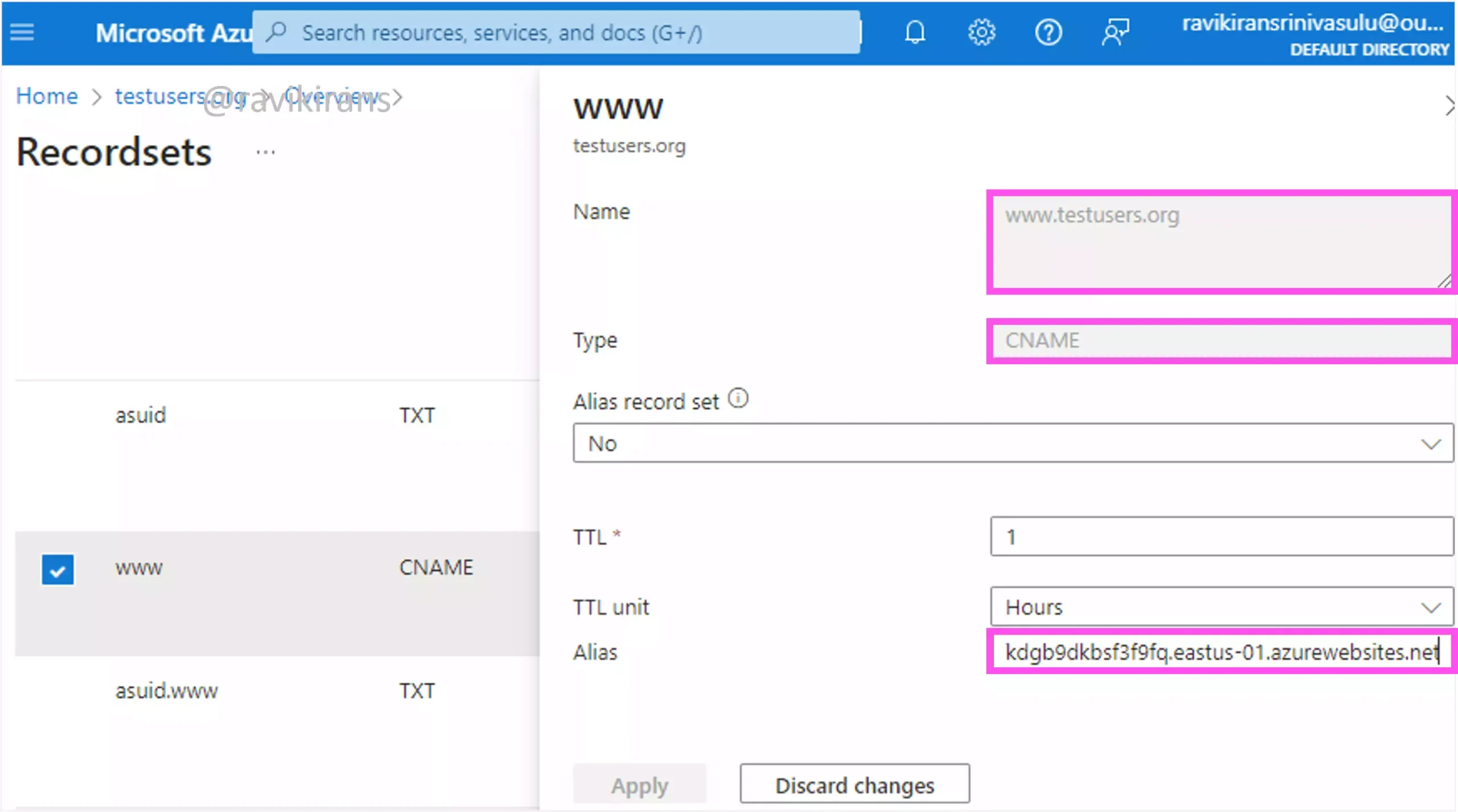

An A record maps a domain name to an IP address. A CNAME record maps a domain name to another domain name.

For web apps, you create a CNAME record that maps the custom domain name users type in the browser to the default domain name that Azure App Service provides. For example, here I mapped the custom domain www.testusers.org to the app's azurewebsites.net domain name.

If the IP address changes, an A record would have to be updated, but a CNAME record stays valid because it points to a domain name, not an IP address. Option B is the correct answer.

Reference Link: https://learn.microsoft.com/en-us/training/modules/configure-azure-app-services/8-create-custom-domain-names
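Here is a toy Python model of why the CNAME survives an IP change. It is not a real DNS client: the record tables are plain dictionaries, and the default hostname `app1.azurewebsites.net` and both IP addresses are made up for the example.

```python
# Toy DNS data. The default hostname and IP addresses are hypothetical.
a_records = {"app1.azurewebsites.net": "203.0.113.10"}
cname_records = {"webapp1.contoso.com": "app1.azurewebsites.net"}


def resolve(name):
    """Follow CNAME records until an A record gives an IP address."""
    seen = set()
    while name in cname_records:
        if name in seen:
            raise ValueError("CNAME loop")
        seen.add(name)
        name = cname_records[name]
    return a_records[name]


before = resolve("webapp1.contoso.com")

# The platform moves the app to a new IP and updates its own A record.
a_records["app1.azurewebsites.net"] = "203.0.113.99"

# The CNAME record we created was never touched, yet it resolves correctly.
after = resolve("webapp1.contoso.com")
print(before, after)
```

Had `webapp1.contoso.com` been an A record pointing at the old address, we would have had to edit it ourselves after the change.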

Q34] You need to generate the shared access signature (SAS) token required to authorize a request to a resource.

Which two parameters are required for the SAS token? Each correct answer presents part of the solution.

a. SignedIP (sip)

b. SignedResourceTypes (srt)

c. SignedServices (ss)

d. SignedStart (st)

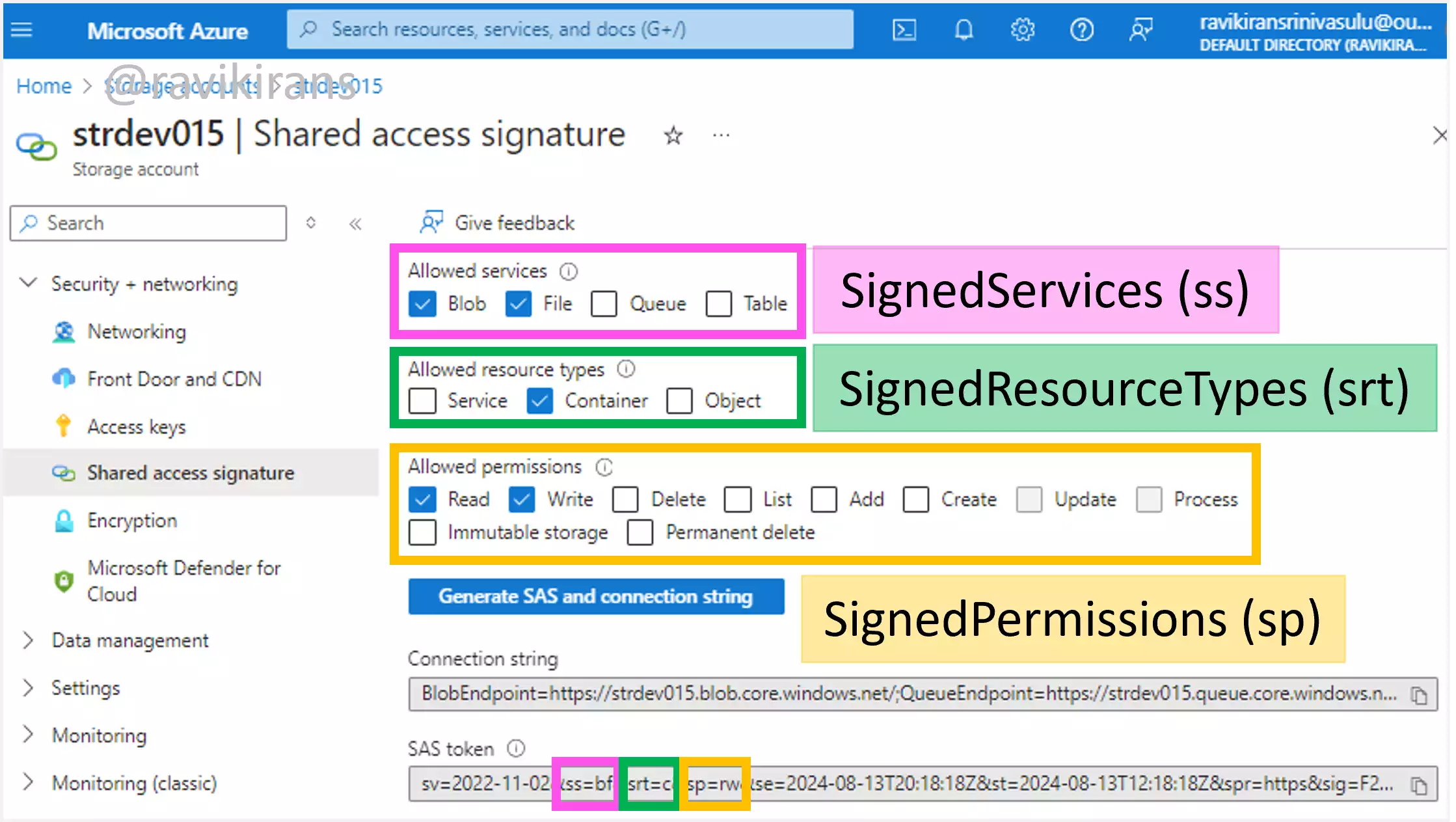

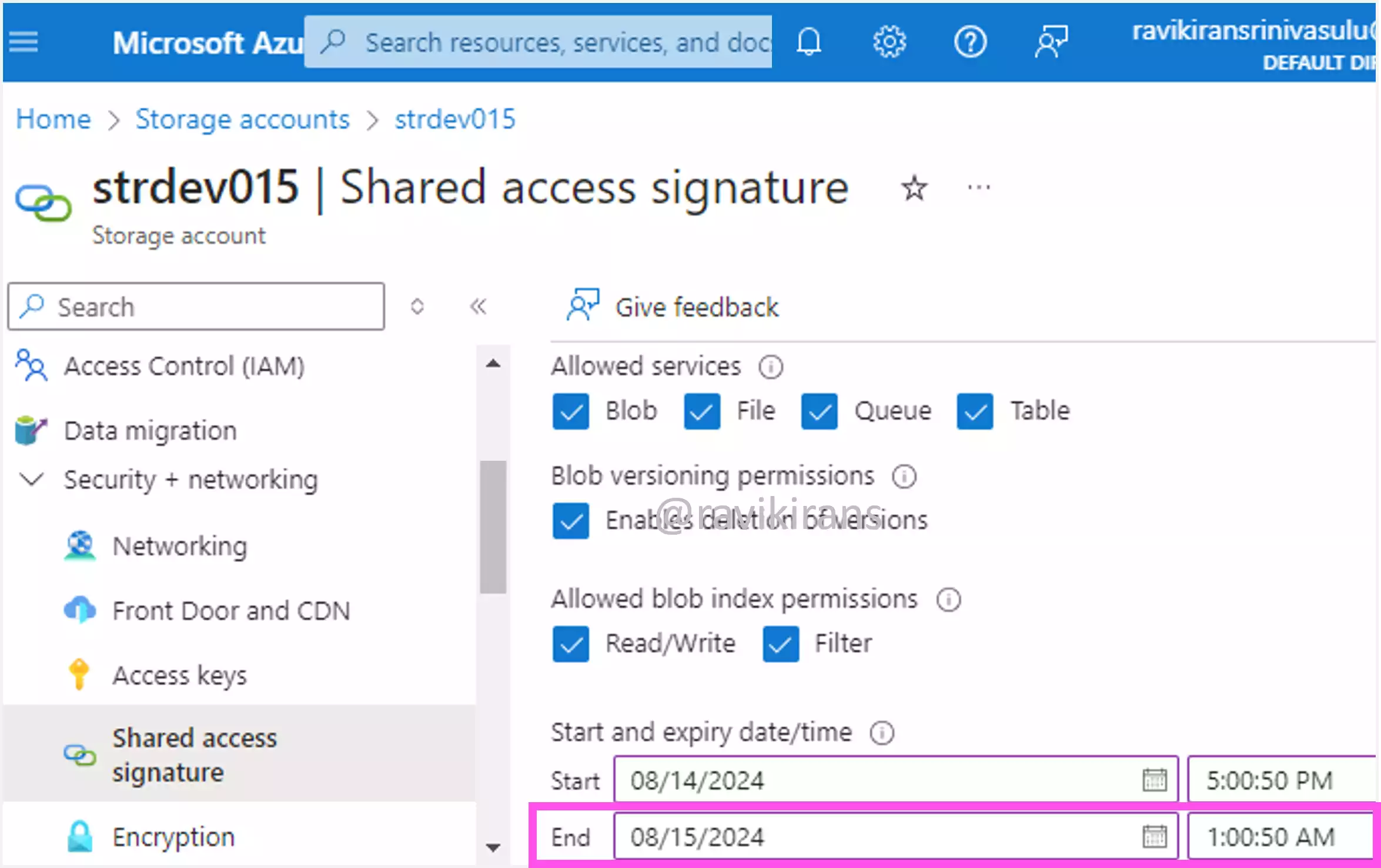

The SAS token that you generate has several required and optional parameters.

For example, the SignedServices (ss) is a required parameter that specifies the signed services that are accessible with the account SAS. Since we selected only Blob and File as Allowed services, the value of ss is bf.

The SignedResourceTypes (srt) is also a required parameter that specifies the resource types that are accessible with the account SAS. Since we selected only Container as the Allowed resource types, the value of srt is c.

The SignedPermissions (sp) is also a required parameter that specifies the permissions available with the SAS. Since we selected only Read and Write permissions, the value of sp is rw.

If you continue the analysis, you will realize that the SAS token is a mix of both required and optional parameters.

Of the given options, only SignedResourceTypes and SignedServices are required. SignedIP and SignedStart are optional parameters. Options B and C are the correct answers.

Reference Link: https://learn.microsoft.com/en-us/rest/api/storageservices/create-account-sas#specify-the-account-sas-parameters
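The required-versus-optional split can be checked with a few lines of Python. This is a minimal sketch: the set of required parameters is how I read the linked account SAS reference, and the tokens are hypothetical with a placeholder instead of a real signature.

```python
from urllib.parse import parse_qs

# Required account SAS parameters, as I read the linked reference.
# SignedStart (st) and SignedIP (sip) are optional, so they are not listed.
REQUIRED = {"sv", "ss", "srt", "sp", "se", "sig"}


def missing_required(sas_token):
    """Return the required parameters that are absent from a SAS token."""
    params = parse_qs(sas_token.lstrip("?"))
    return sorted(REQUIRED - set(params))


# Hypothetical token; the signature is a placeholder, not a real one.
complete = "?sv=2022-11-02&ss=bf&srt=c&sp=rw&se=2030-01-01T00:00:00Z&sig=PLACEHOLDER"
print(missing_required(complete))

# Without ss and srt the token is incomplete, even though st and sip
# were never present in either token.
incomplete = "sv=2022-11-02&sp=rw&se=2030-01-01T00:00:00Z&sig=PLACEHOLDER"
print(missing_required(incomplete))
```

The first call reports nothing missing, and the second reports `srt` and `ss`, the two parameters from Options B and C.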

Q35] You need to create an Azure Storage account that supports the Azure Data Lake Storage Gen2 capabilities.

Which two types of storage accounts can you use? Each correct answer presents a complete solution.

a. Premium block blobs

b. Premium file shares

c. Standard general-purpose v2

d. Premium page blobs

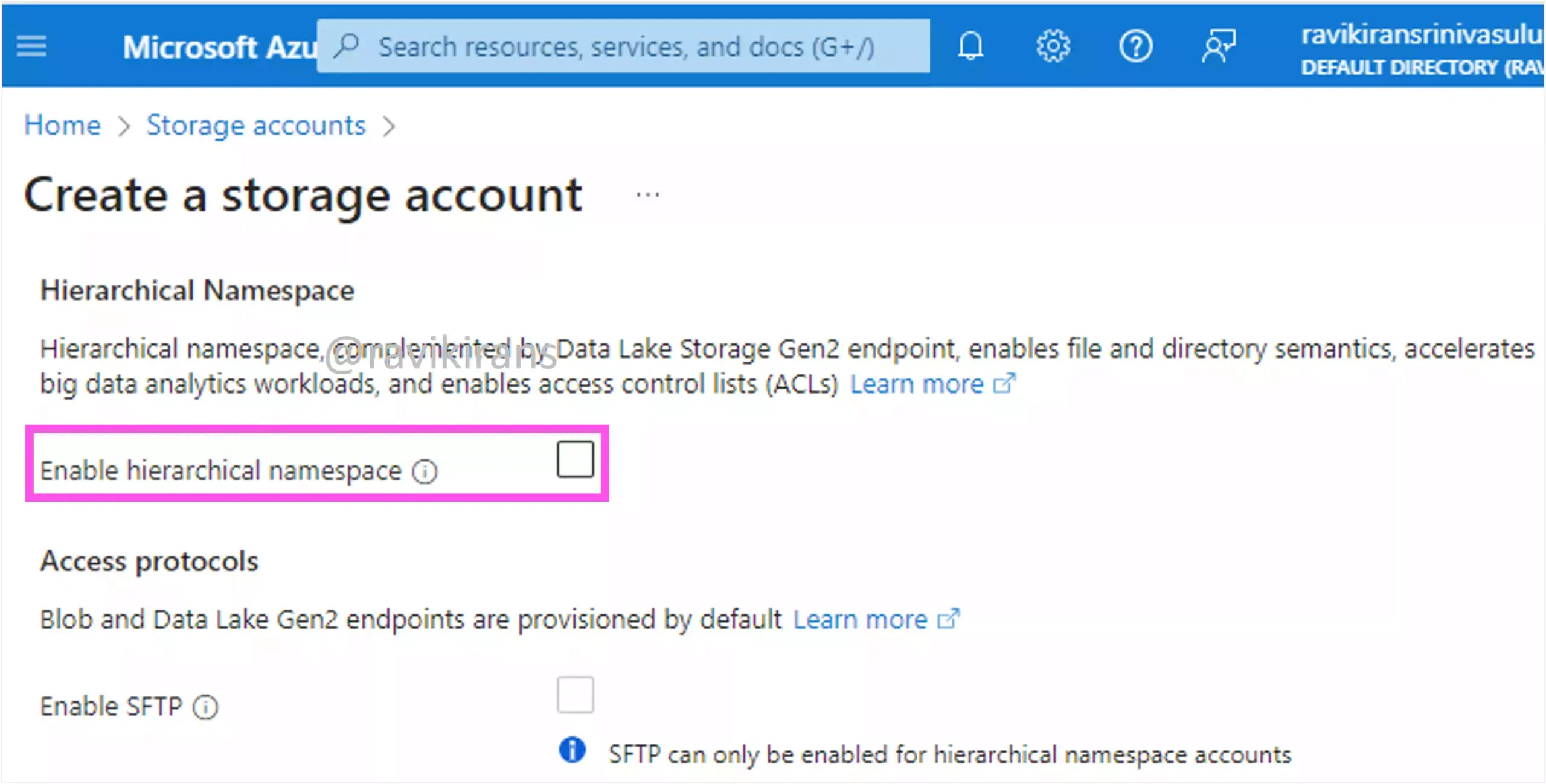

Data Lake Storage capabilities are supported in both Standard general-purpose v2 and Premium block blob storage accounts, so Options A and C are the correct answers.

To unlock Data Lake Storage capabilities in these types of storage accounts, select the Enable hierarchical namespace checkbox in the Advanced tab.

Reference Link: https://learn.microsoft.com/en-us/azure/storage/blobs/create-data-lake-storage-account#choose-a-storage-account-type

Q36] You need to create an Azure Storage account that meets the following requirements:

1] Stores data in a minimum of two availability zones

2] Provides high availability

Which type of storage redundancy should you use?

a. Geo-redundant storage (GRS)

b. Locally-redundant storage (LRS)

c. Read-access geo-redundant storage (RA-GRS)

d. Zone-redundant storage (ZRS)

Zone-redundant storage replicates your storage account synchronously across three Azure availability zones in the primary region. Option D is the correct answer.

Reference Link: https://learn.microsoft.com/en-us/azure/storage/common/storage-redundancy#zone-redundant-storage

None of the other storage redundancies replicate the data across availability zones. LRS replicates your data within a single data center.

Reference Link: https://learn.microsoft.com/en-us/azure/storage/common/storage-redundancy#locally-redundant-storage

And GRS replicates the data to a secondary region.

Reference Link: https://learn.microsoft.com/en-us/azure/storage/common/storage-redundancy#geo-redundant-storage
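The comparison above can be summarized as a small lookup table in Python. It is a simplification I made for this question: the field names are my own, and "1 zone" stands in for "a single data center" in the primary region.

```python
# Where each redundancy option from the question keeps its copies,
# summarized from the linked storage redundancy article.
# zones_in_primary = 1 is shorthand for "a single data center".
REDUNDANCY = {
    "LRS": {"zones_in_primary": 1, "secondary_region": False},
    "ZRS": {"zones_in_primary": 3, "secondary_region": False},
    "GRS": {"zones_in_primary": 1, "secondary_region": True},
    "RA-GRS": {"zones_in_primary": 1, "secondary_region": True},
}


def options_spanning_zones(min_zones):
    """Return the options that store data in at least `min_zones` zones."""
    return [
        name
        for name, info in REDUNDANCY.items()
        if info["zones_in_primary"] >= min_zones
    ]


print(options_spanning_zones(2))
```

With a minimum of two availability zones, ZRS is the only option in the list that qualifies.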

Q37] You have an Azure Storage account named storageaccount1 with a blob container named container1 that stores confidential information.

You need to ensure that content in container1 is not modified or deleted for six months after the last modification date.

What should you configure?

a. A custom Azure role

b. Lifecycle management

c. The change feed

d. The immutability policy

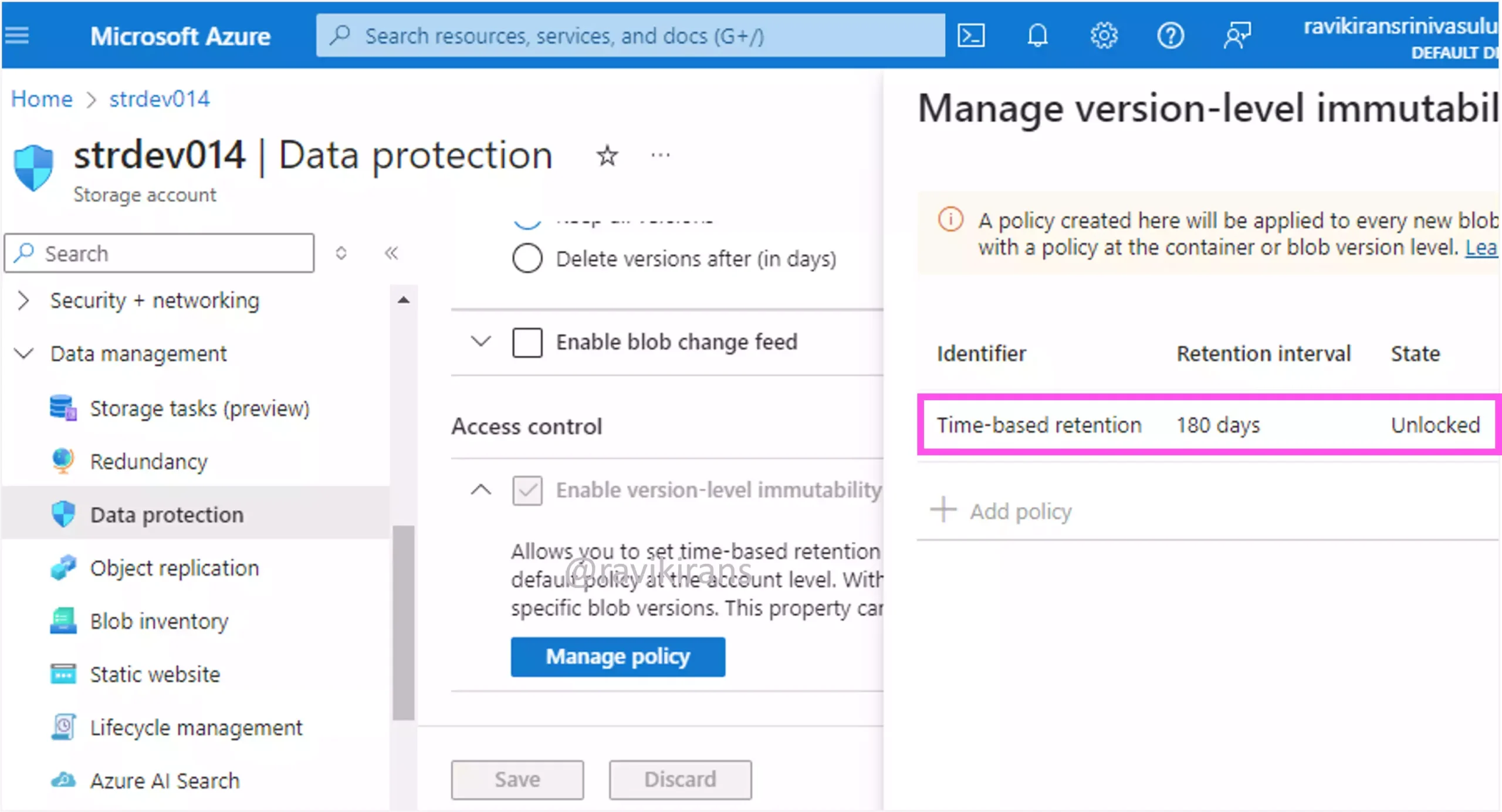

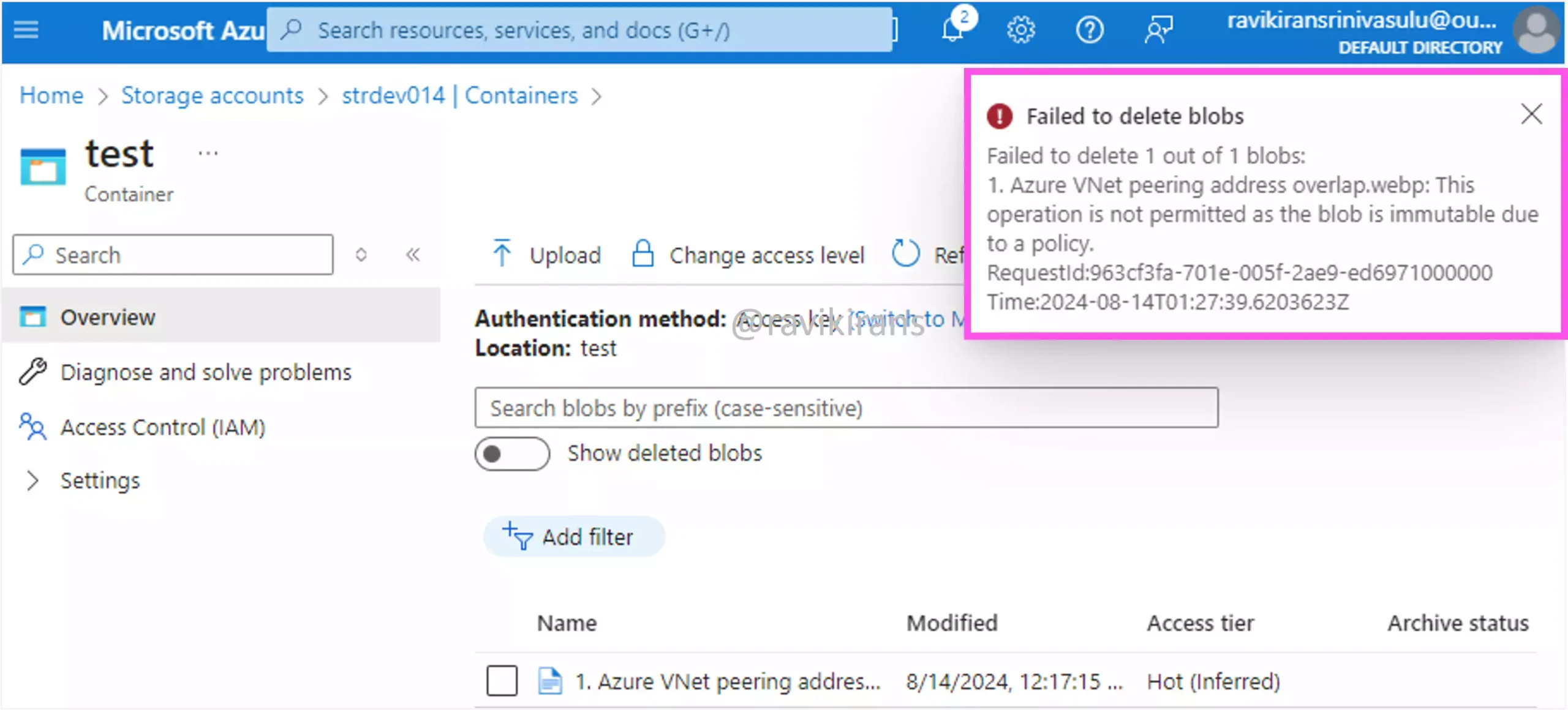

You can configure a time-based retention policy (an immutability policy) for the container and set the retention interval to 180 days.

This ensures that content in the container can be created and read, but not modified or deleted, for the retention duration.

Reference Link: https://learn.microsoft.com/en-us/azure/storage/blobs/immutable-storage-overview

Option D is the correct answer.
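The retention check itself is simple date arithmetic. Below is a minimal Python sketch of the idea, assuming the retention clock starts at a timestamp we pass in as `start`. Which timestamp the service actually uses depends on how the policy is configured, so treat `start` as a stand-in and see the linked overview for the exact rules.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=180)  # retention interval from the explanation


def is_protected(start, now):
    """True while the retention interval counted from `start` has not elapsed."""
    return now < start + RETENTION


start = datetime(2024, 1, 1, tzinfo=timezone.utc)  # hypothetical timestamp
print(is_protected(start, start + timedelta(days=179)))
print(is_protected(start, start + timedelta(days=181)))
```

On day 179 the content is still protected, and on day 181 it is not.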

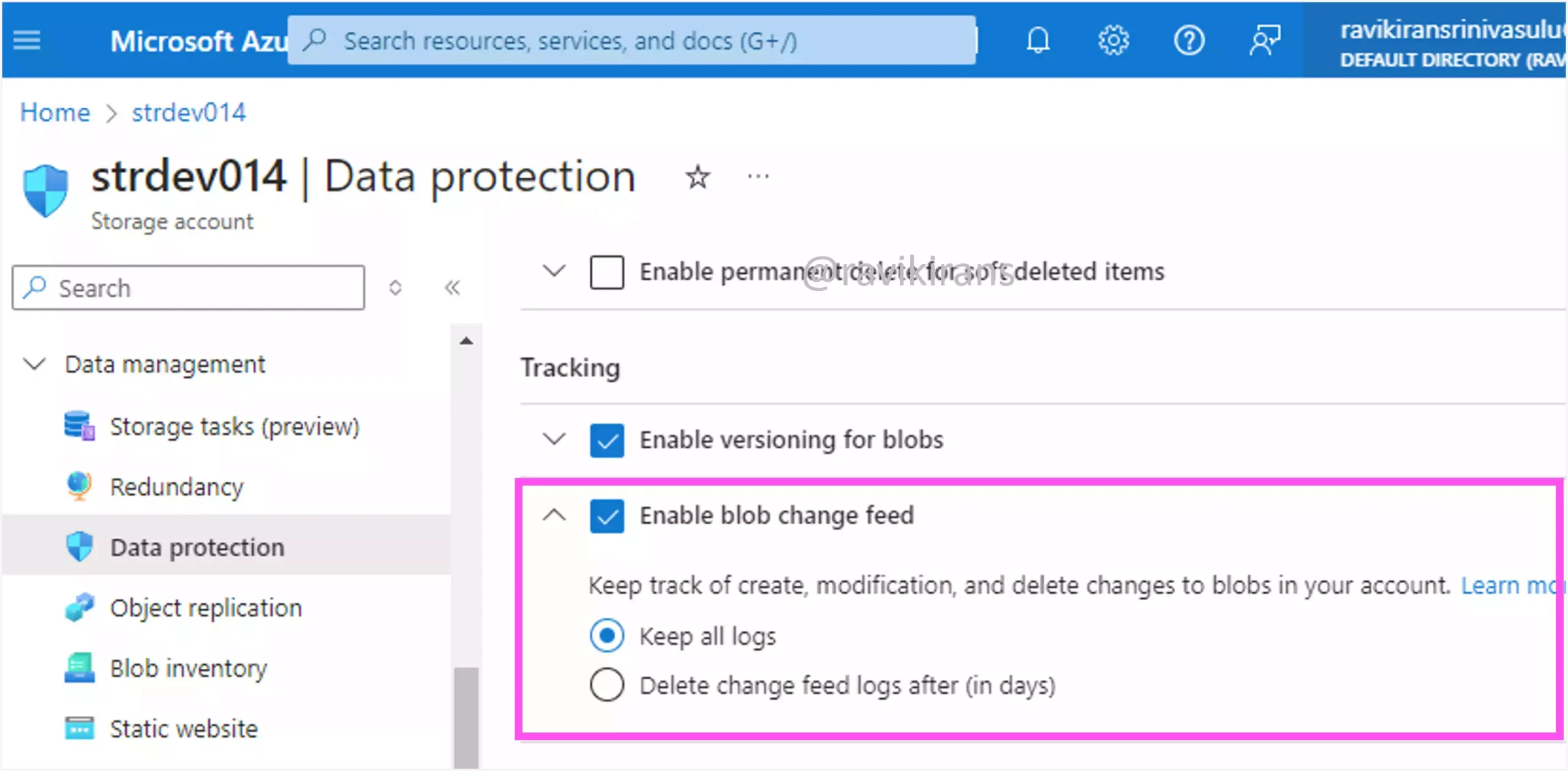

Change feed provides transaction logs of all the changes that occur to the blobs in your storage account.

Option C is incorrect.

Reference Link: https://learn.microsoft.com/en-us/azure/storage/blobs/storage-blob-change-feed

Lifecycle management offers a rule-based policy to transition your data to other access tiers (hot, cool, cold, archive) or delete it at the end of the data's lifecycle. It does not prevent modification or deletion. Option B is incorrect.

Reference Link: https://learn.microsoft.com/en-us/azure/storage/blobs/lifecycle-management-policy-configure

An Azure role, whether custom or built-in, grants or blocks certain permissions when assigned to a user. Although assigning a custom role to users can enforce certain behavior, you cannot set a retention period on a custom role. Option A is incorrect.

Reference Link: https://learn.microsoft.com/en-us/azure/role-based-access-control/custom-roles

Q38] You have an Azure subscription that contains multiple storage accounts.

A storage account named storage1 has a file share that stores marketing videos. Users reported that 99 percent of the assigned storage is used.

You need to ensure that the file share can support large files and store up to 100 TiB.

Which two PowerShell commands should you run? Each correct answer presents part of the solution.

a. New-AzRmStorageShare -ResourceGroupName RG1 -StorageAccountName storage1 -Name share1 -QuotaGiB 100GB

b. Set-AzStorageAccount -ResourceGroupName RG1 -Name storage1 -EnableLargeFileShare

c. Set-AzStorageAccount -ResourceGroupName RG1 -Name storage1 -Type "Standard_RAGRS"

d. Update-AzRmStorageShare -ResourceGroupName RG1 -StorageAccountName storage1 -Name share1 -QuotaGiB 102400

To ensure the file share can support large files, use the Set-AzStorageAccount command with the EnableLargeFileShare parameter.

Reference Link: https://learn.microsoft.com/en-us/powershell/module/az.storage/set-azstorageaccount#-enablelargefileshare

Option B is one of the correct answers.

The New-AzRmStorageShare command creates a new file share. Per the question, we need to increase the capacity of the existing file share. So, we do not need to create a new file share. Option A is incorrect.

Reference Link: https://learn.microsoft.com/en-us/powershell/module/az.storage/new-azrmstorageshare

We can increase the storage capacity of the existing file share to 100 TiB using the Update-AzRmStorageShare command with -QuotaGiB 102400, which is 100 TiB expressed in GiB. Option D is the other correct answer.

Reference Link: https://learn.microsoft.com/en-us/powershell/module/az.storage/update-azrmstorageshare
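If the quota value looks arbitrary, this short Python snippet shows the unit conversion behind it. The helper name `tib_to_gib` is just for illustration.

```python
GIB_PER_TIB = 1024  # binary units: 1 TiB = 1024 GiB


def tib_to_gib(tib):
    """Convert tebibytes to gibibytes."""
    return tib * GIB_PER_TIB


print(tib_to_gib(100))  # 102400
```

That is why the quota is 102400 and not 100 or 100000.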

There is no need to change the storage redundancy of the storage account to Read Access GRS. Option C is incorrect.

Q39] You have an Azure subscription and an on-premises Hyper-V virtual machine named VM1. VM1 contains a single virtual disk.

You plan to use VM1 as a template to deploy 25 new Azure virtual machines.

You need to upload VM1 to Azure.

Which cmdlet should you run?

a. Add-AzVhd

b. New-AzDataShare

c. New-AzDisk

d. New-AzVM

Even without knowing many PowerShell commands, questions like these can be easy to answer. A PowerShell cmdlet name follows a Verb-Noun pattern, with verbs such as New and Add. The verb New creates a new resource. The verb Add adds a resource to an existing resource or container.

Reference Link: https://learn.microsoft.com/en-us/powershell/scripting/developer/cmdlet/approved-verbs-for-windows-powershell-commands#new-vs-add
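To apply the verb trick to the four options, you can split each cmdlet name at the hyphen and group the options by verb. The Python below is only a sketch of that reasoning, and `split_cmdlet` is a name I made up.

```python
def split_cmdlet(name):
    """Split a Verb-Noun cmdlet name into its verb and noun."""
    verb, _, noun = name.partition("-")
    return verb, noun


options = ["Add-AzVhd", "New-AzDataShare", "New-AzDisk", "New-AzVM"]

by_verb = {}
for option in options:
    verb, noun = split_cmdlet(option)
    by_verb.setdefault(verb, []).append(noun)

print(by_verb)
```

Only one option uses the verb Add, which already hints at the answer before you look up any cmdlet.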

So, to upload a virtual hard disk from an on-premises machine to Azure, use the Add-AzVhd PowerShell command.

Reference Link: https://learn.microsoft.com/en-us/powershell/module/az.compute/add-azvhd

Option A is the correct answer.

The PowerShell command New-AzVM is used to create a new virtual machine. Option D is incorrect.

Reference Link: https://learn.microsoft.com/en-us/powershell/module/az.compute/new-azvm

The PowerShell command New-AzDisk is used to create a managed disk. Option C is incorrect.

Reference Link: https://learn.microsoft.com/en-us/powershell/module/az.compute/new-azdisk

The PowerShell command New-AzDataShare creates an Azure data share within an existing data share account. Option B is incorrect.

Reference Link: https://learn.microsoft.com/en-us/powershell/module/az.datashare/new-azdatashare

Q40] You have an Azure subscription that contains a storage account named storage1.

You need to provide storage1 with access to a partner organization. Access to storage1 must expire after 24 hours.

What should you configure?

a. A shared access signature (SAS)

b. An access key

c. Azure Content Delivery Network (CDN)

d. Lifecycle management

You can create a shared access signature that grants specific access to specific services in a storage account. You can also set the time at which the access granted by the SAS expires.

Option A is the correct answer.

You cannot make an access key expire automatically after 24 hours. All you can do is rotate the key manually, which is not a great solution. Option B is incorrect.
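For the 24-hour requirement, the start and expiry values of the SAS are just two UTC timestamps one day apart. Here is a minimal Python sketch that computes them. The helper name `sas_window` and the sample date are hypothetical, and the sketch only formats the timestamps; it does not generate or sign a token.

```python
from datetime import datetime, timedelta, timezone


def sas_window(now, hours=24):
    """Return (start, expiry) as ISO 8601 UTC strings, `hours` apart."""
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    expiry = now + timedelta(hours=hours)
    return now.strftime(fmt), expiry.strftime(fmt)


# Hypothetical point in time at which the SAS is issued.
issued = datetime(2024, 5, 1, 9, 0, 0, tzinfo=timezone.utc)
start, expiry = sas_window(issued)
print(start, expiry)
```

Once the expiry time passes, the partner's SAS stops working without anyone having to revoke it.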

