Welcome to the DP-700 Official Practice Test – Part 3.

In this part, I explain the 10 official questions from Microsoft in detail. Unlike the explanations on the Microsoft website, mine include screenshots to help you prepare for the DP-700 exam.

That said, these questions are very simple and should only be used to brush up on the basics. The real exam is rarely this easy. For more rigorous practice and deeper knowledge, check out my practice tests.

Once done, check out the DP-700 questions Part 4 and the accompanying DP-700 Practice Test video.

21] Your company has implemented a lakehouse architecture using Microsoft Fabric to support large-scale data analytics. The data engineering team needs to ensure that the data transformation processes are optimized for performance and reliability.

You need to oversee the data transformation processes to detect any bottlenecks or failures and enhance them accordingly.

What should you do?

A. Configure alerts for transformation failures.

B. Enable detailed logging for performance analysis.

C. Implement automated scaling for transformation processes.

D. Use basic monitoring tools without advanced configurations.

Well, the data transformation can happen either in Fabric pipelines or notebooks. Let’s assume the transformation happens in pipelines.

We can configure alerts for any transformation failures. However, Fabric doesn’t yet support built-in pipeline-level alerts like Azure Data Factory.

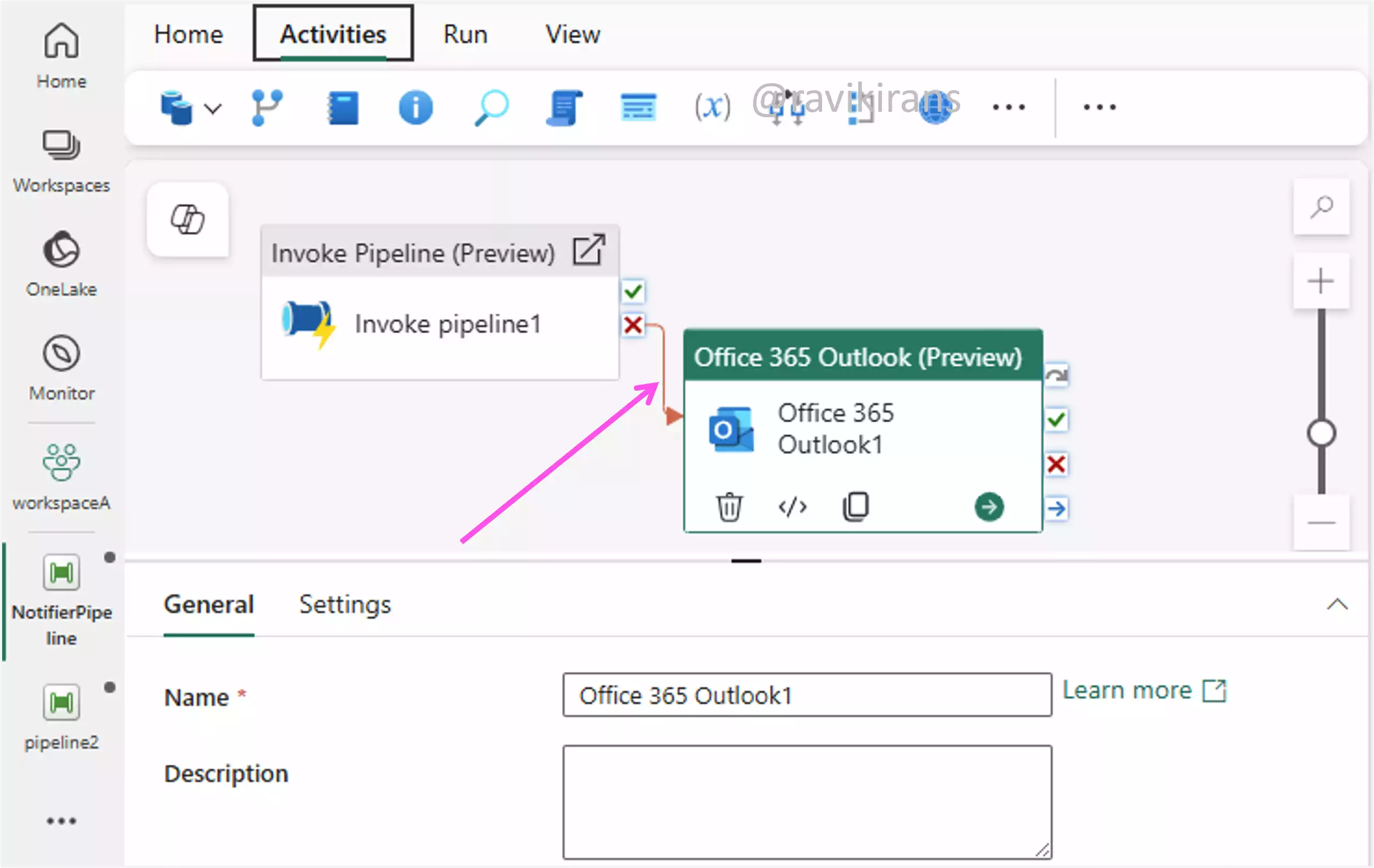

So the best approach is to use a wrapper pipeline that invokes the primary pipeline (the one containing the data transformation steps) and to attach a Teams or Office 365 Outlook activity to the On-Fail path of that Invoke pipeline activity. Whenever the primary pipeline fails, the Teams or Outlook activity sends a notification with the failure context and details.
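The wrapper pattern can be modeled in a few lines of Python. This is a minimal sketch of the control flow only, not Fabric code: `run_wrapper`, `transform_sales`, and the message text are hypothetical, the `try`/`except` stands in for the On-Fail path of the Invoke pipeline activity, and the `notify` callback stands in for the Teams or Outlook activity.

```python
from typing import Callable


def run_wrapper(primary: Callable[[], None], notify: Callable[[str], None]) -> str:
    """Model of a wrapper pipeline: invoke the primary pipeline and, only if it
    fails, follow the On-Fail path to a notification activity.

    Returns the status of the primary (invoked) pipeline.
    """
    try:
        primary()  # Invoke pipeline activity
    except Exception as exc:
        # On-Fail path: the Teams/Outlook activity sends context about the failure
        notify(f"Primary pipeline failed: {exc}")
        return "Failed"
    return "Succeeded"


# Usage: a hypothetical transformation pipeline that fails
sent_messages: list[str] = []


def transform_sales() -> None:
    raise RuntimeError("Notebook activity timed out")


status = run_wrapper(transform_sales, sent_messages.append)
print(status, sent_messages)
```

The notification fires only on the failure path, so successful runs stay quiet.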

Option A is the correct answer.

Option C is incorrect. Implementing autoscaling helps with tuning performance. It does not help with monitoring.

Depending on the actual issue, basic monitoring tools (option D) and detailed logging (option B) can also help. Given the limited information in the question, we will treat them as incorrect.

22] Your organization uses Microsoft Fabric to manage and monitor various data activities, including data pipelines, dataflows, and semantic models. Recently, issues with data ingestion processes failing intermittently have caused delays in data availability for reporting.

You need to determine the cause of these failures and ensure effective monitoring of data ingestion processes to prevent future disruptions.

What should you do?

A. Enable alerts only for non-critical issues.

B. Increase data ingestion frequency.

C. Use a basic logging tool for monitoring.

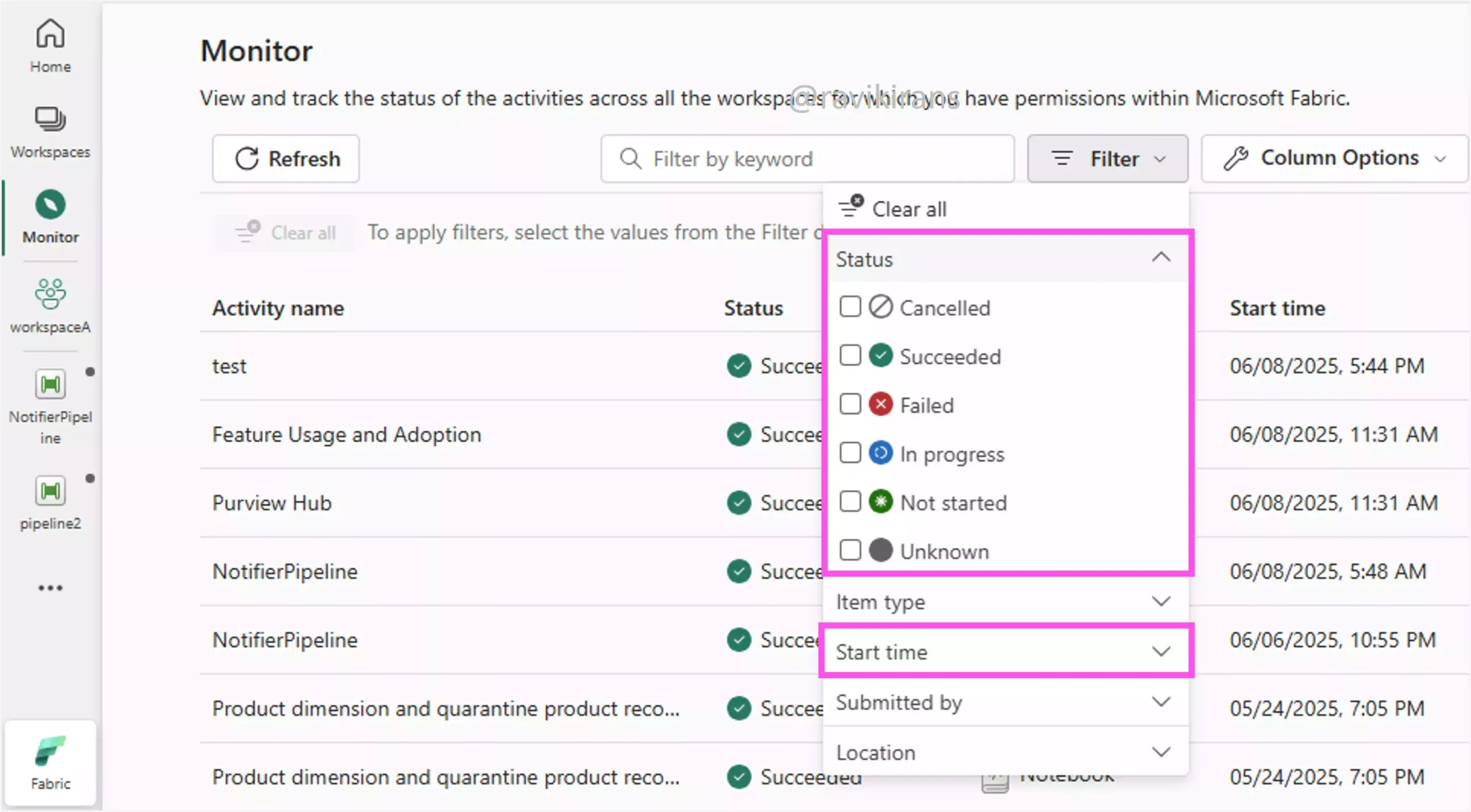

D. Use the Monitor hub to filter activities by status and start time.

Of all the given options, using the Monitor hub to filter activities by status and start time is the best one. Option D is the correct answer.
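Filtering by status and start time narrows a long list of runs down to the failures within a time window, which is what makes intermittent patterns visible. The sketch below mimics that filter on a hypothetical list of runs; the `ActivityRun` fields and the sample data are illustrative assumptions, not the Monitor hub's actual schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ActivityRun:
    """One row in a (hypothetical) list of Monitor hub activity runs."""
    item_name: str
    item_type: str
    status: str
    start_time: datetime


def filter_runs(runs: list[ActivityRun], status: str, since: datetime) -> list[ActivityRun]:
    """Keep runs with the given status that started at or after `since`."""
    return [r for r in runs if r.status == status and r.start_time >= since]


runs = [
    ActivityRun("IngestSales", "Data pipeline", "Failed", datetime(2025, 1, 10, 2, 0)),
    ActivityRun("IngestSales", "Data pipeline", "Succeeded", datetime(2025, 1, 11, 2, 0)),
    ActivityRun("IngestSales", "Data pipeline", "Failed", datetime(2025, 1, 12, 2, 0)),
    ActivityRun("LoadCustomers", "Dataflow Gen2", "Failed", datetime(2025, 1, 5, 2, 0)),
]

recent_failures = filter_runs(runs, "Failed", datetime(2025, 1, 10))
for run in recent_failures:
    print(run.item_name, run.start_time.isoformat())
```

Here the filter surfaces two failures of the same ingestion pipeline two days apart, the kind of recurring pattern worth investigating.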

Option A is incorrect. Well, alerts are required, especially for critical issues. Using them only for non-critical issues defeats the very purpose.

Option B is incorrect. Increasing data ingestion frequency worsens failures by straining resources without solving the root cause.

Option C is incorrect. A "basic" logging tool is unlikely to offer the run history and filtering needed to diagnose intermittent failures, so it is not the best answer.

23] An organization has implemented Microsoft Fabric for data transformation processes. However, there have been instances where semantic model refreshes fail without notification, impacting business intelligence reports.

You need to set up alerts to monitor semantic model refresh events and receive notifications upon failures.

Each correct answer presents part of the solution. Which three actions should you take?

A. Configure a filter

B. Notify via Teams for all refresh events.

C. Select Fabric workspace item events in Real-Time hub.

D. Send an email for alert actions.

E. Set alerts for all refresh events without filtering.

F. Use a KQL Queryset to monitor refresh statuses.

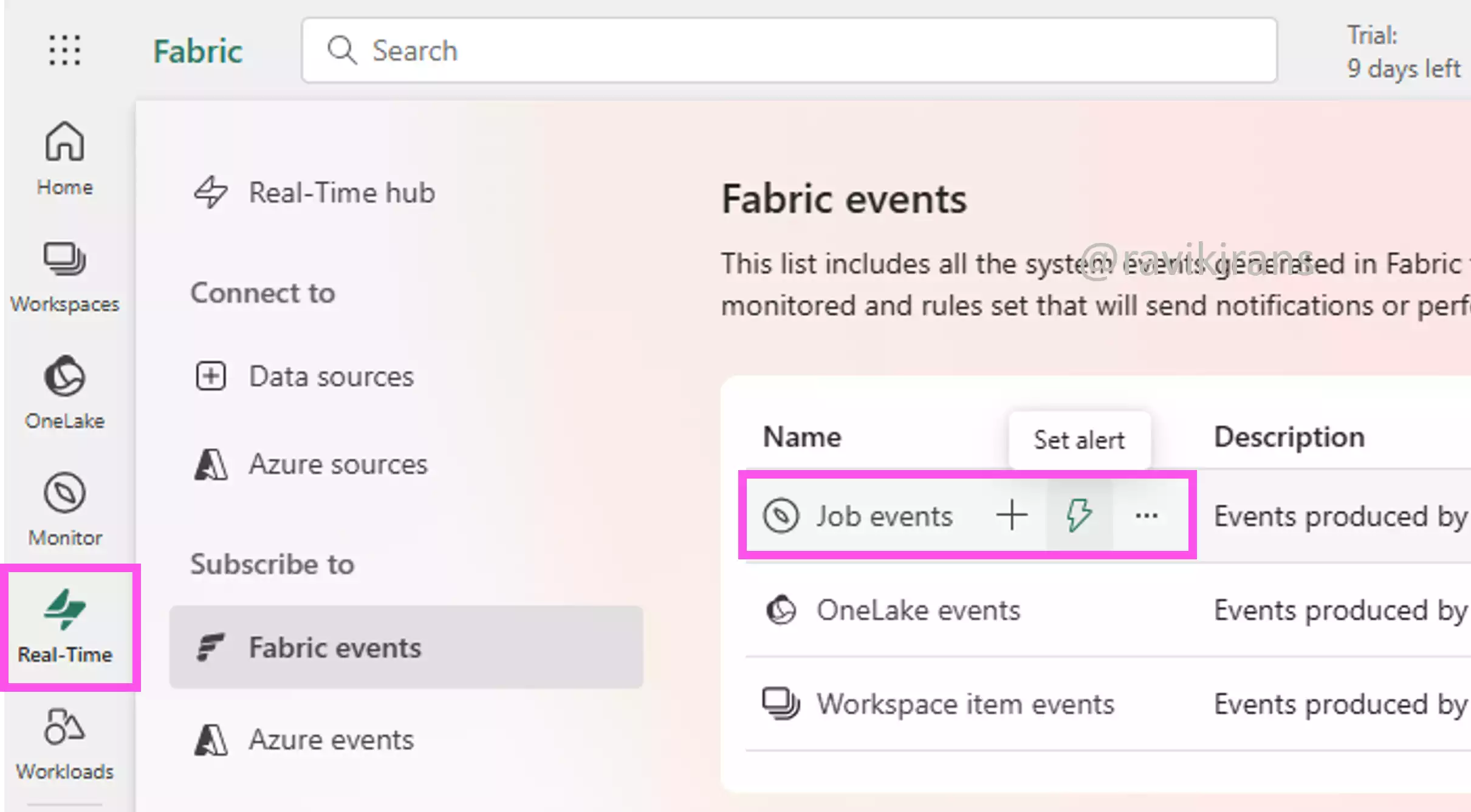

Well, I think the answer choice is slightly incorrect. To monitor semantic model refreshes, we select Fabric job events, not Fabric workspace item events, in the Real-Time hub.

Reference Link: https://learn.microsoft.com/en-us/fabric/real-time-hub/fabric-events-overview?utm_source=chatgpt.com#use-job-events

So, option C is one of the correct answers, although the correct event category is job events, not workspace item events.

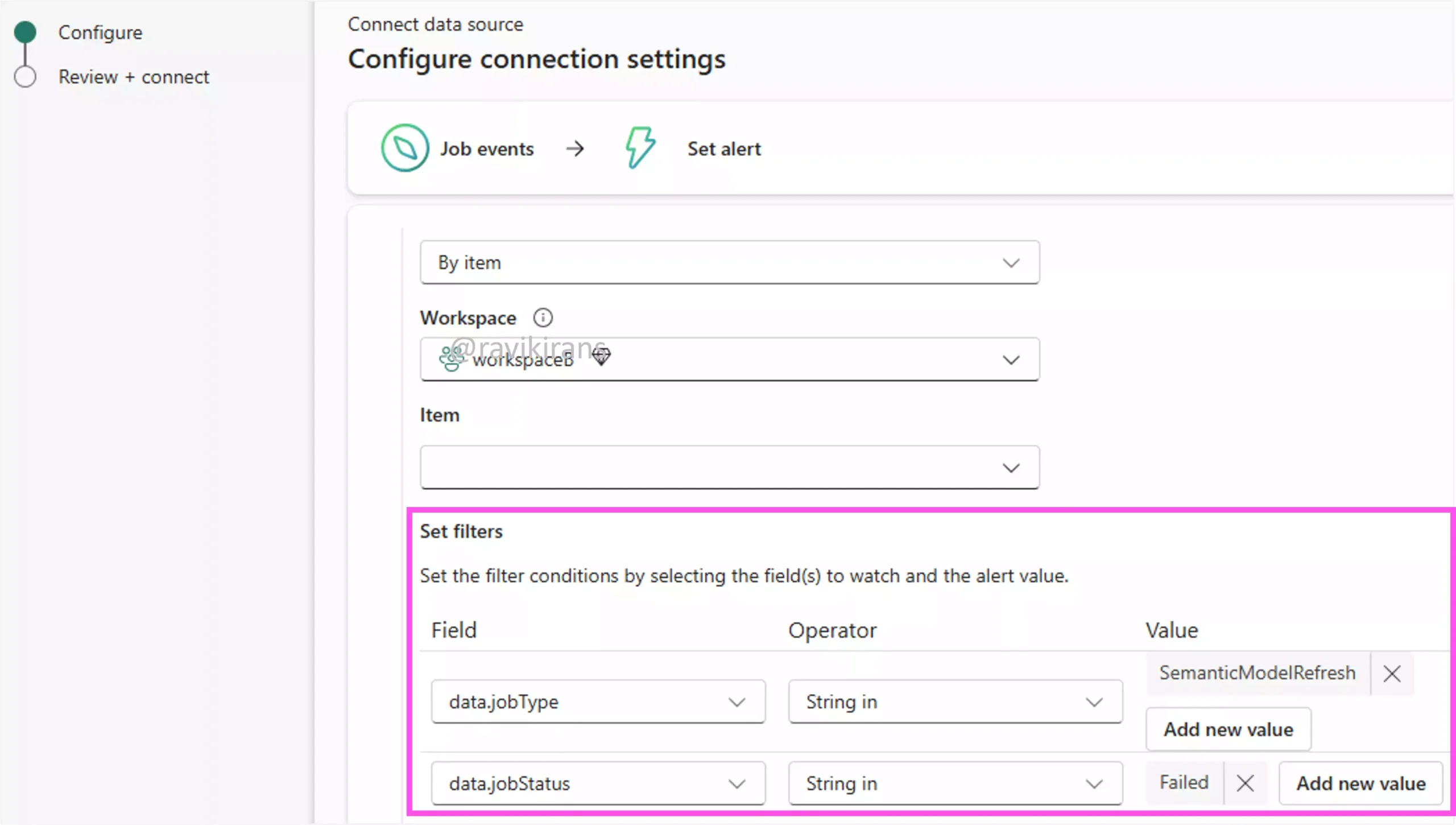

In the job events, create an alert. Click the source to open the pop-up box (shown below). In the alert, set filters for the job type and job status.

Option A is also one of the correct answers.

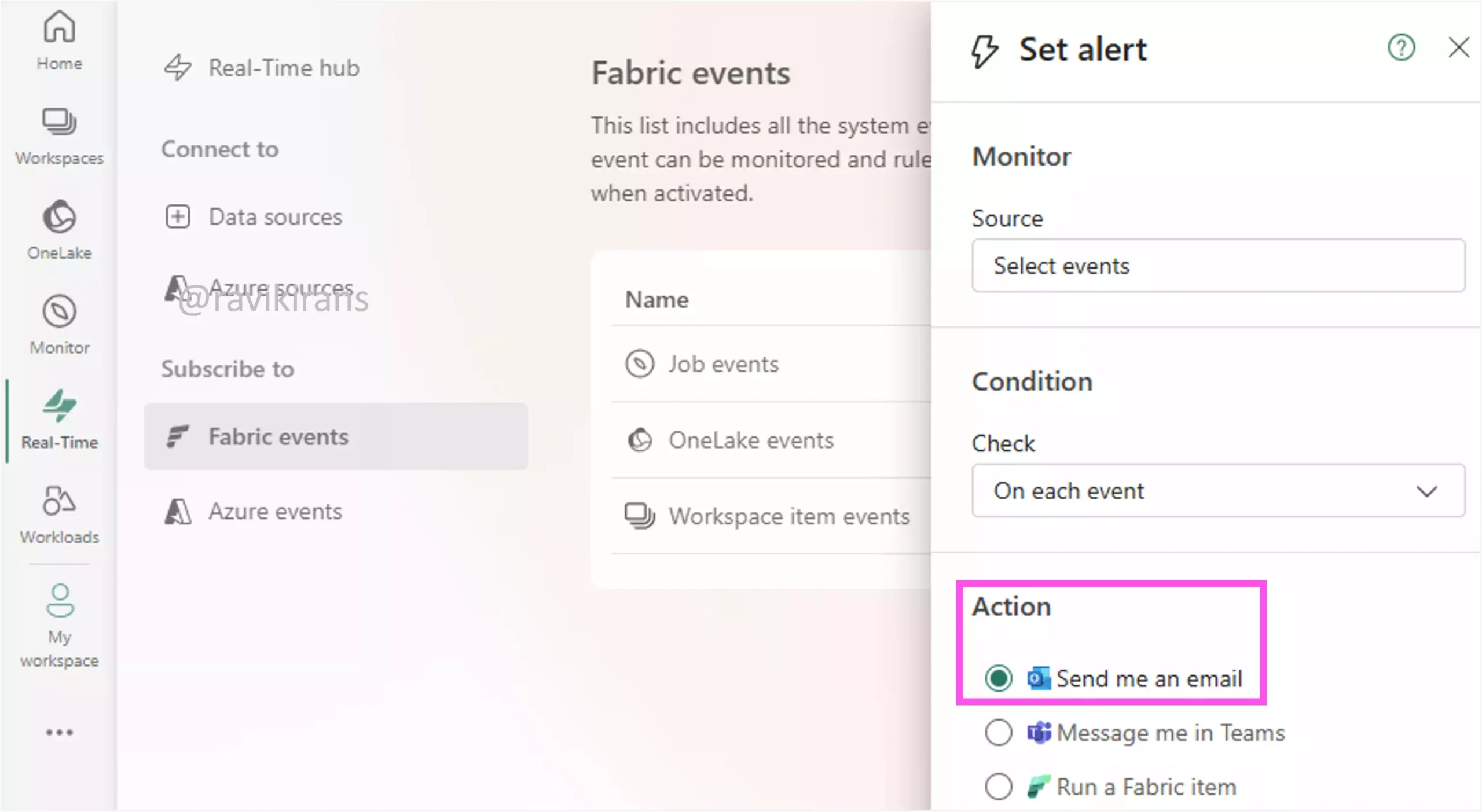

Finally, configure the action to send an email when the alert condition is met.

Option D is the last correct answer.
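Conceptually, the alert is a filter (option A) followed by an action (option D). The Python sketch below expresses that logic over a few hypothetical job events; the field names (`item_type`, `job_status`, `item_name`) and the `send_email` callback are assumptions for illustration, not the schema of Fabric job events or any Activator API.

```python
from typing import Callable


def should_alert(event: dict) -> bool:
    """Filter: only failed refresh jobs on semantic models."""
    return event.get("item_type") == "SemanticModel" and event.get("job_status") == "Failed"


def process_job_events(events: list[dict], send_email: Callable[[str], None]) -> int:
    """Apply the filter, then run the email action for each matching event."""
    sent = 0
    for event in events:
        if should_alert(event):
            send_email(f"Refresh failed for semantic model '{event['item_name']}'")
            sent += 1
    return sent


events = [
    {"item_type": "SemanticModel", "item_name": "SalesModel", "job_status": "Succeeded"},
    {"item_type": "SemanticModel", "item_name": "SalesModel", "job_status": "Failed"},
    {"item_type": "Pipeline", "item_name": "IngestSales", "job_status": "Failed"},
]
outbox: list[str] = []
count = process_job_events(events, outbox.append)
print(count, outbox)
```

Without the filter, all three events would trigger an email, including a successful refresh and a failure that has nothing to do with semantic models.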

Option B is not suitable. Notifying the team about every refresh event, including successful ones, creates noise; only failures need a notification.

Option E is incorrect. A filter is required so that alerts fire only for failed refreshes.

Option F is incorrect. Using a KQL Queryset to monitor refresh statuses is useful for detailed analysis, but not ideal for real-time alerts.

24] Your company uses Microsoft Fabric to manage data pipelines and has integrated Microsoft Power BI for reporting. The team experiences delays in report updates due to inefficient refresh schedules.

You need to optimize the refresh process without overloading system resources.

What action should you take?

A. Increase buffer time between refresh attempts.

B. Increase refresh frequency for all tables.

C. Increase refresh frequency for specific tables.

D. Refresh specific tables and partitions.

Inefficient refresh schedules are poorly timed or excessive refreshes that strain resources, delay reports, or miss timely data updates (stale data).

Option A is incorrect. The team already experiences delays. Increasing buffer time between refresh attempts could further delay report updates by extending wait times.

Option B is incorrect. Increasing refresh frequency for all tables will overload system resources.

Between options C and D, option D is better because it refreshes only the specific tables and partitions that need it. Option C refreshes full tables even if only a portion of the data has changed. Option D is the correct answer, as it provides partition-level efficiency.
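In Power BI, refreshing specific tables and partitions is typically done through the enhanced refresh REST API (a `POST` to `.../groups/{groupId}/datasets/{datasetId}/refreshes`) with an `objects` list in the request body. The sketch below only builds the URL and JSON body; it does not send the request or handle authentication, and the workspace ID, dataset ID, table, and partition names are hypothetical placeholders.

```python
import json
from typing import Optional


def build_refresh_request(
    workspace_id: str,
    dataset_id: str,
    targets: list[tuple[str, Optional[str]]],
) -> tuple[str, str]:
    """Return (url, json_body) for an enhanced refresh of selected tables/partitions.

    Each target is (table, partition); a partition of None refreshes the whole table.
    """
    url = (
        "https://api.powerbi.com/v1.0/myorg/"
        f"groups/{workspace_id}/datasets/{dataset_id}/refreshes"
    )
    objects = []
    for table, partition in targets:
        obj = {"table": table}
        if partition is not None:
            obj["partition"] = partition
        objects.append(obj)
    body = {"type": "Full", "commitMode": "transactional", "objects": objects}
    return url, json.dumps(body)


url, payload = build_refresh_request(
    "<workspace-id>",
    "<dataset-id>",
    [("Sales", "Sales-2025-01"), ("Customers", None)],
)
print(url)
print(payload)
```

Only the current month's `Sales` partition and the small `Customers` table are reprocessed, instead of the full fact table, which is the saving that option D relies on.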

25] Your organization uses Microsoft Fabric to manage data pipelines for processing sales data. Recently, a pipeline failed during execution, and the error logs indicate a missing data source in one of the Lookup activities.

You need to configure the pipeline to fail gracefully with a clear error message when such issues occur in the future.

What should you do?

A. Add a conditional check to handle missing data sources.

B. Add a Fail activity with a custom error message and code.

C. Log errors and continue pipeline execution.

D. Retry the pipeline execution automatically upon encountering missing data sources.

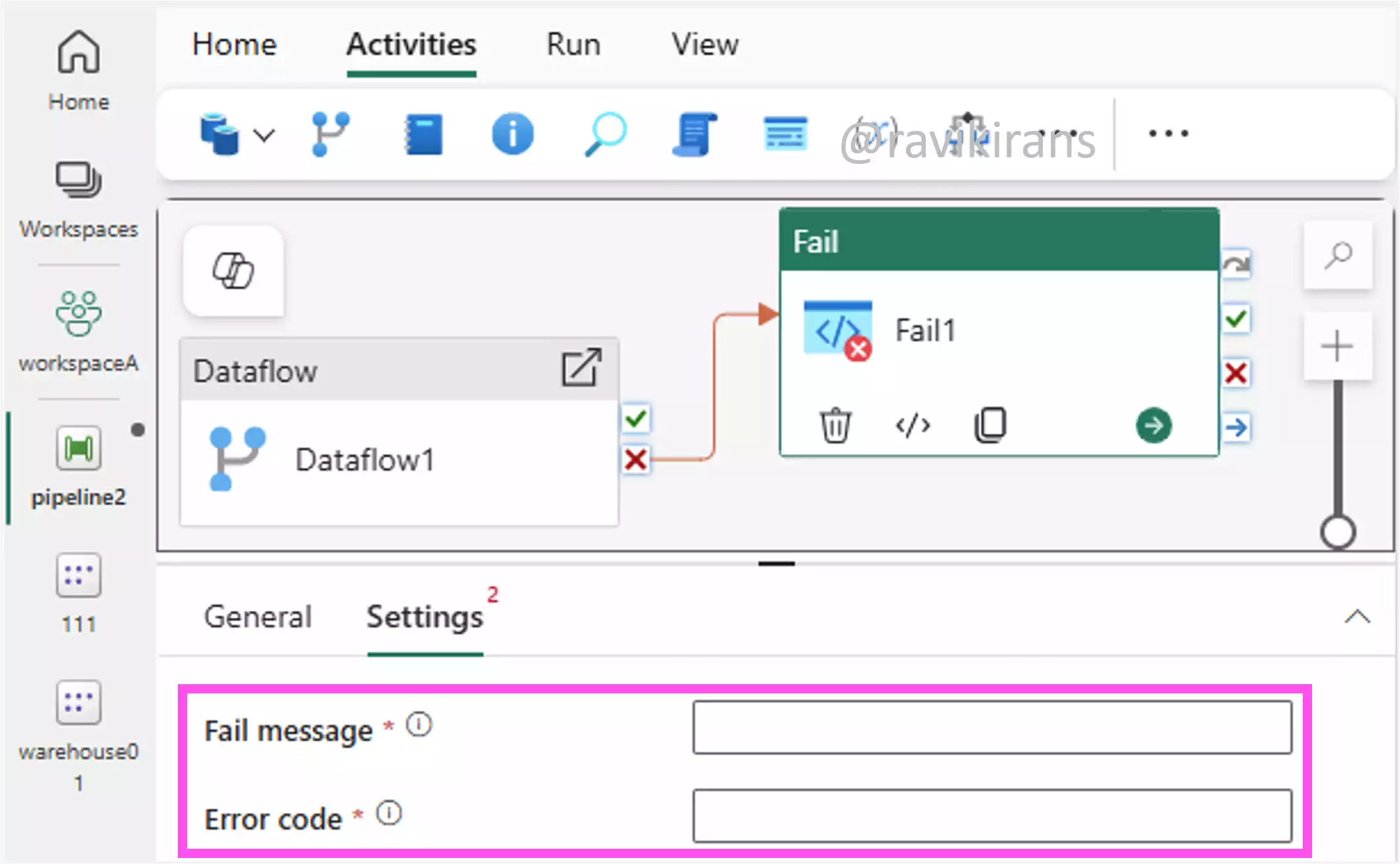

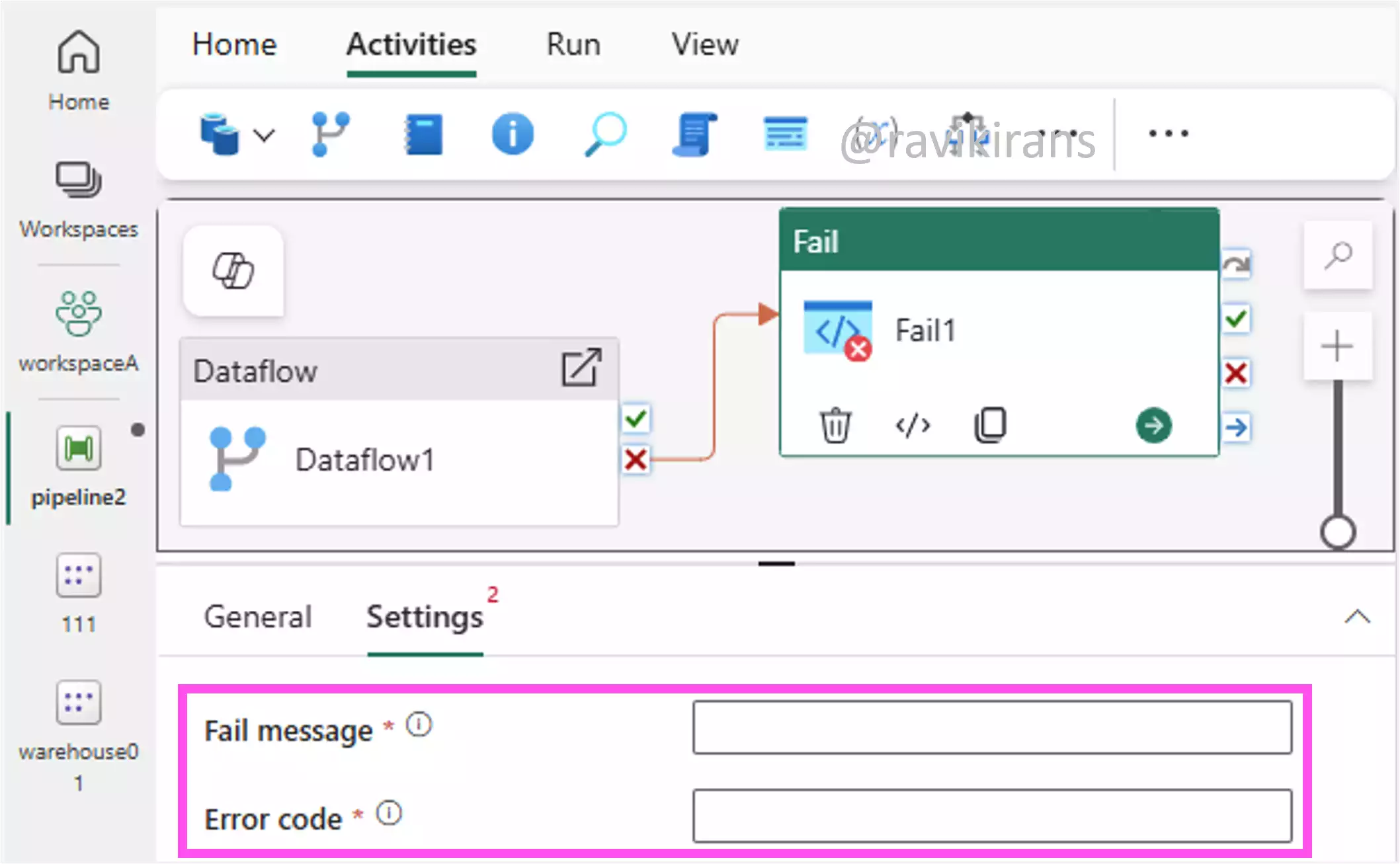

If you need a pipeline to fail gracefully, add a Fail activity to the On-Fail path of the activity that you expect to fail. In the Fail activity, you can enter a custom message and error code, giving the user more details.
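Behind the designer, a pipeline stores each activity as JSON. The Python sketch below builds a Fail activity wired to the On-Fail path of a Lookup activity. The property names (`type`, `dependsOn`, `dependencyConditions`, `typeProperties.message`, `typeProperties.errorCode`) follow the JSON that Azure Data Factory uses for its Fail activity, so treat them as an assumption for Fabric, whose definition may differ in detail; the activity names, message, and error code are hypothetical.

```python
import json


def fail_activity(name: str, after: str, message: str, error_code: str) -> dict:
    """Fail activity that runs only when `after` fails (the On-Fail path)."""
    return {
        "name": name,
        "type": "Fail",
        "dependsOn": [
            {"activity": after, "dependencyConditions": ["Failed"]}
        ],
        "typeProperties": {
            "message": message,        # failure message shown to the user
            "errorCode": error_code,   # custom code for triage and alerting
        },
    }


activity = fail_activity(
    name="FailOnMissingSource",
    after="LookupSalesSource",
    message="Lookup 'LookupSalesSource' failed: the sales data source is missing.",
    error_code="SALES_SOURCE_MISSING",
)
print(json.dumps(activity, indent=2))
```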

Option B is the correct answer.

Option A is incorrect. A conditional check can help detect the issue, but on its own it does not terminate the pipeline with a clear error message; you would still need a Fail activity.

Options C and D are also incorrect. Logging errors and retrying pipeline executions will not enable the pipeline to fail gracefully.

26] Your organization uses Microsoft Fabric for streaming data from IoT devices. There are issues with data not being processed correctly, possibly due to errors in the eventstream engine.

You need to resolve the errors causing data processing issues.

What should you do?

A. Check for data conversion errors.

B. Check Runtime logs for error entries by severity.

C. Increase memory allocation for the engine.

D. Restart the engine to clear temporary issues.

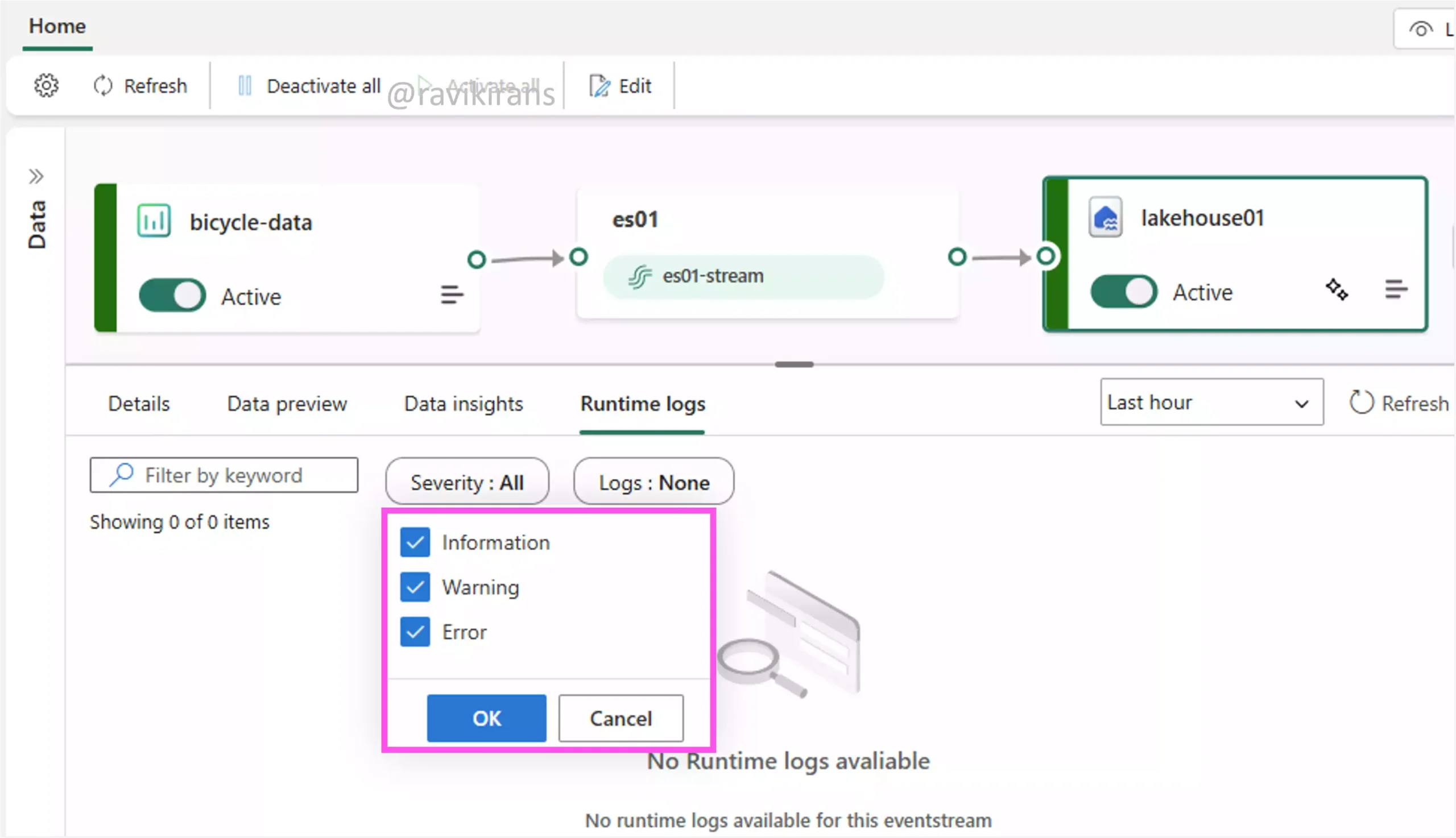

In the eventstream, you can check the runtime logs, which help you identify issues such as schema mismatches or malformed events, understand why processing failed, and take corrective action. This makes them the most effective troubleshooting method.

Reference Link: https://learn.microsoft.com/en-us/fabric/real-time-intelligence/event-streams/monitor#runtime-logs
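Checking runtime logs by severity amounts to filtering the entries down to errors and then grouping them to see which problem dominates. The sketch below applies that idea to a few hypothetical log entries; the field names, severity levels, and error types are illustrative assumptions, not the eventstream runtime log schema.

```python
from collections import Counter

# Assumed severity ranking for the hypothetical log entries below
SEVERITY_RANK = {"Information": 0, "Warning": 1, "Error": 2}


def at_or_above(logs: list[dict], min_severity: str) -> list[dict]:
    """Keep entries whose severity is at least `min_severity`."""
    threshold = SEVERITY_RANK[min_severity]
    return [entry for entry in logs if SEVERITY_RANK[entry["severity"]] >= threshold]


logs = [
    {"severity": "Information", "type": "Heartbeat", "detail": "Engine running"},
    {"severity": "Error", "type": "SchemaMismatch", "detail": "Column 'temp' expected double"},
    {"severity": "Warning", "type": "LateEvent", "detail": "Event arrived after window"},
    {"severity": "Error", "type": "SchemaMismatch", "detail": "Column 'temp' expected double"},
    {"severity": "Error", "type": "MalformedEvent", "detail": "Invalid JSON payload"},
]

errors = at_or_above(logs, "Error")
print(Counter(entry["type"] for entry in errors).most_common())
```

Grouping the errors points at the schema mismatch as the main cause, something that adding memory or restarting the engine would not fix.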

Option B is the correct answer.

Option A is incorrect. Checking for data conversion errors is useful but too narrow, as it may overlook other critical issues in the eventstream engine.

Option C is incorrect. This option assumes resource constraints are the issue. Unless you check the runtime logs, you cannot get to the root cause issue.

Option D is incorrect. Restarting the engine may offer a quick fix, but it doesn’t resolve root cause issues.

27] Your company uses Microsoft Fabric to manage data pipelines for real-time analytics. A recent pipeline execution failed due to a data conversion error.

You need to resolve the error to ensure successful pipeline execution.

What should you do?

A. Adjust data type mappings.

B. Ignore data conversion errors

C. Increase CPU allocation

D. Review Data insights for error metrics.

Option B is incorrect. If we ignore any error, we can never ensure successful pipeline execution.

Option C is incorrect. Throwing more resources at a problem will not magically fix the problem (data conversion errors).

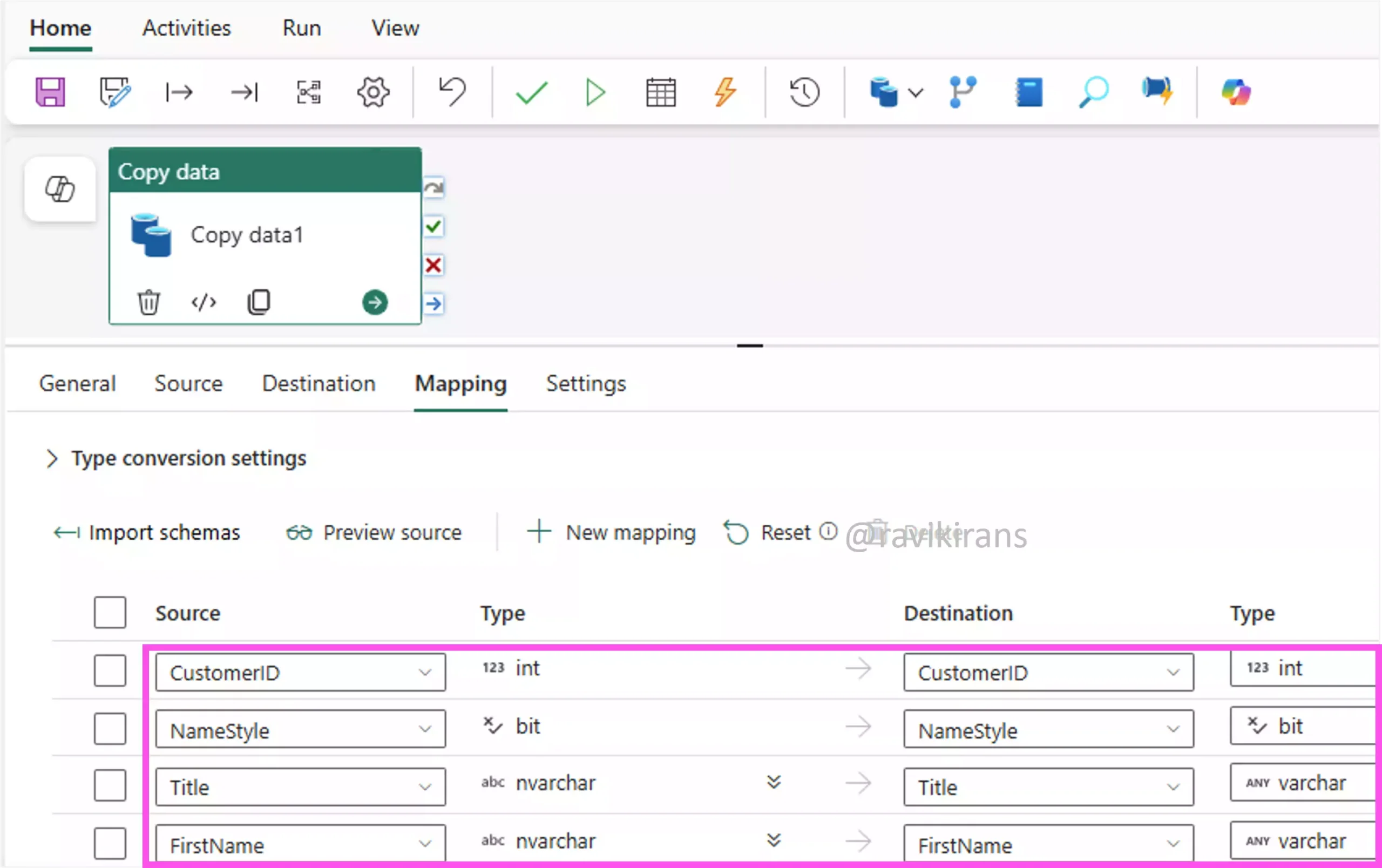

The answer to this question is split between options A and D because the question does not provide enough detail. A data conversion error can have many causes, for example, data type mismatches, null or missing values, incompatible formats (such as dates), and truncation errors.

Option A fixes the error only if there is a data type mismatch between the source and the target.

For all other issues, reviewing the error metrics for the failed pipeline run might help.
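To see why option A helps only in the type-mismatch case, the sketch below applies a column-to-type mapping to three sample rows. It is plain Python, not the pipeline's mapping feature; the column names, sample values, and the `convert_rows` helper are hypothetical.

```python
from datetime import date
from typing import Callable


def convert_rows(
    rows: list[dict], mapping: dict[str, Callable]
) -> tuple[list[dict], list[tuple[int, str]]]:
    """Cast each column with its mapped type; collect (row index, error) on failure."""
    converted, errors = [], []
    for i, row in enumerate(rows):
        try:
            converted.append({col: cast(row[col]) for col, cast in mapping.items()})
        except (ValueError, TypeError) as exc:
            errors.append((i, str(exc)))
    return converted, errors


rows = [
    {"order_id": "101", "order_date": "2025-01-15"},
    {"order_id": "102", "order_date": "2025-01-16"},
    {"order_id": "103", "order_date": "15/01/2025"},  # incompatible date format
]

wrong_mapping = {"order_id": int, "order_date": int}                 # type mismatch
fixed_mapping = {"order_id": int, "order_date": date.fromisoformat}  # adjusted mapping

_, wrong_errors = convert_rows(rows, wrong_mapping)
fixed_rows, fixed_errors = convert_rows(rows, fixed_mapping)
print(len(wrong_errors), len(fixed_errors))
```

Adjusting the mapping clears the mismatch on the first two rows, while the remaining error on the third row (a bad date format) is the kind of issue that reviewing the error details of the failed run would surface.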

28] Your company uses Microsoft Fabric to manage data pipelines for large-scale analytics. Recently, several pipeline executions failed due to errors in data transformation, causing delays in reporting and analysis.

You need to implement a mechanism that allows a pipeline to terminate with specific error details when conditions like missing data or internal errors occur.

Each correct answer presents part of the solution. Which three actions should you perform?

A. Add a Fail activity to the pipeline.

B. Configure the Fail activity with custom error details.

C. Create a new pipeline.

D. Use a Dataflow activity for error detection.

E. Use a Script activity for error handling.

F. Use a Wait activity to delay pipeline execution.

This question is very similar to question 25 in this blog. Following the answer to question 25, we add a Fail activity to the pipeline and configure it with custom error details.

So, options A and B are the correct answers.

I do not think option C is correct, as we can add the Fail activity to the existing pipeline itself (as shown above).

Option F is incorrect as a wait activity only delays execution.

Option D is incorrect as dataflow activities focus on data transformation, not error detection, making them unsuitable for managing pipeline termination with specific error details.

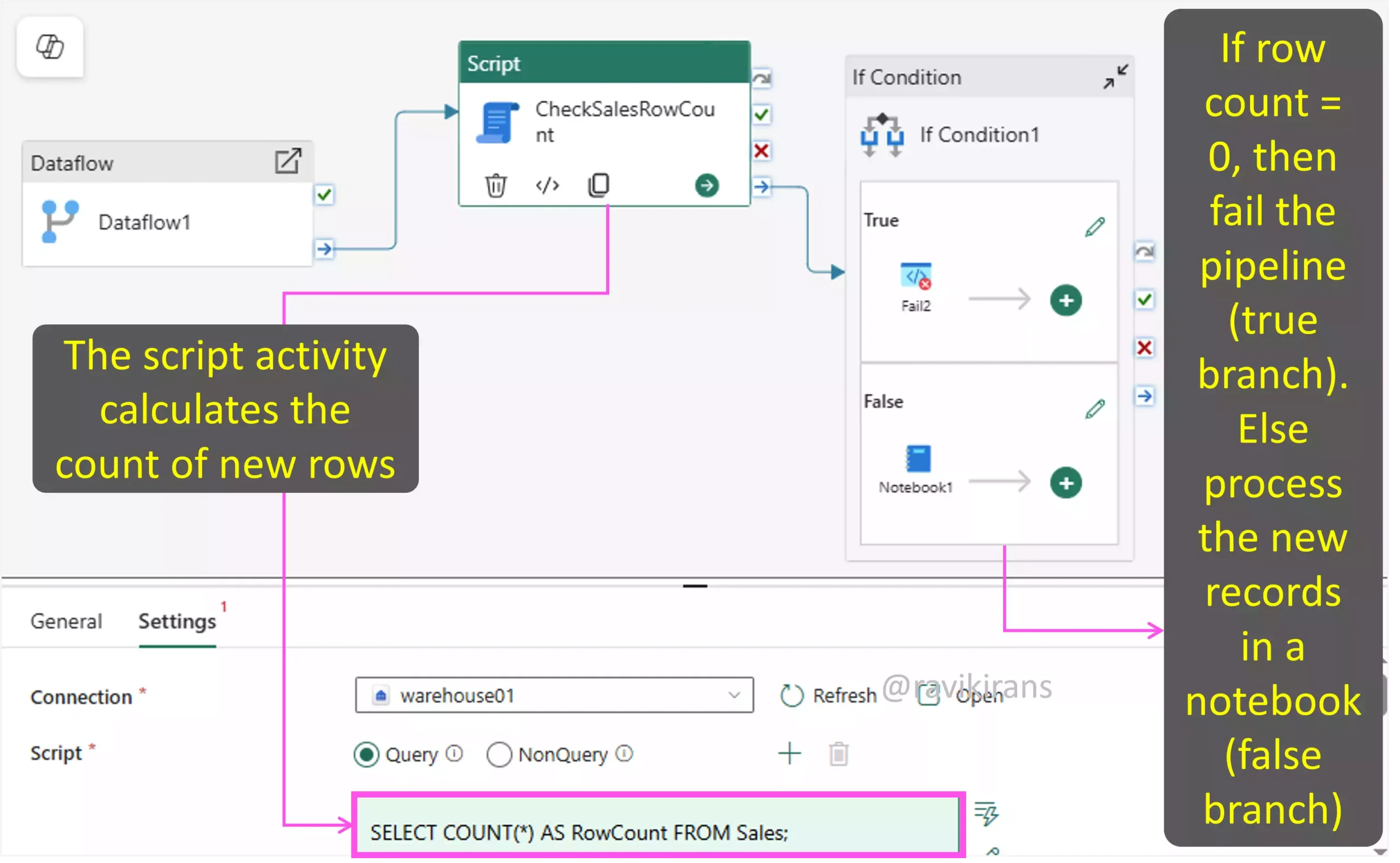

Since the question asks for three actions, we can fit the Script activity (option E) into an existing architecture, as shown in the screenshot above. Because we need to detect conditions like missing or empty data, we can insert a Script activity that counts the new rows.

If the row count returned by the Script activity is 0 (the condition evaluated by the If Condition activity), the pipeline fails with a Fail activity (as we did earlier). Otherwise, if the Dataflow activity produced rows, the Notebook activity transforms the data before inserting it into the destination.
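The If Condition logic can be sketched in Python as follows. The output shape (`resultSets[0].rows[0]`) follows what the Script activity returns in Azure Data Factory and is an assumption here, as is the column alias `NewRowCount`. In the pipeline itself, the condition would be an expression along the lines of `@equals(activity('CountNewRows').output.resultSets[0].rows[0].NewRowCount, 0)`, where `CountNewRows` is a hypothetical activity name.

```python
def choose_branch(script_output: dict) -> str:
    """Mimic the If Condition: route to the Fail activity when no new rows arrived."""
    new_rows = script_output["resultSets"][0]["rows"][0]["NewRowCount"]
    if new_rows == 0:
        return "Fail"      # True branch: Fail activity with custom message and code
    return "Notebook"      # False branch: transform and load the new rows


empty_load = {"resultSetCount": 1, "resultSets": [{"rowCount": 1, "rows": [{"NewRowCount": 0}]}]}
normal_load = {"resultSetCount": 1, "resultSets": [{"rowCount": 1, "rows": [{"NewRowCount": 1250}]}]}
print(choose_branch(empty_load), choose_branch(normal_load))
```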

29] Your company uses Microsoft Fabric to manage data pipelines for a large-scale analytics solution. Recently, several pipeline executions failed due to errors in the Lookup activity, which returned no matching data.

You need to configure the pipeline to terminate with specific error details when the Lookup activity returns no matching data.

Each correct answer presents part of the solution. Which two actions should you take?

A. Add a Fail activity after the Lookup activity.

B. Add a Retry activity after the Lookup activity.

C. Add a Wait activity after the Lookup activity.

D. Configure the Fail activity with specific error details.

E. Use a Retry activity to handle the error.

Again, the explanation to this question is very similar to the explanation in question 25. To let the pipeline terminate with specific error details when the lookup activity returns no matching data, add a Fail activity after the lookup activity and configure the Fail activity with error details.
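One practical detail: a Lookup activity that finds no rows usually still succeeds, so the Fail activity is typically placed in the True branch of an If Condition that tests the Lookup output (for example, `@equals(activity('LookupRegion').output.count, 0)` when First row only is cleared). The Python sketch below models that check and the Fail activity's custom error. `LookupRegion`, the error code, and the `{"count": ..., "value": [...]}` output shape (taken from Azure Data Factory's Lookup activity) are assumptions.

```python
class PipelineFailure(Exception):
    """Stands in for a Fail activity: carries a custom error code and message."""

    def __init__(self, error_code: str, message: str):
        super().__init__(f"[{error_code}] {message}")
        self.error_code = error_code


def check_lookup(output: dict, lookup_name: str) -> list[dict]:
    """Return the matched rows, or fail with specific details when there are none."""
    if output.get("count", 0) == 0:
        raise PipelineFailure("LOOKUP_NO_MATCH", f"{lookup_name} returned no matching rows.")
    return output["value"]


try:
    check_lookup({"count": 0, "value": []}, "LookupRegion")
    outcome = "Succeeded"
except PipelineFailure as err:
    outcome = str(err)
print(outcome)
```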

Options A and D are the correct answers.

Options B, C, and E are incorrect. Retries and waiting longer will not help if the data is not available.

30] Your company is using Microsoft Fabric to manage data pipelines for a lakehouse solution. Recently, a pipeline failed due to a script activity error.

You need to ensure that the pipeline execution fails with a customized error message and code.

Each correct answer presents part of the solution. Which two actions should you perform?

A. Add a Fail activity.

B. Configure custom error settings in the Fail activity.

C. Enable logging for pipeline activities.

D. Use a Try-Catch activity.

The explanation is the same as for several previous questions in this blog (refer to question 25): add a Fail activity and configure it with a custom error message and code. Options A and B are the correct answers.

Check out my DP-700 practice tests.
